Introduction

Advances in large language models (LLMs) have led to breakthroughs in tasks like reasoning, learning from experience, and following instructions. Yet because pretraining corpora are overwhelmingly English, LLMs' abilities in other languages remain limited.

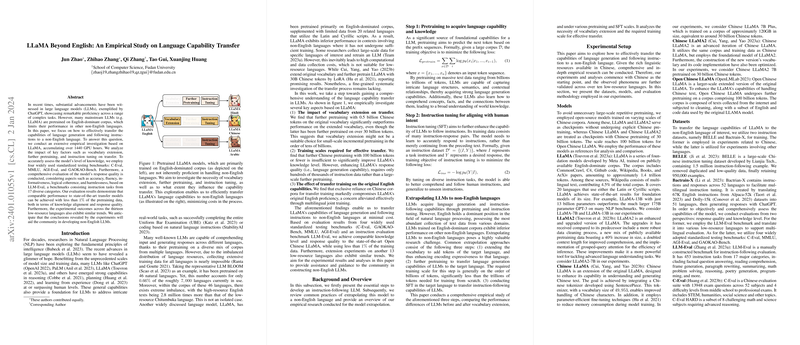

This paper explores methods for transferring the capabilities of LLMs, specifically LLaMA, to non-English languages at minimal cost. Drawing on experiments that accumulate over 1440 GPU hours, the research evaluates vocabulary extension, further pretraining, and instruction tuning as the key factors influencing the transfer process. Testing on both knowledge benchmarks and instruction-following tasks provides a holistic assessment of the model's language capabilities.

Analyzing Transfer Factors

The paper reports an unexpected finding about vocabulary extension. Although extending the vocabulary is widely assumed to help, it shows no clear advantage in transferring language capabilities. Surprisingly, vocabulary-extended models further pretrained on 30 billion tokens perform worse than LLaMA models that keep the original vocabulary and are further pretrained on just 0.5 billion tokens.
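
To make concrete what vocabulary extension changes, here is a minimal sketch using a toy greedy longest-match tokenizer with UTF-8 byte fallback. It is an illustration only, not LLaMA's actual tokenizer; the `tokenize` function and the example vocabulary are hypothetical. It shows the usual motivation for extension, namely shorter token sequences for the target language.

```python
def tokenize(text, vocab):
    """Toy greedy longest-match tokenizer (hypothetical, for illustration).

    Characters not covered by `vocab` fall back to one token per UTF-8 byte.
    """
    max_len = max((len(t) for t in vocab), default=1)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate piece first, then shorter ones.
        for width in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + width]
            if piece in vocab:
                tokens.append(piece)
                i += width
                break
        else:
            # No vocabulary entry matched: emit one token per UTF-8 byte.
            tokens.extend(f"<0x{b:02X}>" for b in text[i].encode("utf-8"))
            i += 1
    return tokens


text = "语言模型"
base_tokens = tokenize(text, set())                  # no Chinese entries
extended_tokens = tokenize(text, {"语言", "模型"})    # assumed extended vocabulary
print(len(base_tokens), len(extended_tokens))
```

Shorter sequences make encoding cheaper, but the newly added tokens start with untrained embeddings, so shorter input does not by itself mean better transfer, which is consistent with the finding above.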

In terms of training scale, the results indicate that language generation capabilities such as fluency and logical coherence depend less on a large volume of further pretraining than on a significant amount of instruction tuning. Model knowledge such as factual accuracy, by contrast, is not greatly affected by either further pretraining on Chinese or vocabulary extension.

Maintaining English Proficiency

Another aspect considered is the impact of focused language transfer training on an LLM's original English capabilities. Models trained exclusively on Chinese data show reduced English proficiency, which suggests a trade-off between learning a new language and maintaining existing capabilities. The solution appears to lie in multilingual joint training, which helps preserve English skills while extending to new languages.
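
One way to picture multilingual joint training is as a data-mixing step that keeps a share of English examples in every training set. The sketch below is a minimal illustration under stated assumptions: the `mix_corpora` helper, the `english_ratio` parameter, and its default value are hypothetical and are not taken from the paper.

```python
import random


def mix_corpora(english, chinese, english_ratio=0.5, size=None, seed=0):
    """Sample a joint training set with about `english_ratio` English examples.

    `english_ratio` is an assumed knob for illustration, not a value from the paper.
    """
    if not 0.0 <= english_ratio <= 1.0:
        raise ValueError("english_ratio must be between 0 and 1")
    rng = random.Random(seed)
    if size is None:
        size = len(english) + len(chinese)
    n_en = round(size * english_ratio)
    n_zh = size - n_en
    # Sample with replacement so a small corpus can still fill its quota.
    mixed = [rng.choice(english) for _ in range(n_en)]
    mixed += [rng.choice(chinese) for _ in range(n_zh)]
    rng.shuffle(mixed)
    return mixed


batch = mix_corpora(["Hello.", "Thanks."], ["你好。", "谢谢。"], english_ratio=0.25, size=8)
print(batch)
```

Setting `english_ratio` to 0 reproduces the Chinese-only setup described above, the configuration associated with the drop in English proficiency.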

Expanding to Multiple Languages

The research also extends beyond Chinese to 13 low-resource languages to check whether the transfer process holds across different languages. The results are consistent: with suitable instruction tuning, the LLaMA model adapts quickly to a new language regardless of that language's resource level.

Conclusion and Implications

Overall, the paper concludes that effective language capability transfer to non-English languages can be achieved with significantly less data than previously thought necessary. The research also underlines the internalized cross-lingual alignment in LLMs, observed through code-switching instances in the model's responses, which may play a role in the transferability of language capabilities. These insights have the potential to guide the development of more capable and efficient multilingual LLMs, lowering the barriers for languages with fewer resources.
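
The code-switching observation mentioned above can be approximated with a simple script check. The following is a rough heuristic sketch, not the paper's measurement procedure: the function names are hypothetical, and it only flags responses that mix Han characters with ASCII Latin letters.

```python
def scripts_in(text):
    """Return the set of scripts ("han", "latin") found in `text`."""
    scripts = set()
    for ch in text:
        if "\u4e00" <= ch <= "\u9fff":
            # CJK Unified Ideographs block
            scripts.add("han")
        elif ch.isascii() and ch.isalpha():
            scripts.add("latin")
    return scripts


def is_code_switched(text):
    """True if the text mixes Han characters and ASCII Latin letters."""
    return {"han", "latin"} <= scripts_in(text)


def code_switch_rate(responses):
    """Fraction of responses flagged as code-switched (0.0 for an empty list)."""
    if not responses:
        return 0.0
    return sum(is_code_switched(r) for r in responses) / len(responses)


print(code_switch_rate(["你好", "这个 model 很好", "hello"]))
```

A check like this overcounts, since Chinese text often contains Latin-script acronyms or product names, so flagged responses would still need manual review.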