Proposed Techniques for Extreme Image Compression Utilizing Latent Feature Guidance and Diffusion Models

Introduction to Extreme Image Compression

Image compression is essential for efficient data transmission and storage, and standards such as JPEG2000 and VVC are widely deployed in practice. At extremely low bitrates, however, these conventional codecs produce visible compression artifacts or overly smooth images. To address this, recent deep learning work uses generative models to improve reconstruction quality at low bitrates.

Methodology

This paper introduces a method for image compression below 0.1 bits per pixel (bpp) that combines a compressive Variational Autoencoder (VAE) with a pre-trained diffusion model steered by external, content-derived guidance. The approach comprises two main components:

- Latent Feature-Guided Compression Module (LFGCM): This module uses a compressive VAE to encode and compress the input image into content variables for subsequent decoding, following the transform coding paradigm for the initial data reduction. External guidance is introduced to better align these content variables with the diffusion space.

- Conditional Diffusion Decoding Module (CDDM): This module decodes the content variables into images using a pre-trained Stable Diffusion model whose weights are kept fixed during training, so that its generative prior can be exploited for reconstruction. Content information is injected through trainable control modules, which refine the output quality.
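The transform coding paradigm mentioned for the LFGCM can be illustrated with a toy sketch. This is not the paper's architecture: the average-pooling `analysis_transform`, the repetition-based `synthesis_transform`, the `step` size, and the zeroth-order entropy estimate are all hypothetical stand-ins for the learned VAE encoder, the diffusion-based decoder, and a real entropy coder. The sketch only shows how a small, coarsely quantized latent leads to a low bpp.

```python
import numpy as np

def analysis_transform(image, factor=8):
    """Hypothetical stand-in for a learned encoder: average-pool by `factor`."""
    h, w = image.shape
    blocks = image.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

def quantize(latent, step=32.0):
    """Uniform scalar quantization of the latent to integer symbols."""
    return np.round(latent / step).astype(np.int64)

def estimate_bits(symbols):
    """Empirical zeroth-order entropy in bits, a proxy for an entropy coder."""
    _, counts = np.unique(symbols, return_counts=True)
    probs = counts / counts.sum()
    return float(-(counts * np.log2(probs)).sum())

def synthesis_transform(symbols, factor=8, step=32.0):
    """Hypothetical stand-in for a decoder: dequantize, then upsample by repetition."""
    latent = symbols.astype(np.float64) * step
    return np.repeat(np.repeat(latent, factor, axis=0), factor, axis=1)

# Random grayscale "image" used only to exercise the pipeline (assumption).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 96)).astype(np.float64)

symbols = quantize(analysis_transform(image))
bpp = estimate_bits(symbols) / image.size  # bits spent per original pixel
reconstruction = synthesis_transform(symbols)

print(f"latent shape: {symbols.shape}, estimated rate: {bpp:.4f} bpp")
```

In this toy setting the reconstruction is blocky and over-smoothed, which is the failure mode a generative decoder is meant to avoid at the same rate.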

Empirical Validation

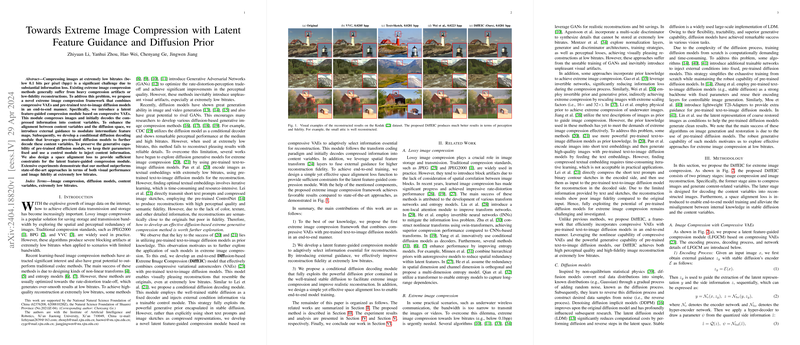

Extensive experiments on the standard Kodak, Tecnick, and CLIC2020 datasets show that the method outperforms existing approaches in preserving perceptual quality and fidelity at extremely low bitrates. The gains appear in perceptual metrics such as LPIPS, FID, and KID, and are most pronounced where the bitrate constraints are stringent.
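Of the metrics named above, KID has a compact definition: an unbiased estimate of the squared maximum mean discrepancy between two feature sets under the cubic polynomial kernel k(x, y) = (x·y/d + 1)^3. The sketch below is a minimal illustration under stated assumptions, not the evaluation code behind the reported results: the random arrays stand in for Inception features of real and reconstructed images, and the subset averaging used in common implementations is omitted.

```python
import numpy as np

def polynomial_kernel(x, y):
    """Cubic polynomial kernel used by KID: (x . y / d + 1) ** 3."""
    d = x.shape[1]
    return (x @ y.T / d + 1.0) ** 3

def kid_estimate(real_feats, fake_feats):
    """Unbiased squared-MMD estimate between two feature sets."""
    m, n = len(real_feats), len(fake_feats)
    k_rr = polynomial_kernel(real_feats, real_feats)
    k_ff = polynomial_kernel(fake_feats, fake_feats)
    k_rf = polynomial_kernel(real_feats, fake_feats)
    # Within-set terms exclude the diagonal (each sample paired with itself).
    term_rr = (k_rr.sum() - np.trace(k_rr)) / (m * (m - 1))
    term_ff = (k_ff.sum() - np.trace(k_ff)) / (n * (n - 1))
    term_rf = k_rf.mean()
    return float(term_rr + term_ff - 2.0 * term_rf)

# Placeholder features (assumption): real evaluations use Inception activations.
rng = np.random.default_rng(0)
real = rng.normal(0.0, 1.0, size=(200, 16))
same = rng.normal(0.0, 1.0, size=(200, 16))     # same distribution as `real`
shifted = rng.normal(0.5, 1.0, size=(200, 16))  # visibly different distribution

kid_same = kid_estimate(real, same)
kid_shifted = kid_estimate(real, shifted)
print(f"KID (same): {kid_same:.4f}, KID (shifted): {kid_shifted:.4f}")
```

Lower values indicate that the two feature distributions are closer; because the estimator is unbiased, values near zero (including slightly negative ones) are expected when the distributions match.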

- Quantitative Performance: The proposed method achieves substantial bitrate savings while maintaining high image quality, improving on both traditional codecs and recent deep learning-based methods.

- Qualitative Assessments: Visual comparisons support the quantitative findings: the proposed method consistently delivers visually pleasing, detailed reconstructions even at bitrates below 0.1 bpp.
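To give a sense of scale for the 0.1 bpp regime, the arithmetic can be worked through for a Kodak-sized image (768×512 pixels). The helper names below are hypothetical and serve only this illustration; the comparison baseline of 24 bpp assumes uncompressed 8-bit RGB.

```python
def bits_per_pixel(num_bytes, width, height):
    """Rate in bits per pixel for a compressed file of `num_bytes` bytes."""
    return 8.0 * num_bytes / (width * height)

def byte_budget(target_bpp, width, height):
    """Largest file size in bytes that meets `target_bpp` for the given image size."""
    return target_bpp * width * height / 8.0

width, height = 768, 512                    # Kodak image resolution
budget_bytes = byte_budget(0.1, width, height)
ratio = 24.0 / 0.1                          # versus uncompressed 8-bit RGB (24 bpp)

print(f"budget at 0.1 bpp: {budget_bytes:.1f} bytes, ratio vs raw RGB: {ratio:.0f}:1")
```

At 0.1 bpp the entire image must fit in roughly 4.9 kB, about a 240:1 reduction relative to raw RGB, which is why the decoder has to synthesize much of the fine detail instead of transmitting it.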

Future Perspectives

Combining learned compression with the generative capacity of diffusion models is a promising direction for image and video codecs. Future studies might explore:

- Further integration with text-to-image capabilities of diffusion models to enhance semantic fidelity.

- Reduction of computational demand and inference time to adapt this methodology for broader, real-time applications.

Conclusion

This research presents a framework for extreme image compression that combines a compressive autoencoder with a diffusion-based decoder guided by latent features. By setting new benchmarks on perceptual and quantitative metrics at ultra-low bitrates, it provides a basis for future work on efficient, high-quality image compression.