Extreme Video Compression Using Pre-trained Diffusion Models

The paper "Extreme Video Compression With Prediction Using Pre-trained Diffusion Models" presents an approach to video compression that leverages diffusion-based generative models at the decoder. The approach targets ultra-low bit-rate video reconstruction with high perceptual quality. The work is motivated by the significant increase in video data transmission, particularly due to emerging technologies like augmented reality, virtual reality, and the metaverse. The proposed method marks a significant shift from conventional video compression methods such as H.264 and H.265, which primarily rely on hand-engineered motion estimation and motion compensation techniques.

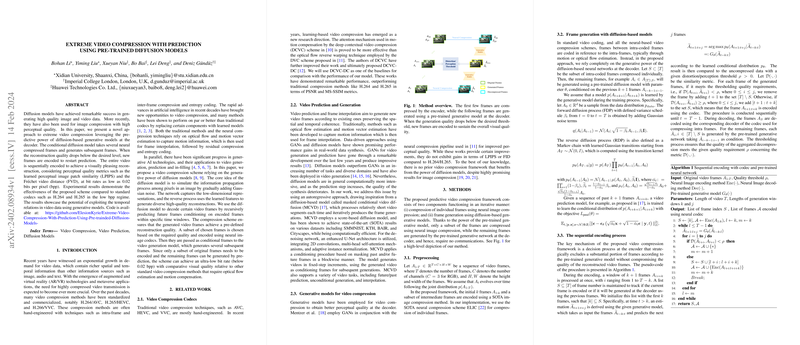

The core strategy uses pre-trained diffusion models, specifically their predictive capabilities, to minimize the bit-rate required for transmitting video data. The encoder compresses only a subset of video frames using neural image compressors, while the remaining frames are predicted at the decoder using a diffusion-based generative model. This allows bit-rates as low as 0.02 bits per pixel (bpp), which is significantly lower than that of traditional codecs.
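The frame-selection idea can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration, not the paper's implementation: `repeat_last_frame` is a toy stand-in for the diffusion-based predictor, `mse` stands in for a perceptual metric, the threshold rule is one plausible way to decide which frames to transmit, and transmitted frames are treated as if they were reconstructed losslessly.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # a flattened grayscale frame with values in [0, 1]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error; a simple stand-in for a perceptual metric."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def repeat_last_frame(history: List[Frame]) -> Frame:
    """Toy predictor (assumption): copies the most recent reconstructed frame.

    In the method described above, this role is played by a pre-trained
    diffusion-based video prediction model.
    """
    return list(history[-1])


def select_frames_to_send(
    frames: List[Frame],
    predict: Callable[[List[Frame]], Frame],
    threshold: float,
) -> List[int]:
    """Return the indices of frames the encoder would compress and transmit.

    The encoder mirrors the decoder: it predicts each frame from what the
    decoder already has, and transmits the real frame only when the
    prediction error exceeds `threshold` (hypothetical rule).
    """
    sent = [0]                   # the first frame is always transmitted
    history = [list(frames[0])]  # decoder-side reconstruction so far
    for i in range(1, len(frames)):
        guess = predict(history)
        if mse(guess, frames[i]) > threshold:
            sent.append(i)
            history.append(list(frames[i]))  # assumes lossless transmission
        else:
            history.append(guess)            # decoder keeps its prediction
    return sent


def average_bpp(bits_per_sent_frame: float, num_sent: int,
                num_frames: int, pixels_per_frame: int) -> float:
    """Average bits per pixel over the whole sequence."""
    return bits_per_sent_frame * num_sent / (num_frames * pixels_per_frame)


if __name__ == "__main__":
    video = [[0.0, 0.0], [0.0, 0.0], [1.0, 1.0], [1.0, 1.0]]
    print(select_frames_to_send(video, repeat_last_frame, threshold=0.1))
```

The sketch also shows why skipping frames lowers the bit-rate: the bits spent on transmitted frames are averaged over every pixel of every frame, including the predicted ones, so the average bpp falls as the share of predicted frames grows.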

The experimental evaluation covers multiple datasets, including Stochastic Moving MNIST (SMMNIST), Cityscapes, and the Ultra Video Group (UVG) dataset. Compared to standard codecs such as H.264 and H.265, the method achieves superior performance on perceptual metrics like LPIPS and FVD, while maintaining comparable results in terms of PSNR. On datasets with significant motion and texture variation in particular, the method outperforms traditional codecs, producing more visually pleasing results despite operating at ultra-low bpp.
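Of the metrics mentioned above, PSNR is the only one with a simple closed form, PSNR = 10 · log10(MAX² / MSE); LPIPS and FVD both depend on learned feature extractors and are not reproduced here. The following is a minimal sketch of the standard PSNR definition, assuming frames are flattened lists of pixel values in [0, `max_value`]; the function name and signature are illustrative.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], reconstruction: Sequence[float],
         max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized frames."""
    if len(reference) != len(reconstruction):
        raise ValueError("frames must have the same number of pixels")
    err = sum((r - x) ** 2 for r, x in zip(reference, reconstruction))
    err /= len(reference)
    if err == 0:
        return math.inf  # identical frames: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / err)


if __name__ == "__main__":
    print(psnr([0.0, 1.0], [0.0, 0.9]))
```

Because PSNR measures pixel-wise fidelity, a reconstruction can look more natural to a viewer and still score no better on PSNR, which is consistent with the pattern of results described above.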

From a theoretical standpoint, this approach underscores the potential of generative AI in optimizing data transmission by exploiting spatial and temporal video coherence without explicit motion estimation. Practically, this methodology can substantially reduce the bandwidth requirements for video streaming and broadcasting, making it highly relevant for applications involving immersive media technologies. One limitation, however, is the increased computational demand at the encoding stage due to the additional video prediction processing. Future work could explore more efficient prediction models to offset this complexity.

In conclusion, the paper shows how pre-trained diffusion models can be applied to video compression, in line with broader trends in AI-driven methods for multimedia processing. There is considerable room for further advances in this domain, with implications for improved efficiency in broadcasting, streaming services, and storage solutions. Exploring alternative or improved generative architectures could further improve performance and help the method scale and adapt to a range of practical scenarios.