Exploring Localization in Multimodal LLMs through Groma: Localized Visual Tokenization for Enhanced Grounded Understanding

Introduction

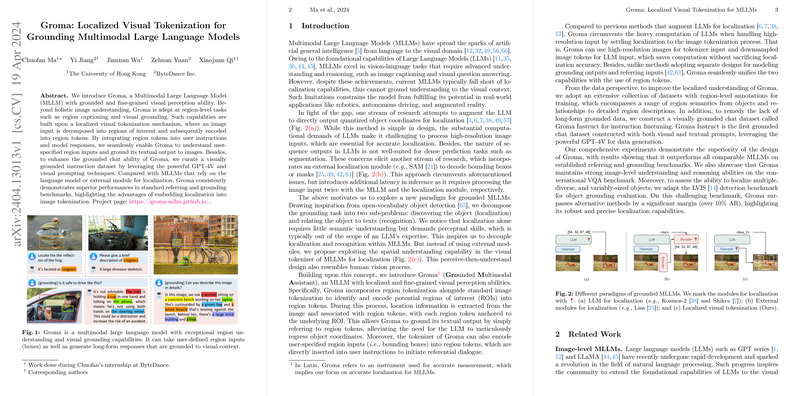

Recent advances in multimodal LLMs (MLLMs) have significantly broadened their applicability across AI domains by combining visual perception with language modeling. Despite this progress, a central challenge remains: precisely localizing and grounding textual content to specific image regions. To address this, Ma et al. introduce Groma, an MLLM that uses localized visual tokenization to improve grounding in multimodal contexts.

Model Architecture

Groma consists of four key components that together integrate the visual and textual modalities:

- Image Encoder: Utilizing DINOv2, the model processes high-resolution images, subsequently downsampling the visual tokens for efficient processing without compromising localization accuracy.

- Region Proposer: A Deformable DETR transformer is employed to generate region proposals efficiently, ensuring robust detection across a range of objects and scenes.

- Region Encoder: This module encodes the region proposals into region tokens, which serve as direct anchors for textual descriptions within the image and thereby enable precise grounding.

- LLM: Leveraging Vicuna-7B, Groma integrates visual tokens into textual responses, enabling comprehensive and context-aware multimodal understanding.
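The data flow between these components can be illustrated with a toy sketch. It is not Groma's implementation: the function names, the average-pooling used for both downsampling and region encoding, and the `<image>` and `<rN>` placeholder strings are all assumptions made for illustration, and plain Python lists stand in for the real encoder features.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x0, y0, x1, y1) on the patch grid, end-exclusive. Hypothetical convention.
Box = Tuple[int, int, int, int]
Grid = List[List[List[float]]]  # rows x cols x feature_dim


@dataclass
class RegionToken:
    index: int            # position of the region in the proposal list
    box: Box              # where the region sits on the patch grid
    feature: List[float]  # pooled feature standing in for the region embedding


def downsample_tokens(grid: Grid, factor: int = 2) -> Grid:
    """Merge each factor x factor block of patch features by averaging (assumed scheme)."""
    h, w, d = len(grid), len(grid[0]), len(grid[0][0])
    out = []
    for r in range(0, h - h % factor, factor):
        row = []
        for c in range(0, w - w % factor, factor):
            cell = [grid[r + i][c + j] for i in range(factor) for j in range(factor)]
            row.append([sum(v[k] for v in cell) / len(cell) for k in range(d)])
        out.append(row)
    return out


def encode_regions(grid: Grid, boxes: List[Box]) -> List[RegionToken]:
    """Average the patch features inside each box (a crude stand-in for ROI pooling)."""
    d = len(grid[0][0])
    tokens = []
    for idx, (x0, y0, x1, y1) in enumerate(boxes):
        cells = [grid[r][c] for r in range(y0, y1) for c in range(x0, x1)]
        pooled = [sum(v[k] for v in cells) / len(cells) for k in range(d)]
        tokens.append(RegionToken(idx, (x0, y0, x1, y1), pooled))
    return tokens


def build_prompt(question: str, regions: List[RegionToken]) -> str:
    """Place one placeholder token per region ahead of the text the LLM will read."""
    region_str = " ".join(f"<r{t.index}>" for t in regions)
    return f"<image> {region_str} {question}"


# Toy 4x4 patch grid with 1-dimensional features, plus two made-up proposals.
grid = [[[float(r * 4 + c)] for c in range(4)] for r in range(4)]
boxes = [(0, 0, 2, 2), (2, 2, 4, 4)]

coarse = downsample_tokens(grid)       # fewer image tokens for the LLM
regions = encode_regions(grid, boxes)  # one token per proposed region
prompt = build_prompt("What is in the top-left region?", regions)
print(prompt)
```

The point of the sketch is the separation of concerns: the language model only sees a coarse image representation plus one token per region, so it can refer to a region by its token rather than by regressing coordinates.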

Empirical Evaluation

Groma showed markedly stronger performance on localization tasks, especially when evaluated on referring-expression benchmarks such as RefCOCO, RefCOCO+, and RefCOCOg. The model not only generated contextually and spatially accurate textual descriptions but also demonstrated robust capabilities on complex datasets like LVIS, which tests object recognition and localization across diverse and cluttered scenes.
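The summary does not state the metric, but referring-expression benchmarks of this kind are conventionally scored by counting a predicted box as correct when its intersection over union (IoU) with the ground-truth box is at least 0.5. A minimal sketch of that convention follows, assuming boxes in `(x0, y0, x1, y1)` format; the function names and sample boxes are illustrative only.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), assumed corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def accuracy_at_iou(preds: List[Box], targets: List[Box], threshold: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the paired target meets the threshold."""
    if not preds:
        return 0.0
    hits = sum(iou(p, t) >= threshold for p, t in zip(preds, targets))
    return hits / len(preds)


# Made-up boxes: the first prediction is exact, the second overlaps only slightly.
preds = [(0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 10.0, 10.0)]
targets = [(0.0, 0.0, 10.0, 10.0), (5.0, 5.0, 15.0, 15.0)]
score = accuracy_at_iou(preds, targets)
print(score)
```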

Training Strategy

The authors propose a three-stage training strategy:

- Detection Pretraining: Focuses on leveraging extensive detection datasets to refine the capabilities of the region proposer.

- Alignment Pretraining: Aligns the vision components with the LLM using a mixture of image-caption, grounded caption, and region caption datasets.

- Instruction Finetuning: Utilizes high-quality datasets and instruction-based tasks to enhance the model's performance in instruction-following contexts.
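The staged schedule above can be written down as a small ordered configuration. This is only a sketch of the ordering and data mixture described here: the `Stage` class, the `run_curriculum` helper, and the string labels are hypothetical, and details such as which modules are frozen in each stage are deliberately left out because this summary does not specify them.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Stage:
    name: str
    goal: str
    data_sources: List[str]  # descriptive labels, not real dataset identifiers


STAGES = [
    Stage("detection_pretraining", "refine the region proposer",
          ["detection datasets"]),
    Stage("alignment_pretraining", "align vision and language",
          ["image-caption", "grounded caption", "region caption"]),
    Stage("instruction_finetuning", "improve instruction following",
          ["high-quality instruction data"]),
]


def run_curriculum(stages: List[Stage], train_stage: Callable[[Stage], None]) -> List[str]:
    """Run the stages strictly in order and return the names of completed stages."""
    completed = []
    for stage in stages:
        train_stage(stage)  # caller supplies the actual training routine
        completed.append(stage.name)
    return completed


# A stand-in training routine that only records what it was asked to do.
log: List[str] = []
completed = run_curriculum(
    STAGES, lambda s: log.append(f"{s.name}: {len(s.data_sources)} source(s)")
)
print(log)
```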

Implications and Future Work

Groma extends what MLLMs can achieve in region-specific understanding and grounding. It shows how localized visual tokenization can support more nuanced interactions between visual and textual data, leading to better performance in applications that require detailed visual understanding.

Furthermore, the methodological innovations in Groma provide a pathway for future research, potentially leading to more sophisticated architectures that could seamlessly integrate even more diverse modalities beyond vision and text, such as audio or sensory data. The training strategies outlined could also serve as a blueprint for developing more robust and context-aware AI systems, particularly useful in domains like autonomous driving, robotic navigation, and interactive educational technologies.

Conclusion

Groma represents a significant step forward in the development of MLLMs with fine-grained visual perception abilities. By embedding localization directly into the tokenization process, Groma improves both the model's efficiency and its efficacy in understanding and interacting with the visual world in a contextually relevant manner. The strides made in this research point to promising directions for future advances in AI and encourage further exploration of the capabilities of multimodal systems.