Overview of Kosmos-2: Grounding Multimodal LLMs to the World

The paper introduces Kosmos-2, a Multimodal Large Language Model (MLLM) that adds grounding capabilities: it can tie spans of text to specific regions of an image. Kosmos-2 stands out by integrating object and region recognition directly into the language model, so that text and visual data can refer to each other within the same input.

Key Contributions

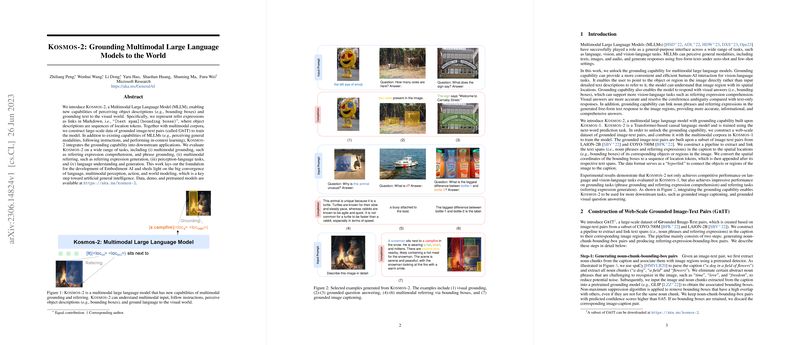

The authors give Kosmos-2 grounding and referring capabilities by representing referring expressions as Markdown-style links that connect text spans to bounding boxes in the image. To train this behavior, they construct GrIT, a dataset of grounded image-text pairs built from multimodal corpora.

Methodology

Kosmos-2 builds on previous MLLMs, advancing their ability to associate text directly with specific visual elements:

- Grounded Image-Text Pair Creation: Using sources like LAION-2B and COYO-700M, the authors created a large-scale dataset, GrIT, by identifying and linking noun phrases in captions to image regions.

- Model Architecture: Kosmos-2 uses a Transformer-based architecture, trained on both conventional text inputs and grounded image-text pairs with a next-token prediction objective.

- Input Representation: Each bounding box is encoded as a sequence of discrete tokens representing its coordinates, and these tokens are attached to the corresponding text span in the input using a Markdown-style hyperlink format.
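
The input representation can be illustrated with a minimal sketch. It assumes the image is divided into a square grid and that a box is described by two location tokens, one for the grid cell containing its top-left corner and one for the cell containing its bottom-right corner. The grid size (`bins=32`), the `<loc_i>` token spelling, and all function names are illustrative assumptions, not details taken from the summary above.

```python
from typing import Tuple

# A box is (x0, y0, x1, y1) with coordinates normalized to [0, 1].
Box = Tuple[float, float, float, float]


def point_to_bin(x: float, y: float, bins: int) -> int:
    """Return the row-major index of the grid cell containing a normalized point."""
    col = min(int(x * bins), bins - 1)  # clamp so x == 1.0 falls in the last column
    row = min(int(y * bins), bins - 1)  # clamp so y == 1.0 falls in the last row
    return row * bins + col


def box_to_tokens(box: Box, bins: int = 32) -> str:
    """Encode a box as two hypothetical location tokens (top-left, bottom-right)."""
    x0, y0, x1, y1 = box
    top_left = point_to_bin(x0, y0, bins)
    bottom_right = point_to_bin(x1, y1, bins)
    return f"<loc_{top_left}><loc_{bottom_right}>"


def ground_span(span: str, box: Box, bins: int = 32) -> str:
    """Attach a box to a text span using a Markdown-style link: [span](tokens)."""
    return f"[{span}]({box_to_tokens(box, bins)})"


def bin_to_center(index: int, bins: int = 32) -> Tuple[float, float]:
    """Decode a grid-cell index back to the normalized (x, y) center of that cell."""
    row, col = divmod(index, bins)
    return ((col + 0.5) / bins, (row + 0.5) / bins)
```

Under these assumptions, `ground_span("a dog", (0.0, 0.0, 1.0, 1.0))` yields `[a dog](<loc_0><loc_1023>)`. Discretizing coordinates this way lets boxes share the same token stream as ordinary words, at the cost of a localization error bounded by the grid cell size.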

Evaluation

Kosmos-2 was evaluated across a broad spectrum of tasks to demonstrate its enhanced grounding capabilities:

- Multimodal Grounding: Phrase grounding and referring expression comprehension tasks were used to quantify the model's proficiency in linking text to specific visual regions.

- Multimodal Referring: The model was also assessed on its ability to generate descriptive text based on specified image regions, showcasing its capability to interpret visual information.

- Perception-Language Tasks: These included standard image captioning and visual question answering, where the model's results were competitive with existing models.

- Language Tasks: Evaluations confirmed that Kosmos-2 maintains its performance on traditional language tasks while gaining the new grounding capabilities.

Numerical Performance

On the phrase grounding task with the Flickr30k Entities dataset, Kosmos-2 achieved R@1 scores competitive with traditional models and notably surpassed models that require fine-tuning. Its zero-shot performance on referring tasks was also strong, particularly on datasets like RefCOCOg.
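
For readers unfamiliar with the metric, the following is a minimal sketch of how R@1 is commonly computed for grounding tasks: the model's single top prediction for a phrase counts as a hit when its intersection-over-union (IoU) with the ground-truth box reaches a threshold. The 0.5 threshold is the conventional choice and is an assumption here, as are the function names; this is not the paper's evaluation code.

```python
from typing import Sequence, Tuple

# A box is (x0, y0, x1, y1) with x0 <= x1 and y0 <= y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall_at_1(
    predictions: Sequence[Box],
    targets: Sequence[Box],
    threshold: float = 0.5,  # assumed conventional IoU threshold
) -> float:
    """Fraction of phrases whose top predicted box matches the ground truth."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not predictions:
        return 0.0
    hits = sum(iou(p, t) >= threshold for p, t in zip(predictions, targets))
    return hits / len(predictions)
```

In this sketch each phrase has exactly one ground-truth box; datasets with several boxes per phrase need an additional matching rule that is not shown here.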

Implications and Future Directions

Grounding capabilities are a significant step towards AI systems that can handle complex multimodal tasks. Potential applications include interactive AI systems, enhanced image captioning, and refined visual question answering. Future directions include improving the model's understanding of varied human expressions and extending it to more diverse and nuanced multimodal datasets.

Kosmos-2 presents an innovative approach to connecting language and visual perception, laying the groundwork for future progress towards artificial general intelligence in which LLMs can seamlessly integrate and interact with the visual world.