Overview of Recent AI Agent Implementations and Architectures

Introduction

Recent AI agent implementations have expanded what AI systems can do, particularly in reasoning, planning, and tool execution. This paper reviews both single-agent and multi-agent architectures, discussing their design choices, their capabilities, and their effect on achieving complex objectives.

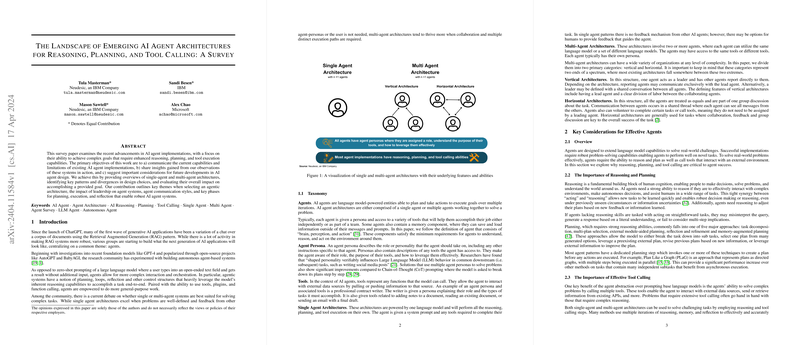

Single-Agent vs Multi-Agent Systems

The research highlights core differences between single-agent and multi-agent systems, their suitability for various tasks, and the influence of architectural choices on goal accomplishment.

Single-Agent Architectures

Single-agent architectures handle tasks independently and suit problems where interaction with other agents or personas is unnecessary. They work well when tasks are well defined and feedback from other agents is not crucial. Although simple and efficient in these settings, single-agent systems can struggle to adapt to new, poorly defined conditions, and without external input they may get stuck repeating the same ineffective actions.
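
The looping failure mode described above can be illustrated with a minimal sketch. It is not taken from the paper: the function name `run_single_agent`, the `step` callback, the `"done"` sentinel, and the `window` parameter are all hypothetical, and the stall test (the same action repeated `window` times in a row) is only one simple heuristic among many.

```python
from collections import deque
from typing import Callable, List, Tuple


def run_single_agent(
    step: Callable[[int], str], max_steps: int = 20, window: int = 3
) -> Tuple[List[str], str]:
    """Run one agent until it finishes, stalls, or exhausts its step budget.

    `step` is a hypothetical callback returning the agent's next action.
    """
    recent = deque(maxlen=window)  # sliding window of the latest actions
    history: List[str] = []
    for i in range(max_steps):
        action = step(i)
        history.append(action)
        if action == "done":  # assumed sentinel for task completion
            return history, "finished"
        recent.append(action)
        # Stall heuristic: the window is full and holds one repeated action.
        if len(recent) == window and len(set(recent)) == 1:
            return history, "stalled"
    return history, "budget_exhausted"
```

With no other agent to interrupt it, the loop relies on the caller to react to a `"stalled"` status, for example by re-planning or asking a human for input.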

Multi-Agent Architectures

In contrast, multi-agent architectures thrive in complex environments where tasks require collaboration and diverse input. Interaction among multiple agents, each contributing its own perspective, tends to produce more robust and adaptable solutions. However, coordinating multiple agents introduces its own challenges, such as keeping communication efficient and preventing conflicting or redundant work.

Impact of Design Choices

The paper discusses several design elements that influence the effectiveness of AI agents. These include the role of leadership within a system, the methods agents use to communicate, and operational phases such as planning, execution, and reflection.

Leadership and System Design

Leadership within multi-agent systems is crucial for coordinating efforts and maintaining a clear task structure among agents. Systems with a defined leadership hierarchy tend to perform better by reducing operational redundancy and focusing the group's efforts.
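
As an illustration of how a leader can reduce redundant work, the sketch below has a single leader own the task-to-worker mapping. The `Leader` class, its fields, and the round-robin policy are assumptions made for this example and are not described in the paper.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Leader:
    """Hypothetical leader agent that owns the task-to-worker mapping."""

    workers: List[str]
    assignments: Dict[str, str] = field(default_factory=dict)  # task -> worker

    def assign(self, task: str) -> str:
        """Give each distinct task to exactly one worker."""
        if task in self.assignments:
            # The task already has an owner, so no second worker picks it up.
            return self.assignments[task]
        # Round-robin over workers, based on how many tasks are assigned so far.
        worker = self.workers[len(self.assignments) % len(self.workers)]
        self.assignments[task] = worker
        return worker
```

Because every assignment passes through one place, a repeated request for the same task returns the existing owner instead of creating duplicate work.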

Communication Strategies

Agent communication styles, whether hierarchical (vertical) or egalitarian (horizontal), significantly affect how the system operates. Vertical communication simplifies command structures and reduces the potential for conflict, while horizontal communication may encourage innovation and adaptability by letting information flow freely among agents.
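
The difference between the two styles can be sketched as a message-routing rule. The function names and the agent identifiers below are hypothetical, and real systems may mix both patterns.

```python
from typing import List


def vertical_recipients(sender: str, leader: str, agents: List[str]) -> List[str]:
    """Hierarchical routing: workers report to the leader only,
    and the leader addresses all workers."""
    if sender == leader:
        return [a for a in agents if a != leader]
    return [leader]


def horizontal_recipients(sender: str, agents: List[str]) -> List[str]:
    """Egalitarian routing: every other agent receives the message."""
    return [a for a in agents if a != sender]
```

Under these assumptions, a worker's message reaches one recipient in the vertical scheme and every other agent in the horizontal scheme, which shows the trade-off between a simpler command structure and freer information flow.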

Operational Phases

The phases of planning, execution, and reflection are critical in all AI agent architectures. Robust systems tend to feature well-defined phases that guide the agents through task completion, allowing for adjustments based on feedback and reflective observations.
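
A minimal sketch of the three phases as a control loop follows. The callbacks `plan`, `execute`, and `reflect`, their signatures, and the `max_rounds` limit are assumptions chosen for illustration; the paper does not prescribe a specific interface.

```python
from typing import Any, Callable, List, Optional, Tuple


def plan_execute_reflect(
    goal: Any,
    plan: Callable[[Any, Optional[Any]], List[Any]],
    execute: Callable[[Any], Any],
    reflect: Callable[[Any, List[Any]], Tuple[bool, Optional[Any]]],
    max_rounds: int = 3,
) -> Tuple[List[Any], int, bool]:
    """Repeat plan -> execute -> reflect until reflection accepts the results.

    Returns (results of the last round, rounds used, whether the goal was met).
    """
    feedback: Optional[Any] = None
    results: List[Any] = []
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        steps = plan(goal, feedback)              # planning phase
        results = [execute(s) for s in steps]     # execution phase
        done, feedback = reflect(goal, results)   # reflection phase
        if done:
            return results, rounds, True
    return results, rounds, False


# Toy usage: the goal is a target sum, and reflection asks for one more step.
def toy_plan(goal, feedback):
    n = 2 if feedback is None else feedback
    return list(range(1, n + 1))


def toy_reflect(goal, results):
    if sum(results) >= goal:
        return True, None
    return False, len(results) + 1


outcome = plan_execute_reflect(6, toy_plan, lambda s: s, toy_reflect)
```

In the toy run, the first plan falls short of the target, reflection feeds that back, and the second plan succeeds. This is the adjustment-from-feedback behavior described above.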

Future Directions in AI Agent Research

Looking forward, the paper suggests several avenues for enhancing AI agent architectures:

- Enhanced Agent Reasoning: Improving the reasoning capabilities of agents to handle more complex, multi-faceted problems can lead to broader applicability in real-world scenarios.

- Dynamic Agent Configurations: Systems that can adjust their agent configurations dynamically in response to task requirements or environmental changes could be more efficient and adaptable.

- Robust Multi-Agent Coordination: Developing more sophisticated methods for agent coordination and communication can prevent inefficiencies and improve outcomes in systems where multiple agents interact.
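
One way to picture dynamic agent configuration is to choose a team of roles from the skills a task requires. The sketch below uses a greedy set-cover heuristic. The role names, the skill labels, and the `configure_team` function are hypothetical and serve only to illustrate the idea.

```python
from typing import Dict, List, Set

# Hypothetical catalogue of agent roles and the skills each one provides.
ROLE_SKILLS: Dict[str, Set[str]] = {
    "researcher": {"search"},
    "coder": {"code"},
    "reviewer": {"review", "code"},
}


def configure_team(required: Set[str], role_skills: Dict[str, Set[str]]) -> List[str]:
    """Greedy set cover: repeatedly add the role that covers the most
    still-uncovered skills (ties go to the earliest role in the catalogue)."""
    remaining = set(required)
    team: List[str] = []
    while remaining:
        best = max(role_skills, key=lambda r: len(role_skills[r] & remaining))
        gained = role_skills[best] & remaining
        if not gained:
            raise ValueError(f"no role provides: {sorted(remaining)}")
        team.append(best)
        remaining -= gained
    return team
```

A task needing only `code` and `review` yields a one-agent team, while a task needing `search` and `code` yields two agents, so the configuration follows the task rather than being fixed in advance.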

Conclusion

AI agent architectures have evolved significantly, presenting new opportunities to tackle complex and dynamic problems. Both single-agent and multi-agent systems have their merits and are preferable under different circumstances. The continued development and refinement of these systems will likely focus on enhancing adaptability, reasoning capabilities, and the efficiency of system-wide coordination. Further research in these areas will pave the way for more capable, versatile AI agents, potentially transforming how tasks are approached and executed across various domains.