Evaluating the Impact of LLMs on Programmer Productivity through RealHumanEval

Introduction

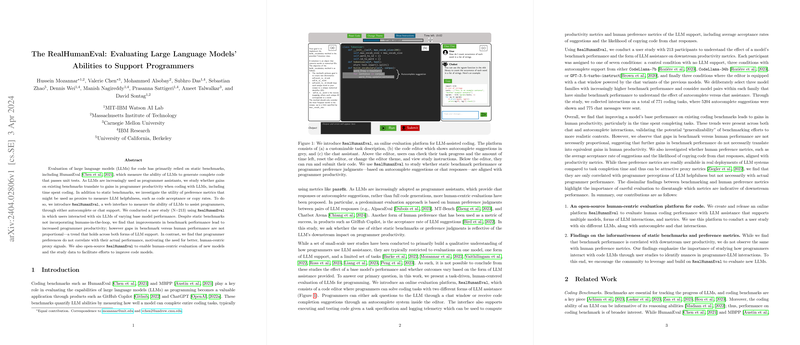

Recent advances in large language models (LLMs) have led to their growing adoption as programming aids, from autocomplete to answering questions through chat interfaces. Static benchmarks have been instrumental in gauging how well these models generate syntactically correct and logically sound code, but there is growing interest in whether those capabilities translate into real-world productivity gains for programmers. This paper introduces RealHumanEval, a framework for evaluating how much LLMs improve programmer productivity using a human-centered approach.

The RealHumanEval Framework

RealHumanEval provides a platform on which programmers interact with LLMs in two primary modes: autocomplete and chat-based assistance. The framework measures performance metrics such as task completion time and the acceptance rate of model suggestions, offering insight into the practical utility of LLMs for programming. It also makes it possible to assess whether programmers' preferences for specific LLM interventions correlate with actual improvements in their performance.
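To make the two headline metrics concrete, here is a minimal sketch of how completion time and suggestion acceptance rate could be derived from an interaction log. The `Event` record, its `kind` values, and the `task_metrics` helper are hypothetical; RealHumanEval's actual telemetry schema is not described here and may differ.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class Event:
    """Hypothetical telemetry record; RealHumanEval's real log schema may differ."""
    task_id: str
    kind: str  # "task_start", "task_end", "suggestion_shown", or "suggestion_accepted"
    timestamp: float  # seconds since the session began


@dataclass
class _TaskTally:
    start: Optional[float] = None
    end: Optional[float] = None
    shown: int = 0
    accepted: int = 0


def task_metrics(events: Iterable[Event]) -> Dict[str, Dict[str, Optional[float]]]:
    """Per task: completion time (end - start) and accepted / shown suggestions."""
    tallies: Dict[str, _TaskTally] = {}
    for e in events:
        t = tallies.setdefault(e.task_id, _TaskTally())
        if e.kind == "task_start":
            t.start = e.timestamp
        elif e.kind == "task_end":
            t.end = e.timestamp
        elif e.kind == "suggestion_shown":
            t.shown += 1
        elif e.kind == "suggestion_accepted":
            t.accepted += 1
    metrics = {}
    for task_id, t in tallies.items():
        done = t.start is not None and t.end is not None
        metrics[task_id] = {
            # None when the task was never finished (or never started).
            "completion_time_s": t.end - t.start if done else None,
            # None when no suggestions were shown, e.g. in a no-LLM condition.
            "acceptance_rate": t.accepted / t.shown if t.shown else None,
        }
    return metrics


log = [
    Event("t1", "task_start", 0.0),
    Event("t1", "suggestion_shown", 12.0),
    Event("t1", "suggestion_accepted", 13.5),
    Event("t1", "suggestion_shown", 40.0),
    Event("t1", "task_end", 95.0),
]
print(task_metrics(log))
# {'t1': {'completion_time_s': 95.0, 'acceptance_rate': 0.5}}
```

Returning `None` rather than 0 for missing values keeps unfinished tasks and conditions without suggestions from silently dragging averages down.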

User Study Methodology

A user study with 213 participants demonstrates the utility of RealHumanEval for examining how different LLMs affect programmer productivity. Participants were divided into groups receiving no LLM support, autocomplete support, or chat-based support, with assistance coming from one of six LLMs of varying performance on static benchmarks. This design allows a nuanced analysis of how the type of LLM assistance, benchmark performance, and programmer preferences each relate to productivity on real-world programming tasks.
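The summary does not say how participants were allocated to groups. A common choice for this kind of between-subjects design is balanced random assignment, sketched below under that assumption. The `assign_conditions` helper and the condition labels are hypothetical placeholders, not the study's actual condition list.

```python
import random
from collections import Counter


def assign_conditions(participant_ids, conditions, seed=None):
    """Balanced random assignment: shuffle participants, then deal them
    round-robin so group sizes differ by at most one."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(shuffled)}


# Placeholder condition labels; the study's actual conditions are not listed here.
conditions = ["no_llm", "autocomplete:model_a", "chat:model_b"]
assignment = assign_conditions(range(213), conditions, seed=42)
print(Counter(assignment.values()))  # each condition receives 71 participants
```

Shuffling first and then dealing round-robin keeps group sizes balanced while leaving which participant lands in which group to chance; a fixed `seed` makes the allocation reproducible.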

Key Findings

- Benchmark Performance and Productivity: The paper reports a positive correlation between an LLM's performance on static benchmarks and its ability to enhance programmer productivity, particularly in reducing the time spent on coding tasks. However, the relationship is not necessarily linear: further improvements in benchmark performance appear to yield diminishing productivity gains.

- Programmer Preferences: Contrary to expectations, the paper finds no significant correlation between measures of programmer preference (e.g., acceptance rates of autocomplete suggestions) and actual productivity metrics such as task completion time.

- Impact of LLM Assistance Type: Both autocomplete and chat-based support improved productivity compared to no LLM support, but programmers perceived their utility differently. Notably, chat-based assistance received higher helpfulness ratings from participants, even though autocomplete support produced similar productivity gains.

- Task Type Sensitivity: The paper also shows that the effectiveness of LLM assistance varies across types of programming tasks, with data manipulation tasks benefiting more from LLM support than algorithmic problem-solving tasks.
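Correlation findings like those above are often quantified with a rank correlation such as Spearman's rho, which captures a monotonic relationship without assuming it is linear. The summary does not state which statistic the authors used, so the following is only a minimal standard-library sketch of that technique; the helper names and the input numbers are illustrative assumptions.

```python
import math
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    denom = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if denom == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sum(a * b for a, b in zip(dx, dy)) / denom


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the rank-transformed data."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return pearson(average_ranks(x), average_ranks(y))


# Made-up numbers for illustration only, NOT data from the study:
# benchmark pass rate per model vs. mean task completion time in minutes.
benchmark = [0.30, 0.45, 0.60, 0.75]
minutes = [14.0, 11.5, 10.0, 9.8]
print(spearman(benchmark, minutes))  # -1.0
```

Because rho depends only on ranks, the toy series scores a perfect -1.0 even though each benchmark gain saves less time than the previous one, which is how a strong correlation can coexist with diminishing returns. The same function could be applied to acceptance rate versus completion time when probing the preference finding.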

Implications and Future Directions

The findings underscore the importance of evaluating LLMs for programming support with human-centric measures and direct productivity metrics, not just static benchmark performance. Because RealHumanEval is open source, it can facilitate further research in this direction, including the evaluation of new models and interaction paradigms. Future work could focus on improving LLMs' understanding of context, personalizing the timing and nature of interventions, and developing better mechanisms for integrating LLM assistance into programming workflows.

Conclusion

Through the development and deployment of RealHumanEval, this paper provides valuable insights into the complex dynamics between LLM benchmark performance, programmer preferences, and real-world productivity. As LLMs continue to evolve, frameworks like RealHumanEval will play a critical role in guiding their development towards maximizing tangible benefits for programmers.