Deciphering the Multilingual Capabilities of Decoder-Based Pre-trained LLMs Through Language-Specific Neurons

Introduction

In the exploration of multilingual abilities within pre-trained LLMs (PLMs), particularly those that are decoder-based, a novel paper probes the internal mechanics of these models in handling multiple languages. This research dissects the presence and functionality of language-specific neurons within decoder-only PLMs, focusing on the distribution and impact of these neurons across a range of languages and model architectures. Unlike prior studies that mainly centered on language-universal neurons and encoder-based PLMs, this work explores the critical analysis of how specific neurons cater to individual languages, therefore elaborating on the intricate neuronal activity that facilitates multilingual text generation.

Methodology

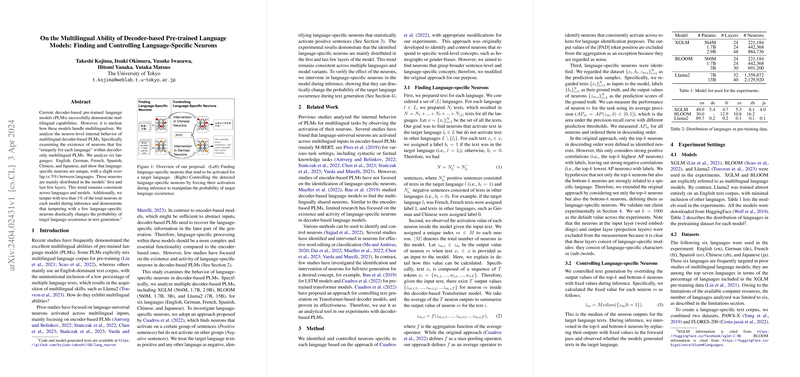

The paper adopts a refined approach originally proposed by \citet{cuadros2022self} for identifying neurons that respond significantly to inputs of a particular language. Identifying language-specific neurons involved two main phases: preparation of language-specific text corpuses for six languages (English, German, French, Spanish, Chinese, and Japanese) and observation of neurons' activation patterns in response to these texts. Neurons that showed a high activation for texts in a target language (positive sentences) and low activation for other languages (negative sentences) were flagged as language-specific. This neuron identification process was a critical step toward understanding how decoder-based PLMs process multilingual input.

Results

Analyzing various decoder-based PLMs, including XGLM, BLOOM, and Llama2 models, for the specified languages yielded consistent patterns. Language-specific neurons predominantly reside in the models' first and last few layers, with a minimal overlap of less than 5% between languages. The research highlights an intriguing uniformity across multiple languages and model variants in the distribution of these critical neurons.

Furthermore, tampering with these neurons during inference demonstrated a significant shift in the generated text's language, validating the substantial role of language-specific neurons in directing the model's output language. These interventions could successfully manipulate the language of the generated text, showcasing a tangible application of the findings.

Implications and Future Directions

The implications of this research are multifold, touching on theoretical and practical aspects of working with multilingual PLMs. Theoretically, it provides a novel lens to view the internal workings of decoder-based PLMs, enriching our understanding of multilingual text generation. Practically, identifying and manipulating language-specific neurons paves the way for enhanced control over LLMs, particularly in tasks requiring precise language output.

The avenue for future research is vast, with possibilities ranging from the development of language-specific model compression techniques to innovative fine-tuning approaches that could enhance models' performance on languages not included in their training data. Moreover, extending this analysis to a broader range of languages and model architectures, including encoder-decoder frameworks, could further deepen our understanding of PLMs' multilingual capabilities.

Conclusion

This paper marks a significant step in unraveling the complexities of multilingual text generation within decoder-based PLMs, spotlighting the underexplored terrain of language-specific neurons. By methodically identifying and demonstrating the functionality of these neurons across different languages and model variants, the research offers valuable insights and opens new pathways for leveraging the multilingual capacities of PLMs more effectively.