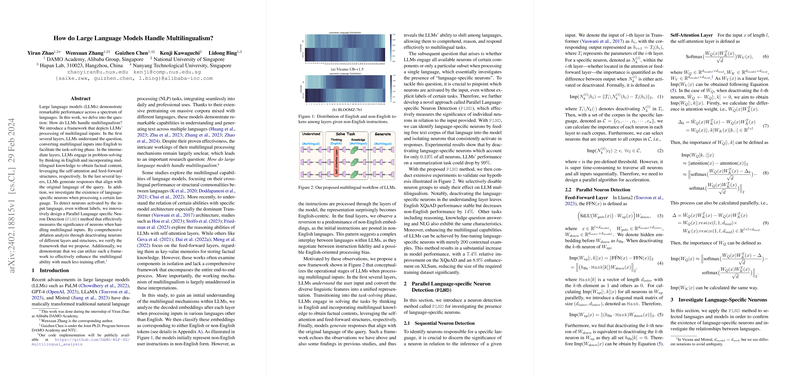

LLMs handle multilingualism through a structured process that uses different layers of their architecture to understand, process, and generate text in multiple languages. "How do LLMs Handle Multilingualism?" (Zhao et al., 29 Feb 2024) describes this process as a multilingual workflow (MWork). The paper validates the framework using Parallel Language-specific Neuron Detection (PLND).

Multilingual Workflow (MWork) in LLMs

The MWork framework posits that LLMs manage multilingual inputs via three distinct stages, each localized to specific layers within the model:

- Understanding (Initial Layers): The initial layers convert multilingual inputs into a unified representation, effectively translating them into English for subsequent processing. This gives task-solving a common ground regardless of the input language.

- Task-Solving (Intermediate Layers): The intermediate layers operate primarily in English, using both self-attention and feed-forward networks. Self-attention carries out reasoning, while the feed-forward networks integrate multilingual knowledge to enrich the factual content. This is the stage where the LLM "thinks" and derives solutions.

- Response Generation (Final Layers): The final layers generate responses in the original language of the query. This involves translating the English-centric thought process back into the user's language, ensuring coherent and contextually relevant outputs.
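One way to probe the claim that intermediate layers "think" in English is to decode each layer's hidden states into vocabulary tokens (a logit-lens-style readout). You can then count how many of the decoded tokens are English. The sketch below makes two assumptions. First, the per-layer decoded tokens are already available. Second, a token counts as English if all of its letters are ASCII Latin letters. That rule is a crude heuristic, not the paper's classifier, and the example tokens are made up.

```python
def is_english_token(token: str) -> bool:
    """Heuristic (an assumption): a token counts as English if it has at
    least one letter and every letter is an ASCII Latin letter."""
    letters = [ch for ch in token if ch.isalpha()]
    return bool(letters) and all(ch.isascii() for ch in letters)


def english_ratio_per_layer(decoded: list[list[str]]) -> list[float]:
    """decoded[l] holds the tokens decoded from layer l's hidden states.
    Returns, per layer, the fraction of decoded tokens judged English."""
    ratios = []
    for tokens in decoded:
        if not tokens:
            ratios.append(0.0)
            continue
        ratios.append(sum(is_english_token(t) for t in tokens) / len(tokens))
    return ratios


# Toy, made-up readout for a Chinese query across four layers.
decoded = [["北京", "是"], ["capital", "北京"], ["the", "capital"], ["首都", "是"]]
print(english_ratio_per_layer(decoded))  # → [0.0, 0.5, 1.0, 0.0]
```

Under MWork, you would expect the ratio to rise in the middle layers and fall again near the output. The toy data is shaped to show that pattern; it is not a measurement.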

Parallel Language-specific Neuron Detection (PLND)

To empirically validate the MWork framework, the paper employs PLND. This is a novel method for identifying individual neurons and quantifying their importance with respect to the input language, without relying on explicit task labels. PLND feeds a free-text corpus in a specific language into the model and isolates the neurons that are consistently activated.
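"Consistently activated" can be made concrete with a selection rule over per-token importance scores collected on a single-language corpus. The rule below keeps a neuron if its importance reaches a threshold on at least a given share of tokens. This rule, the `threshold` value, and the `min_share` value are all hypothetical and illustrative, not the paper's exact criterion.

```python
def select_language_neurons(importance: list[list[float]],
                            threshold: float,
                            min_share: float = 0.9) -> set[int]:
    """importance[t][k] is the importance of neuron k on token t of a
    single-language corpus. Returns the indices of neurons whose importance
    is >= threshold on at least `min_share` of the tokens.
    Both knobs are hypothetical, not values taken from the paper."""
    if not importance:
        return set()
    num_tokens = len(importance)
    num_neurons = len(importance[0])
    selected = set()
    for k in range(num_neurons):
        hits = sum(1 for row in importance if row[k] >= threshold)
        if hits / num_tokens >= min_share:
            selected.add(k)
    return selected


# Made-up scores: 3 tokens x 3 neurons.
scores = [[0.9, 0.1, 0.5], [0.8, 0.2, 0.05], [0.95, 0.0, 0.6]]
print(select_language_neurons(scores, threshold=0.4))  # → {0}
```

Loosening `min_share` admits neurons that fire often but not always (neuron 2 above passes at `min_share=0.6`). The rule trades recall against how "language-specific" the resulting set is.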

The PLND method is mathematically defined for both Feed-Forward and Self-Attention layers. For the Feed-Forward Layer in Llama2, a neuron's importance is the difference in the layer's output when a specific neuron of $W_{\text{up}}$ is activated versus deactivated. A diagonal mask matrix lets the importance of all neurons be computed efficiently in parallel. For the Self-Attention Layer, importance is the difference in the attention weights when a specific neuron in $W_Q$ or $W_K$ is deactivated; this also allows parallel computation.
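Below is a minimal sketch of the feed-forward case. It assumes a Llama-style FFN, $\mathrm{FFN}(x) = W_{\text{down}}\,(\mathrm{SiLU}(W_{\text{gate}}x) \odot W_{\text{up}}x)$, with column-vector conventions. It also assumes importance is the L2 norm of the output change for a single token, and plain Python lists stand in for tensors.

The shortcut works because deactivating intermediate neuron $k$ removes exactly $h_k$ times column $k$ of $W_{\text{down}}$. Scaling the columns of $W_{\text{down}}$ by the diagonal matrix $\mathrm{diag}(h)$ therefore yields every neuron's output change in one pass. The brute-force loop is included only to check that equivalence. The helper names are illustrative.

```python
import math
import random


def silu(z: float) -> float:
    # SiLU(z) = z * sigmoid(z); the tanh form avoids overflow for large |z|.
    return z * 0.5 * (1.0 + math.tanh(0.5 * z))


def matvec(W: list[list[float]], x: list[float]) -> list[float]:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def intermediate(x, W_gate, W_up):
    """h = SiLU(W_gate x) * (W_up x): one entry per intermediate neuron."""
    return [silu(g) * u for g, u in zip(matvec(W_gate, x), matvec(W_up, x))]


def ffn(x, W_gate, W_up, W_down, mask=None):
    """Llama-style FFN output; mask[k] = 0.0 deactivates neuron k."""
    h = intermediate(x, W_gate, W_up)
    if mask is not None:
        h = [hk * mk for hk, mk in zip(h, mask)]
    return matvec(W_down, h)


def importance_bruteforce(x, W_gate, W_up, W_down):
    """Deactivate one neuron at a time and measure the output change."""
    full = ffn(x, W_gate, W_up, W_down)
    n = len(W_up)
    scores = []
    for k in range(n):
        mask = [0.0 if j == k else 1.0 for j in range(n)]
        scores.append(math.dist(full, ffn(x, W_gate, W_up, W_down, mask)))
    return scores


def importance_parallel(x, W_gate, W_up, W_down):
    """All neurons at once: column k of W_down @ diag(h) is the output change
    from deactivating neuron k, so its column norms are the scores."""
    h = intermediate(x, W_gate, W_up)
    return [abs(hk) * math.sqrt(sum(row[k] ** 2 for row in W_down))
            for k, hk in enumerate(h)]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, d_inter = 4, 6
W_gate = random_matrix(d_inter, d_model, rng)
W_up = random_matrix(d_inter, d_model, rng)
W_down = random_matrix(d_model, d_inter, rng)
x = [rng.gauss(0.0, 1.0) for _ in range(d_model)]
slow = importance_bruteforce(x, W_gate, W_up, W_down)
fast = importance_parallel(x, W_gate, W_up, W_down)
```

The parallel form needs one forward pass per token instead of one per neuron. That difference is what makes scanning every neuron over a corpus affordable.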

Empirical Validation Through Neuron Deactivation

The paper provides empirical evidence by deactivating language-specific neurons in different layers and observing the impact on performance:

- Understanding Layer: Deactivating language-specific neurons in the understanding layer significantly impairs performance in non-English languages while maintaining English performance. This observation supports the hypothesis that these layers are crucial for processing and translating non-English inputs.

- Task-Solving Layer: Deactivating language-specific neurons in the task-solving layers reduces performance across all languages, including English. This supports the idea that the task-solving process depends heavily on English. The two sub-structures behave differently:
  - Deactivating these neurons within the self-attention structure impairs task-solving across all languages.
  - Deactivating them within the feed-forward structure mainly affects non-English languages.

- Generation Layer: Deactivating language-specific neurons in the generation layer affects the model's ability to generate outputs in non-English languages, as expected.
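Each of these experiments zeroes selected neurons in one band of layers (understanding, task-solving, or generation) and then re-evaluates the model. The sketch below only builds the per-layer masks, which would multiply a layer's intermediate FFN activations before the down-projection. It leaves out attention neurons and the evaluation itself. The layer band and neuron indices in the usage are hypothetical.

```python
def stage_masks(num_layers: int,
                d_inter: int,
                neurons_by_layer: dict[int, set[int]],
                layers: range) -> list[list[float]]:
    """One mask per layer: 0.0 for language-specific neurons inside `layers`,
    1.0 everywhere else, so layers outside the chosen band are untouched."""
    masks = []
    for layer in range(num_layers):
        drop = neurons_by_layer.get(layer, set()) if layer in layers else set()
        masks.append([0.0 if k in drop else 1.0 for k in range(d_inter)])
    return masks


# Hypothetical setup: 6 layers with 4 neurons each, and a made-up
# "understanding" band covering layers 0-1.
neurons = {0: {1}, 1: {0, 3}, 4: {2}}
masks = stage_masks(6, 4, neurons, range(0, 2))
print(masks[0], masks[4])  # → [1.0, 0.0, 1.0, 1.0] [1.0, 1.0, 1.0, 1.0]
```

Keeping the neuron sets fixed and moving only the `layers` band isolates the effect of *where* the neurons are removed, which is the comparison these bullets report.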

Furthermore, the paper finds that languages from the same family tend to share more of their language-specific neurons. English-specific neurons show limited overlap with those of other languages. The paper reads this as underscoring the predominant role of English within the model.
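Overlap between two languages' neuron sets can be quantified in several ways. The sketch below uses intersection over union (Jaccard similarity); that choice is an assumption and not necessarily the paper's metric. Neurons are identified by hypothetical `(layer, index)` pairs, and the example sets are made up.

```python
from itertools import combinations

Neuron = tuple[int, int]  # (layer, neuron index)


def jaccard(a: set[Neuron], b: set[Neuron]) -> float:
    """|A ∩ B| / |A ∪ B|, defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def pairwise_overlap(neurons_by_lang: dict[str, set[Neuron]]) -> dict[tuple[str, str], float]:
    """Jaccard overlap for every unordered pair of languages."""
    return {(x, y): jaccard(neurons_by_lang[x], neurons_by_lang[y])
            for x, y in combinations(sorted(neurons_by_lang), 2)}


# Made-up sets: two Romance languages share neurons; English shares none.
sets = {
    "en": {(10, 5), (11, 7)},
    "es": {(2, 1), (2, 4), (30, 8)},
    "fr": {(2, 1), (2, 4), (31, 9)},
}
overlap = pairwise_overlap(sets)
print(overlap[("es", "fr")], overlap[("en", "es")])  # → 0.5 0.0
```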

Fine-tuning Language-Specific Neurons

The paper demonstrates that fine-tuning language-specific neurons with a small number of contextual examples can enhance the multilingual capabilities of LLMs. This targeted fine-tuning improves performance, particularly in multilingual understanding and generation. The paper reports average improvements for both high-resource and low-resource languages across all tasks, using just 400 documents.
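One way to fine-tune only the language-specific neurons is to mask updates so that every other parameter stays frozen. The sketch below shows a single plain SGD step on a weight matrix whose rows correspond to neurons. The row-per-neuron layout, the learning rate, and the use of plain SGD are assumptions for illustration, not the paper's training setup.

```python
def masked_sgd_step(W: list[list[float]],
                    grad: list[list[float]],
                    trainable_rows: set[int],
                    lr: float = 1e-3) -> list[list[float]]:
    """Return updated weights: rows (neurons) in `trainable_rows` take an
    SGD step; all other rows are copied unchanged, i.e. frozen."""
    return [
        [w - lr * g for w, g in zip(row, grad_row)] if i in trainable_rows else list(row)
        for i, (row, grad_row) in enumerate(zip(W, grad))
    ]


W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
grad = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
print(masked_sgd_step(W, grad, trainable_rows={1}, lr=0.5))
# → [[1.0, 2.0], [2.5, 3.5], [5.0, 6.0]]
```

In a deep-learning framework, the same effect is usually achieved by multiplying each parameter's gradient by a 0/1 mask before the optimizer step. With optimizers that apply weight decay, the frozen rows must also be excluded from decay, or they will still drift.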

In summary, LLMs process multilingual inputs by converting them into a unified representation (often English) in the initial layers, leveraging English for task-solving in the intermediate layers, and generating responses in the original language in the final layers. Techniques like PLND enable the identification and manipulation of language-specific neurons, offering insights into the multilingual capabilities of LLMs and enabling targeted fine-tuning for enhanced performance.