Accumulating Data: A Strategy to Prevent Model Collapse in Generative Models

Introduction

The advancement of generative models has created a setting in which models are often trained on a mix of real and synthetic data. This raises the question of whether models trained iteratively on their own outputs will suffer model collapse. Model collapse, the progressive degradation of model performance over successive iterations, poses a significant challenge to the sustainability of model training practices. Recent literature mostly considers scenarios in which each iteration's new data replace the previous iterations' data, neglecting the more realistic setting in which data accumulate over time. In this paper, we examine how data accumulation affects model collapse, presenting theoretical proofs and empirical results across different model types and data modalities. Our results show that, unlike replacing data, accumulating data substantially mitigates the risk of model collapse.

Theoretical Foundations: Linear Regression Models

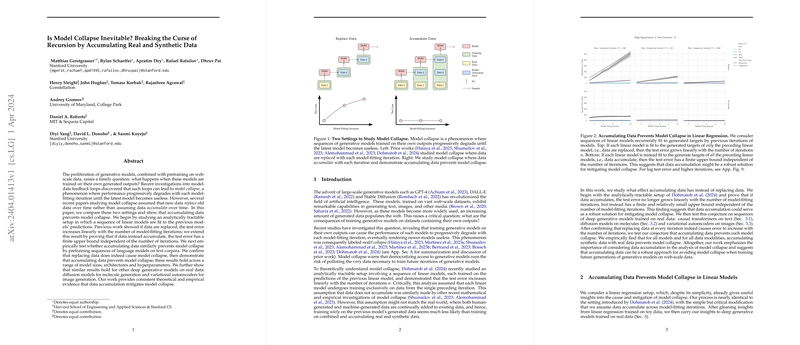

Our exploration begins with a theoretically tractable scenario: a sequence of linear regression models, each fit to data labeled by its predecessor. Previous studies showed that when new data replace the old, test error grows linearly with the number of iterations, making model collapse inevitable. Contrary to these findings, our theoretical analysis demonstrates that allowing data to accumulate, so that each iteration's data are added to a growing dataset, yields a finite upper bound on the test error regardless of the number of iterations. Specifically, for isotropic features, the test error is bounded above by a constant that does not depend on the number of iterations: at most π²/6 times the test error of the first model, which is fit on real data alone. This finding suggests that data accumulation can serve as an effective mechanism for curbing model collapse.
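The bound can be seen in a few lines under a simplified setup that we assume here (details may differ from the paper's formal statement): a fixed design matrix X ∈ R^{T×d} with i.i.d. N(0, 1) entries reused at every iteration, label noise ε_k ~ N(0, σ²I_T) drawn independently each iteration, ordinary least squares at each step, real labels y_1 = X w^⋆ + ε_1, and synthetic labels y_k = X ŵ_{k−1} + ε_k for k ≥ 2. Write A = (X^⊤X)^{−1}X^⊤ and ŵ_0 = w^⋆, and measure test error as E_test(ŵ) = E[(x^⊤ŵ − x^⊤w^⋆)²] for isotropic test inputs x ~ N(0, I_d), so that E_test(ŵ) = E‖ŵ − w^⋆‖².

```latex
\begin{aligned}
\text{Replace:}\quad & \hat w_n = A y_n = \hat w_{n-1} + A\varepsilon_n
  \;\Longrightarrow\; \hat w_n - w^\star = \sum_{k=1}^{n} A\varepsilon_k, \\
\text{Accumulate:}\quad & \hat w_n = \frac{1}{n}\sum_{k=1}^{n} A y_k
  = \hat w_{n-1} + \frac{1}{n} A\varepsilon_n
  \;\Longrightarrow\; \hat w_n - w^\star = \sum_{k=1}^{n} \frac{1}{k} A\varepsilon_k. \\[4pt]
\text{Using } & A A^\top = (X^\top X)^{-1} \text{ and } \mathbb{E}\big[(X^\top X)^{-1}\big] = \frac{I_d}{T-d-1} \; (T > d+1): \\
E_{\text{test}}\big(\hat w_n^{\text{Replace}}\big) &= \sigma^2 \, \frac{d}{T-d-1} \, n, \\
E_{\text{test}}\big(\hat w_n^{\text{Accum}}\big) &= \sigma^2 \, \frac{d}{T-d-1} \sum_{k=1}^{n} \frac{1}{k^2}
  \;\le\; \frac{\pi^2}{6} \, \sigma^2 \, \frac{d}{T-d-1}.
\end{aligned}
```

The key difference is the 1/k weight on each iteration's noise: replacing data lets every round of noise enter the estimate at full weight, while accumulating dilutes the k-th round by the k copies of data already in the pool, so the variance contributions form the convergent series Σ 1/k² instead of growing linearly in n.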

Empirical Validation Across Model Types

To validate our theoretical insights, we conducted extensive experiments across various generative models and data types:

- LLMs: We trained a cascade of transformer-based LLMs on text data and found that replacing data across iterations led to model collapse, as shown by rising test cross-entropy. In contrast, accumulating data halted this degradation and in some cases improved model performance.

- Diffusion Models on Molecular Data: For molecule generation with diffusion models, we observed the same pattern: data accumulation consistently outperformed replacement in preserving model performance.

- Image Generation with Variational Autoencoders (VAEs): For image generation, training VAEs on accumulated data substantially slowed the growth of test error, underscoring that our findings hold across model types and data modalities.
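Separately from the deep-model experiments above, the linear-regression result is easy to check numerically. The sketch below is a hypothetical illustration, not the authors' code. It assumes a simplified setup: a fixed Gaussian design reused at every iteration, ordinary least squares via `numpy.linalg.lstsq`, and Gaussian label noise. The helper names `run_chain` and `mean_test_error` and the defaults `d=10`, `T=100`, `sigma=1.0`, `trials=200` are our own choices. Both strategies share the first iteration (a fit on real data only) and then diverge only in whether the synthetic batches are pooled.

```python
import numpy as np


def run_chain(rng, n_iters, accumulate, d, T, sigma):
    """One model-data feedback loop; returns the test error of each iteration's fit.

    Test error is ||w_hat - w_star||^2, which equals E[(x @ w_hat - x @ w_star)^2]
    for isotropic test inputs x ~ N(0, I_d).
    """
    X = rng.standard_normal((T, d))                  # fixed design, reused every iteration (assumption)
    w_star = rng.standard_normal(d)                  # ground-truth weights
    y = X @ w_star + sigma * rng.standard_normal(T)  # iteration 1 uses real labels
    X_pool, y_pool = X, y                            # all data seen so far (used only when accumulating)
    errors = []
    for _ in range(n_iters):
        # Accumulate fits on everything so far; replace fits on the newest batch only.
        X_fit, y_fit = (X_pool, y_pool) if accumulate else (X, y)
        w_hat = np.linalg.lstsq(X_fit, y_fit, rcond=None)[0]  # ordinary least squares
        errors.append(float(np.sum((w_hat - w_star) ** 2)))
        # The next batch is synthetic: labels from the current model plus fresh noise.
        y = X @ w_hat + sigma * rng.standard_normal(T)
        if accumulate:
            X_pool = np.vstack([X_pool, X])
            y_pool = np.concatenate([y_pool, y])
    return errors


def mean_test_error(n_iters, accumulate, d=10, T=100, sigma=1.0, trials=200, seed=0):
    """Monte Carlo average of the per-iteration test error over independent chains."""
    rng = np.random.default_rng(seed)
    runs = [run_chain(rng, n_iters, accumulate, d, T, sigma) for _ in range(trials)]
    return np.mean(runs, axis=0)


if __name__ == "__main__":
    d, T, n = 10, 100, 20
    base = d / (T - d - 1)  # sigma^2 * E[tr((X^T X)^{-1})] with sigma = 1
    replace = mean_test_error(n, accumulate=False, d=d, T=T)
    accum = mean_test_error(n, accumulate=True, d=d, T=T)
    print(f"replace    after {n} iters: {replace[-1]:.3f}  (theory n*d/(T-d-1) = {n * base:.3f})")
    print(f"accumulate after {n} iters: {accum[-1]:.3f}  (bound (pi^2/6)*d/(T-d-1) = {np.pi ** 2 / 6 * base:.3f})")
```

With these defaults, d/(T − d − 1) = 10/89 ≈ 0.112. Under this setup, theory predicts a mean error of about 2.25 after 20 replace iterations, while accumulation stays below about 0.185 no matter how many iterations are run. The Monte Carlo estimates should land close to these values, with some sampling noise.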

Implications and Future Directions

The implications of this research are twofold. Practically, our findings support accumulating data as a strategy for keeping generative models trained on web-scale data reliable over time, mitigating the risk of model collapse. Theoretically, this work extends our understanding of model-data feedback loops, challenging prior assumptions and highlighting the robustness that data accumulation provides.

Looking ahead, this research opens avenues for further exploration into optimized data accumulation strategies, the dynamics of model bias in accumulated datasets, and the extension of these principles to other model architectures and training paradigms. As the boundary between real and synthetic data continues to blur, ensuring the robustness of generative models becomes paramount, with data accumulation emerging as a key strategy in this endeavor.