Overview of "Collapse or Thrive? Perils and Promises of Synthetic Data in a Self-Generating World"

The paper "Collapse or Thrive? Perils and Promises of Synthetic Data in a Self-Generating World" investigates the consequences of training generative machine learning models on large datasets that include synthetic data produced by earlier models. It addresses the critical question of whether future models will suffer from degradation, known as model collapse, or if they will continue to improve.

Key Scenarios Analyzed

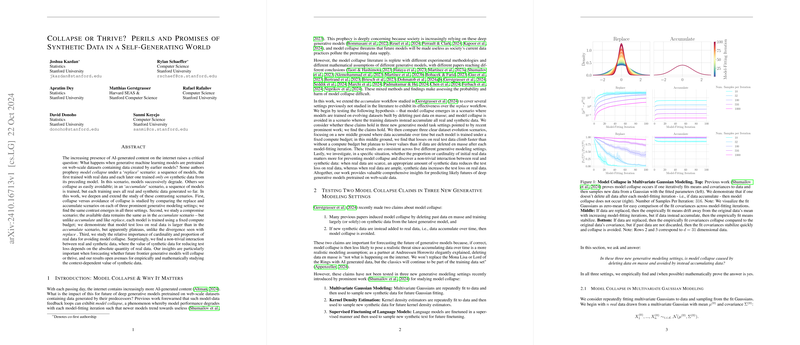

The authors compare three scenarios: 'replace,' 'accumulate,' and a compromise termed 'Accumulate-Subsample.' In the 'replace' scenario, each new model is trained only on synthetic data generated by its predecessor, and performance degrades over successive iterations. In the 'accumulate' scenario, each new model is trained on all real and synthetic data available so far, which avoids collapse and keeps performance stable across iterations. The 'Accumulate-Subsample' scenario also lets data accumulate but imposes a fixed compute budget by training each model on a fixed-size subsample of the accumulated data. Here, test loss on real data is higher than under 'accumulate' but stabilizes over time, unlike the divergence observed under 'replace.'
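The three scenarios can be illustrated with a toy simulation. The sketch below is not the authors' code: it assumes a one-dimensional Gaussian fitted by sample mean and standard deviation, and the function name `simulate_variances`, the sample size of 20, and the 1000 iterations are illustrative choices made here, not settings taken from the paper.

```python
import numpy as np


def simulate_variances(scenario, n_iters=1000, n=20, seed=0):
    """Fit a 1D Gaussian repeatedly and return the fitted variance per iteration.

    Hypothetical toy setup: the real data are n draws from N(0, 1), and each
    iteration generates n synthetic points from the most recently fitted model.
    """
    rng = np.random.default_rng(seed)
    real = rng.normal(0.0, 1.0, size=n)
    pool = real.copy()   # everything generated or observed so far
    train = real         # data used to fit the next model
    variances = []
    for _ in range(n_iters):
        mu = train.mean()
        sigma = train.std(ddof=1)
        variances.append(sigma ** 2)
        synthetic = rng.normal(mu, sigma, size=n)
        if scenario == "replace":
            # Discard all earlier data; train only on the newest synthetic data.
            train = synthetic
        elif scenario == "accumulate":
            # Keep all real and synthetic data and train on the whole pool.
            pool = np.concatenate([pool, synthetic])
            train = pool
        elif scenario == "accumulate-subsample":
            # Keep all data, but train on a fixed-size random subsample.
            pool = np.concatenate([pool, synthetic])
            train = rng.choice(pool, size=n, replace=False)
        else:
            raise ValueError(f"unknown scenario: {scenario}")
    return np.array(variances)


if __name__ == "__main__":
    for name in ("replace", "accumulate", "accumulate-subsample"):
        final = simulate_variances(name)[-1]
        print(f"{name}: final fitted variance = {final:.3g}")
```

In this toy setting the fitted variance under 'replace' should shrink toward zero, while the two accumulating variants should stay on the order of the true variance. The exact values depend on the seed and the assumed sample size.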

Methodologies and Evidence

The paper examines these scenarios across several generative modeling tasks, including multivariate Gaussian modeling, kernel density estimation (KDE), and supervised fine-tuning of LLMs. In all settings, empirical and mathematical analyses consistently show that accumulating data prevents collapse, whereas replacing data degrades performance. This points to a broader pattern: retaining past data stabilizes model outputs, which suggests a practical guideline for constructing future training datasets.

Numerical Findings

The paper supports these claims with numerical experiments. For instance, in kernel density estimation, model test loss increases when prior data are replaced but remains stable when data accumulate. The authors also show that synthetic data can improve test loss under the 'accumulate' scenario, highlighting the nuanced role of synthetic data in model training.
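The KDE comparison can be sketched in a few lines. This is a minimal illustration under assumptions made here, not the paper's experimental setup: a one-dimensional Gaussian-kernel KDE with Scott's-rule bandwidth, 100 real training points drawn from a standard normal, and 40 model-fitting iterations. The helper names (`scott_bandwidth`, `kde_sample`, `kde_mean_nll`, `run_kde`) are hypothetical.

```python
import numpy as np


def scott_bandwidth(data):
    # Scott's rule for 1D data: sample std times n^(-1/5).
    return data.std(ddof=1) * data.size ** (-1.0 / 5.0)


def kde_sample(data, size, bandwidth, rng):
    # Sampling from a Gaussian KDE: pick a data point, add kernel noise.
    centers = rng.choice(data, size=size, replace=True)
    return centers + bandwidth * rng.normal(size=size)


def kde_mean_nll(data, points, bandwidth):
    # Mean negative log-likelihood of `points` under the KDE built on `data`.
    z = (points[:, None] - data[None, :]) / bandwidth
    log_kernel = -0.5 * z ** 2 - np.log(bandwidth * np.sqrt(2.0 * np.pi))
    m = log_kernel.max(axis=1, keepdims=True)  # log-sum-exp for stability
    log_density = m[:, 0] + np.log(np.exp(log_kernel - m).sum(axis=1))
    log_density -= np.log(data.size)
    return -log_density.mean()


def run_kde(scenario, n_iters=40, n=100, n_test=500, seed=0):
    """Return the test loss on held-out real data for each model-fitting iteration."""
    rng = np.random.default_rng(seed)
    train = rng.normal(size=n)           # real training data (assumed N(0, 1))
    real_test = rng.normal(size=n_test)  # held-out real data
    losses = []
    for _ in range(n_iters):
        h = scott_bandwidth(train)
        losses.append(kde_mean_nll(train, real_test, h))
        synthetic = kde_sample(train, n, h, rng)
        if scenario == "replace":
            train = synthetic
        elif scenario == "accumulate":
            train = np.concatenate([train, synthetic])
        else:
            raise ValueError(f"unknown scenario: {scenario}")
    return np.array(losses)


if __name__ == "__main__":
    for name in ("replace", "accumulate"):
        losses = run_kde(name)
        print(f"{name}: first loss = {losses[0]:.3f}, last loss = {losses[-1]:.3f}")
```

Under these assumptions, each 'replace' step adds kernel noise on top of the previous model's samples, so the fitted density keeps widening and the test loss on real data rises. Under 'accumulate' the growing pool shrinks the bandwidth, and the loss should stay close to its starting value.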

Cardinality vs. Proportion of Real Data

The authors also examine how the cardinality (absolute number) and the proportion of real data interact with synthetic data in preventing model collapse. Preliminary results suggest that both the absolute number and the proportion of real data significantly influence outcomes, with synthetic data sometimes improving test loss when real data are scarce.

Theoretical and Practical Implications

The findings have both theoretical and practical implications. Theoretically, they clarify the dynamics of model-data feedback loops in generative models and challenge the assumption that model collapse is inevitable. Practically, they inform strategies for dataset construction, emphasizing that retaining and accumulating data helps preserve model robustness and accuracy.

Future Directions

The paper proposes several future research directions, such as optimizing the use of synthetic data alongside filtering techniques and developing robust removal methods for detrimental data. These pathways could significantly improve the efficiency and quality of model training and application.

Overall, this paper contributes valuable insights into the dynamics of synthetic data in AI model training, offering a framework to predict and guide the development of future generative models.