Long-text Uncertainty Quantification for LLMs Using Luq

Introduction

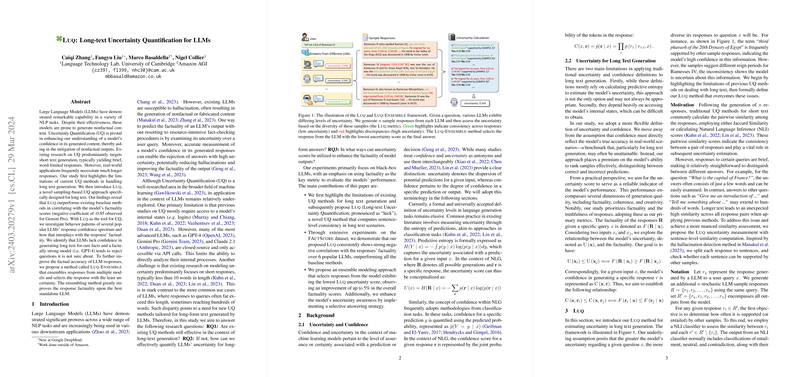

Advancements in LLMs, including prominent models like GPT-4 and Gemini Pro, have significantly impacted various NLP tasks. Despite their capabilities, these models are often prone to generating nonfactual content, a phenomenon known as hallucination. This issue underscores the importance of Uncertainty Quantification (UQ) to assess a model's confidence in its generated outputs and subsequently mitigate the risk of nonfactual generations. However, existing UQ approaches are designed predominantly for short text generation, leaving a noticeable gap in methodologies suited for the long-text generation often required in real-world applications. Addressing this gap, the paper introduces Luq, a novel sampling-based UQ method specifically tailored for evaluating model confidence in long-text generation scenarios.

Background and Motivation

Uncertainty and confidence in machine learning models generally relate to the assurance level associated with a model's prediction. Traditional UQ methods for text generation struggle with long text because they either depend on access to model internals or were designed for brief outputs. The paper proposes Luq, which aims to quantify uncertainty for long-form text by estimating sentence-level consistency across sampled responses, thus addressing the limitations of existing methods.

The Luq Method

Luq quantifies uncertainty by sampling multiple responses to a given query from an LLM and assessing their consistency. The key assumption underpinning Luq is that higher model uncertainty about a question results in greater diversity among the generated responses. Using a natural language inference (NLI) classifier to evaluate sentence-level entailment among the responses allows for a nuanced assessment of consistency. This approach suits long-text scenarios, where diversity among extensive responses provides insight into the model's certainty. The paper reports that Luq outperforms baseline methods, correlating more strongly with the models' factuality scores, especially for models known to generate longer responses.
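The sampling-and-consistency idea can be illustrated with a minimal Python sketch. This is not the paper's implementation. The aggregation (averaging sentence-level support over the other responses, then taking one minus the mean) is an assumption, and `toy_entailment` is a hypothetical token-overlap stand-in for a real NLI classifier, used only so the example runs without external models. The names `split_sentences` and `luq_style_uncertainty` are likewise illustrative.

```python
import re
from typing import Callable, List


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def toy_entailment(premise: str, hypothesis: str) -> float:
    """Hypothetical stand-in for an NLI classifier (assumption, not NLI):
    the fraction of hypothesis tokens that also occur in the premise."""
    premise_tokens = set(re.findall(r"\w+", premise.lower()))
    hypothesis_tokens = re.findall(r"\w+", hypothesis.lower())
    if not hypothesis_tokens:
        return 0.0
    hits = sum(tok in premise_tokens for tok in hypothesis_tokens)
    return hits / len(hypothesis_tokens)


def luq_style_uncertainty(
    responses: List[str],
    entail: Callable[[str, str], float] = toy_entailment,
) -> float:
    """Return 1 - mean consistency, where a response's consistency is the
    average support its sentences receive from every other response."""
    if len(responses) < 2:
        raise ValueError("need at least two sampled responses")

    consistencies = []
    for i, response in enumerate(responses):
        sentences = split_sentences(response)
        others = [r for j, r in enumerate(responses) if j != i]
        if not sentences:
            # An empty response has nothing that the others can support.
            consistencies.append(0.0)
            continue
        # Support from each other response: mean entailment over sentences.
        support = [
            sum(entail(other, s) for s in sentences) / len(sentences)
            for other in others
        ]
        consistencies.append(sum(support) / len(support))

    return 1.0 - sum(consistencies) / len(consistencies)


if __name__ == "__main__":
    samples = [
        "Paris is in France.",
        "Paris is in Spain.",
        "Paris is in France.",
    ]
    print(luq_style_uncertainty(samples))
```

In practice the `entail` argument would wrap an actual NLI model that returns an entailment probability for a (premise, hypothesis) pair. Token overlap cannot detect contradiction, so the score it produces is only a rough proxy.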

Experimental Findings

Experiments conducted across six popular LLMs revealed that Luq consistently outperformed traditional UQ methods by correlating more strongly with models' factuality scores. Moreover, the paper introduced the Luq-Ensemble method, which leverages the uncertainty scores from multiple models to select the response from the model exhibiting the least uncertainty. This approach notably enhanced response factuality, showcasing the utility of Luq beyond mere uncertainty quantification by directly improving the quality of generated content.
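The selection step described for Luq-Ensemble can be sketched in a few lines of Python, assuming each model has already produced a response and an uncertainty score for the same query. The function name `select_least_uncertain`, the model names, and the scores are hypothetical and chosen only for illustration. They are not results from the paper.

```python
from typing import Dict, Tuple


def select_least_uncertain(
    candidates: Dict[str, Tuple[str, float]],
) -> Tuple[str, str]:
    """Given {model_name: (response, uncertainty)}, return the
    (model_name, response) pair with the lowest uncertainty."""
    if not candidates:
        raise ValueError("no candidate responses supplied")
    best_model = min(candidates, key=lambda name: candidates[name][1])
    return best_model, candidates[best_model][0]


if __name__ == "__main__":
    # Illustrative values only (assumed, not measured).
    candidates = {
        "model_a": ("Response from model A.", 0.42),
        "model_b": ("Response from model B.", 0.17),
        "model_c": ("Response from model C.", 0.63),
    }
    model, response = select_least_uncertain(candidates)
    print(model, response)
```

Ties are resolved in favor of the first model in insertion order, which is a property of `min` rather than a choice the paper is known to make.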

Implications and Future Directions

The introduction of Luq adds a significant tool for assessing and improving the reliability of LLM-generated long text. By providing a method to quantify uncertainty that correlates well with factuality, Luq not only aids in identifying less reliable outputs but also fosters enhancements in model design and deployment strategies. Moving forward, further exploration into incorporating uncertainty quantification within model training processes might yield models inherently less prone to hallucinations. Additionally, extending the methodologies to include a broader range of evaluation metrics could offer a more holistic understanding of model outputs beyond factuality alone.

This research marks a step forward in addressing the challenges posed by long-text generation in LLMs. By acknowledging and quantifying the inherent uncertainty in model-generated content, Luq paves the way for more accurate, reliable, and factual AI-generated text. Future work may explore optimizing UQ for a wider array of text generation tasks, potentially leading to LLMs better attuned to the nuances of uncertainty and factuality in their outputs.