Enhancing Long-Video Understanding with Multimodal LLMs

Introduction

Video understanding has benefited substantially from advances in LLMs and their visual extensions, Vision-LLMs (VLMs). Drawing on extensive world knowledge, these models perform strongly on complex, language-tied video understanding tasks. A closer look at their underlying mechanics, however, reveals room for improvement, particularly in handling long videos and in integrating video-specific, object-centric information in a more interpretable manner.

Likelihood Selection for Efficient Inference

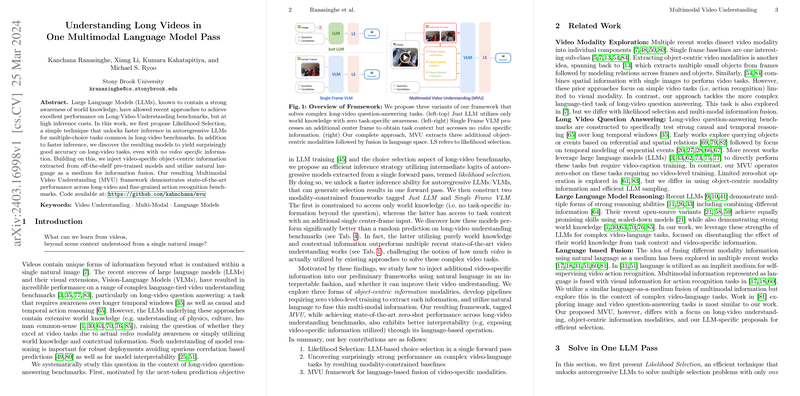

The proposed Likelihood Selection technique applies autoregressive LLMs and VLMs to multiple-choice and fine-grained video action recognition tasks. It selects an answer in a single forward pass, which makes inference faster than repeated, iterative generation. In preliminary tests, two modality-constrained frameworks (Just LLM and Single Frame VLM) prove surprisingly effective, even surpassing several state-of-the-art methods, which underscores how much can be achieved with minimal video-specific data.
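The selection step can be illustrated with a minimal sketch. It assumes that a teacher-forced forward pass has already produced, for each candidate answer, one logit vector per answer token. The function names, the toy four-token vocabulary, and the length normalisation are illustrative assumptions, not details taken from the source.

```python
import math
from typing import List, Sequence, Tuple


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary-sized logit vector."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def candidate_log_likelihood(
    step_logits: Sequence[Sequence[float]], token_ids: Sequence[int]
) -> float:
    """Mean log-probability of a candidate's tokens under teacher forcing.

    step_logits[t] is the logit vector at the position that predicts
    token_ids[t]. Averaging over tokens (length normalisation) is an
    assumption made here so that long answers are not penalised.
    """
    if not token_ids or len(step_logits) != len(token_ids):
        raise ValueError("need one logit vector per candidate token")
    total = sum(log_softmax(l)[tok] for l, tok in zip(step_logits, token_ids))
    return total / len(token_ids)


def likelihood_select(
    batch_logits: Sequence[Sequence[Sequence[float]]],
    batch_token_ids: Sequence[Sequence[int]],
) -> Tuple[int, List[float]]:
    """Score every candidate and return (index of best candidate, all scores)."""
    scores = [
        candidate_log_likelihood(l, t)
        for l, t in zip(batch_logits, batch_token_ids)
    ]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Toy example with a 4-token vocabulary (hypothetical values).
logits_a = [[0.0, 3.0, 0.0, 0.0], [0.0, 0.0, 3.0, 0.0]]  # favours tokens 1, 2
logits_b = [[1.0, 1.0, 1.0, 1.0]]                        # uniform over vocab
best, scores = likelihood_select([logits_a, logits_b], [[1, 2], [3]])
print(best, scores)
```

In a real model the per-candidate logits would come from batching all candidates through the network once; no tokens are sampled, so the cost does not grow with the number of generation steps.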

Multimodal Video Understanding (MVU) Framework

Building on the strengths of LLMs in world knowledge and contextual understanding, the MVU framework integrates video-specific, object-centric modalities into the LLM processing pipeline. Three modalities (Global Object Information, Object Spatial Location, and Object Motion Trajectory) are extracted using pre-existing models, so no additional video-level training is required. Fused through natural language, these inputs supplement the model's awareness of the video content, improving its interpretive capability and its performance across various video understanding benchmarks.
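One way to picture natural language-based fusion is as plain prompt construction. The sketch below is a hypothetical illustration: the `TrackedObject` container, the sentence templates, and the coordinate format are all assumptions, and the source does not specify how the framework actually words these inputs.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class TrackedObject:
    """Hypothetical container for one object's extracted information."""
    label: str
    # (frame_index, x_center, y_center), coordinates normalised to [0, 1]
    trajectory: List[Tuple[int, float, float]]


def describe_global_objects(objects: Sequence[TrackedObject]) -> str:
    """Global Object Information: which object categories appear at all."""
    labels = sorted({o.label for o in objects})
    return "Objects in the video: " + ", ".join(labels) + "."


def describe_spatial_locations(objects: Sequence[TrackedObject]) -> str:
    """Object Spatial Location: first observed position of each object."""
    parts = [
        f"{o.label} at ({o.trajectory[0][1]:.2f}, {o.trajectory[0][2]:.2f})"
        for o in objects
        if o.trajectory
    ]
    return "Object locations: " + "; ".join(parts) + "."


def describe_motion(objects: Sequence[TrackedObject]) -> str:
    """Object Motion Trajectory: positions of each object over time."""
    parts = []
    for o in objects:
        path = " -> ".join(
            f"({x:.2f}, {y:.2f}) at frame {f}" for f, x, y in o.trajectory
        )
        parts.append(f"{o.label}: {path}")
    return "Object motion trajectories: " + "; ".join(parts) + "."


def build_prompt(
    question: str, choices: Sequence[str], objects: Sequence[TrackedObject]
) -> str:
    """Fuse the three textual descriptions with the question and options."""
    context = "\n".join(
        [
            describe_global_objects(objects),
            describe_spatial_locations(objects),
            describe_motion(objects),
        ]
    )
    options = "\n".join(
        f"{chr(ord('A') + i)}. {c}" for i, c in enumerate(choices)
    )
    return f"{context}\nQuestion: {question}\n{options}\nAnswer:"


# Hypothetical detections for a short clip.
objects = [
    TrackedObject("hand", [(0, 0.20, 0.50), (8, 0.45, 0.50)]),
    TrackedObject("cup", [(0, 0.50, 0.50), (8, 0.50, 0.30)]),
]
prompt = build_prompt(
    "What is the person doing?", ["picking up a cup", "closing a door"], objects
)
print(prompt)
```

Because every modality ends up as readable text, the information the LLM receives can be inspected directly, which is the interpretability benefit the section describes.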

Experimental Results

The MVU framework is validated empirically on multiple long-video and fine-grained action recognition benchmarks. Notably, it achieves state-of-the-art zero-shot performance on tasks that require extensive causal and temporal reasoning. Experiments in the robotics domain further attest to the framework's versatility and its applicability beyond conventional video datasets. Modality-constrained evaluations show that incorporating video-specific information matters: performance improves notably over approaches that rely on world knowledge alone.

Conclusion and Future Work

This research introduces an approach to long-video understanding that leverages the strengths of LLMs while addressing their limitations in handling long video content and object-centric information. Its Language-based Fusion of multimodal information improves both interpretability and performance on video understanding tasks. Future work could integrate additional modalities and refine how information is extracted and presented, with the aim of further advances in video-language research.