The paper "FIT-RAG: Black-Box RAG with Factual Information and Token Reduction" addresses challenges in applying large language models (LLMs) to knowledge-intensive tasks. Fine-tuning an LLM to update long-tail or out-of-date knowledge is often impractical because of the sheer number of parameters involved. Instead, the authors advocate treating the LLM as a black box (i.e., freezing its parameters) and augmenting it with a Retrieval-Augmented Generation (RAG) system, an approach known as black-box RAG.

However, existing black-box RAG methodologies face two notable issues:

- Ignorance of Factual Information: Existing systems typically fine-tune the retriever to match the LLM's preferences alone. Those preferences do not always coincide with whether a document actually contains the facts needed to answer the question, so this training signal can mislead the retriever and reduce the effectiveness of RAG.

- Waste of Tokens: Concatenating all retrieved documents into the input adds many unnecessary tokens, which reduces efficiency.

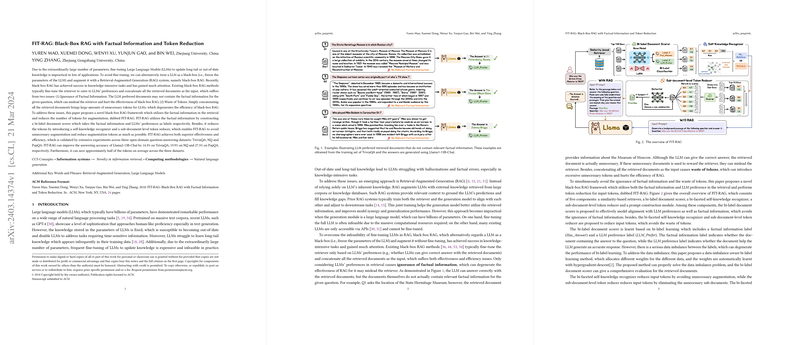

To mitigate these issues, the authors propose a novel black-box RAG framework named FIT-RAG. This framework introduces two main strategies:

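- Bi-label Document Scorer: This component brings factual information into the retrieval process. It scores each candidate document on two labels, whether the document contains the factual information needed to answer the question and whether it matches the LLM's preferences, so that documents are not selected on LLM preference alone.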

- Self-Knowledge Recognizer and Sub-document-level Token Reducer: These components reduce token waste. The self-knowledge recognizer determines whether the LLM needs external knowledge to answer the question at all, and the token reducer trims the retrieved documents at the sub-document level, keeping only the parts that are useful for augmentation.
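To make the flow of these components concrete, here is a minimal Python sketch. It is not the authors' implementation: the `ScoredDoc` fields, the weighted sum in `combined_score`, the sentence-level splitting, the word-overlap ranking, and the whitespace-style token count are all simplifying assumptions chosen for illustration, and the `knows_answer` callback is a hypothetical stand-in for a self-knowledge recognizer.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ScoredDoc:
    text: str
    has_answer: float  # hypothetical score: does the document contain the answer?
    llm_prefer: float  # hypothetical score: does the document suit the LLM?


def tokens(text: str) -> List[str]:
    # Crude word-level stand-in for a real tokenizer (assumption).
    return re.findall(r"\w+", text.lower())


def combined_score(doc: ScoredDoc, alpha: float = 0.5) -> float:
    # Assumed weighted sum of the two labels; the paper's rule may differ.
    return alpha * doc.has_answer + (1 - alpha) * doc.llm_prefer


def split_sub_documents(text: str) -> List[str]:
    # Treat each sentence as one sub-document (assumption).
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def word_overlap(question: str, chunk: str) -> int:
    # Toy relevance signal: number of words shared with the question.
    return len(set(tokens(question)) & set(tokens(chunk)))


def build_prompt(
    question: str,
    docs: List[ScoredDoc],
    knows_answer: Callable[[str], bool],
    top_k: int = 2,
    token_budget: int = 12,
) -> str:
    # Self-knowledge check: skip retrieval when the model is assumed to know.
    if knows_answer(question):
        return question

    # Bi-label ranking: keep the top_k documents by combined score.
    top_docs = sorted(docs, key=combined_score, reverse=True)[:top_k]

    # Token reduction: rank sub-documents, then keep those that fit the budget.
    chunks = [c for d in top_docs for c in split_sub_documents(d.text)]
    chunks.sort(key=lambda c: word_overlap(question, c), reverse=True)

    kept, used = [], 0
    for chunk in chunks:
        cost = len(tokens(chunk))
        if used + cost <= token_budget:
            kept.append(chunk)
            used += cost
    return "\n".join(kept + [question])
```

In this sketch the prompt shrinks in two places: the question is sent alone when `knows_answer` returns `True`, and otherwise only the sub-documents that fit within `token_budget` are prepended. A real system would replace the toy scores and the overlap heuristic with trained models.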

The authors evaluate the efficacy and efficiency of FIT-RAG on three open-domain question-answering datasets: TriviaQA, NQ, and PopQA. They report the following gains in the answering accuracy of Llama2-13B-Chat:

- 14.3% increase on TriviaQA

- 19.9% increase on NQ

- 27.5% increase on PopQA

Moreover, FIT-RAG demonstrates substantial improvements in efficiency, reducing token usage by approximately half across the three datasets.

In summary, FIT-RAG improves both the accuracy and the efficiency of black-box RAG by bringing factual information into retrieval and by cutting token waste.