HyperLLaVA: Dynamic Expert Tuning Framework for Multimodal LLMs

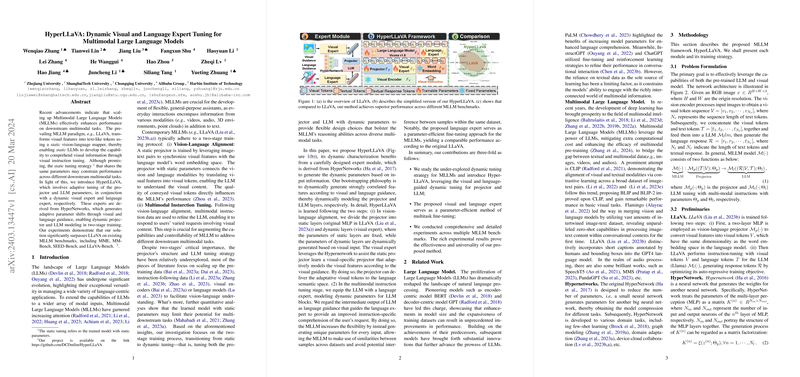

The paper "HyperLLaVA: Dynamic Visual and Language Expert Tuning for Multimodal LLMs" introduces an approach for improving the adaptability and performance of Multimodal LLMs (MLLMs) on downstream tasks. Existing MLLMs typically rely on static tuning: once training ends, the same parameters are applied to every input. HyperLLaVA instead adds dynamic visual and language expert modules derived from HyperNetworks, which generate parameters conditioned on the input. The framework follows a two-stage training protocol in which visual and language-specific guidance drives these dynamic parameter adjustments, and the authors report improved reasoning across diverse multimodal tasks.

Key Innovations

- Dynamic Tuning Strategy: HyperLLaVA moves away from the prevalent static tuning paradigm by using HyperNetworks to adjust the projector and LLM parameters on a per-input basis. This makes the model more flexible across downstream tasks, where static methods fall short because a single fixed set of parameters must serve every input.

- Adaptive Expert Modules: The framework introduces visual and language experts that produce dynamically generated parameters. The visual expert adapts the projector's output based on visual guidance, while the language expert dynamically tunes the LLM layers using intermediate outputs as guidance, improving multimodal comprehension and adaptive response generation.

- Two-Stage Training Process: Training consists of vision-language alignment followed by multimodal instruction tuning. In the first stage, HyperLLaVA splits the projector into static and dynamic layers; the dynamic layers use HyperNetworks to generate their parameters, guided by visual inputs. In the second stage, the LLM is equipped with a language expert module to improve instruction-specific comprehension.
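
To make the static-versus-dynamic split concrete, here is a minimal sketch of a projector with one fixed layer and one layer whose weights are generated by a hypernetwork from visual guidance. It is an illustration under stated assumptions, not the paper's implementation: the class names `HyperLinear` and `DynamicProjector`, the single-matrix hypernetwork, the residual connection, the choice of the raw visual feature as guidance, and the tiny dimensions are all hypothetical. Plain Python is used so the data flow is visible without a deep learning framework.

```python
import random


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def random_matrix(rows, cols, rng, scale=0.1):
    """Small random matrix standing in for learned parameters."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class HyperLinear:
    """Linear layer whose weight matrix is generated from a guidance vector.

    Assumption: the hypernetwork is a single linear map from the guidance
    vector to the flattened (out_dim x in_dim) weights of the target layer.
    """

    def __init__(self, guide_dim, in_dim, out_dim, rng):
        self.in_dim, self.out_dim = in_dim, out_dim
        # Parameters of the hypernetwork itself; these are what would be trained.
        self.generator = random_matrix(out_dim * in_dim, guide_dim, rng)

    def weights(self, guidance):
        """Generate the target layer's weights for this particular input."""
        flat = matvec(self.generator, guidance)
        return [flat[r * self.in_dim:(r + 1) * self.in_dim]
                for r in range(self.out_dim)]

    def __call__(self, x, guidance):
        return matvec(self.weights(guidance), x)


class DynamicProjector:
    """Static projection followed by a residual, input-conditioned layer."""

    def __init__(self, vis_dim, llm_dim, rng):
        # Static layer: the same weights are applied to every input.
        self.static = random_matrix(llm_dim, vis_dim, rng)
        # Dynamic layer: weights are regenerated for every input.
        self.dynamic = HyperLinear(vis_dim, llm_dim, llm_dim, rng)

    def __call__(self, visual_feature):
        h = matvec(self.static, visual_feature)
        # Assumption: the visual feature itself serves as the guidance signal.
        delta = self.dynamic(h, guidance=visual_feature)
        return [a + b for a, b in zip(h, delta)]


rng = random.Random(0)
projector = DynamicProjector(vis_dim=4, llm_dim=3, rng=rng)

image_a = [1.0, 0.0, 0.5, -0.5]
image_b = [0.0, 1.0, -0.5, 0.5]

out_a = projector(image_a)
out_b = projector(image_b)

# The dynamic layer's effective weights differ between the two inputs,
# while projector.static is shared by both.
weights_a = projector.dynamic.weights(image_a)
weights_b = projector.dynamic.weights(image_b)
print(weights_a != weights_b)
```

Under the same assumptions, a language expert could follow the same pattern, with the guidance vector taken from an intermediate LLM output rather than from the visual feature.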

Experimental Results

The paper provides a comprehensive evaluation of HyperLLaVA across multiple benchmarks, demonstrating its effectiveness:

- On 12 widely recognized benchmarks, HyperLLaVA consistently outperforms prior state-of-the-art methods, including its predecessor LLaVA and larger MLLMs such as Qwen-VL and IDEFICS-80B, which have significantly more parameters.

- Ablation studies show that each component of the HyperLLaVA framework matters: both the visual expert and the language expert contribute to the performance gains.

Implications and Future Prospects

HyperLLaVA provides a foundation for future multimodal AI systems by introducing adaptable expert modules that can be fine-tuned efficiently for a range of multimodal tasks. In practice, this lets researchers and developers tailor MLLMs dynamically to specific task requirements without incurring the high computational cost typically associated with retraining a large static model.

On the theoretical side, this work opens avenues for further exploration of dynamic model architectures and parameter generation tailored to multimodal problems. Using HyperNetworks to generate input-conditioned parameters could be extended to other domains that require adaptive responses to heterogeneous inputs.

In conclusion, HyperLLaVA demonstrates that dynamic tuning strategies can measurably improve MLLM performance, pointing toward more efficient and capable multimodal language comprehension systems. Future research could examine how well this adaptive methodology scales and whether it extends to broader applications that integrate visual and textual information.