Honeybee: Locality-enhanced Projector for Multimodal LLM

The paper "Honeybee: Locality-enhanced Projector for Multimodal LLM" addresses a critical yet underexplored component of Multimodal LLMs (MLLMs): the visual projector, which translates visual features from a vision encoder into tokens that an LLM can consume. The paper identifies two properties essential for an effective visual projector: flexibility in the number of visual tokens and preservation of local context. Based on these insights, the authors propose locality-enhanced projector designs built on convolution and deformable attention, and use them in Honeybee, an MLLM that satisfies both properties.

Key Contributions and Findings

- Identification of Essential Projector Properties:

The paper begins by identifying two properties essential to an effective visual projector:
  - Flexibility in determining the number of visual tokens, which is crucial for the computational efficiency of MLLMs.
  - Preservation of local context from visual features, which supports better spatial understanding.

- Proposal of Locality-enhanced Projectors:

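The authors introduce two novel projector designs:
  - C-Abstractor: uses convolutional blocks together with adaptive average pooling, so each output token summarizes a local neighborhood of the feature map while the number of tokens can be chosen freely.
  - D-Abstractor: uses deformable attention, in which each query aggregates features sampled at learned offsets around a reference point, preserving local context while adapting where it looks.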

Both designs aim to preserve spatial detail while keeping the number of visual tokens, and therefore the compute, under control.
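The two abstractors can be illustrated with a minimal, pure-Python sketch. It rests on strong simplifying assumptions: each feature is a scalar rather than a vector, the C-Abstractor's convolutional blocks are reduced to a single residual 3x3 convolution with a hand-picked kernel followed by adaptive average pooling, and for the D-Abstractor the sampling offsets and attention logits are passed in directly, whereas a real deformable-attention module would predict them from learned queries. All function names are hypothetical; this is not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple

Grid = List[List[float]]  # H x W grid of scalar "features" (a simplification)


def conv3x3_residual(grid: Grid, kernel: Sequence[Sequence[float]]) -> Grid:
    """out = x + conv3x3(x) with zero padding; stands in for the convolutional blocks."""
    h, w = len(grid), len(grid[0])
    out: Grid = []
    for r in range(h):
        row = []
        for c in range(w):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:  # zero padding at the border
                        acc += kernel[dr + 1][dc + 1] * grid[rr][cc]
            row.append(grid[r][c] + acc)
        out.append(row)
    return out


def adaptive_avg_pool2d(grid: Grid, out_h: int, out_w: int) -> Grid:
    """Average each output cell over a contiguous input window
    (bin edges floor(i*H/out) to ceil((i+1)*H/out), as in PyTorch)."""
    h, w = len(grid), len(grid[0])
    out: Grid = []
    for i in range(out_h):
        r0, r1 = (i * h) // out_h, -(-((i + 1) * h) // out_h)
        row = []
        for j in range(out_w):
            c0, c1 = (j * w) // out_w, -(-((j + 1) * w) // out_w)
            cells = [grid[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        out.append(row)
    return out


def c_abstractor(grid: Grid, kernel: Sequence[Sequence[float]], m_side: int) -> Grid:
    """Hypothetical simplified C-Abstractor: local mixing, then pooling to m_side x m_side tokens."""
    return adaptive_avg_pool2d(conv3x3_residual(grid, kernel), m_side, m_side)


def bilinear_sample(grid: Grid, y: float, x: float) -> float:
    """Bilinearly interpolate grid at fractional (y, x); out-of-range neighbors count as zero."""
    h, w = len(grid), len(grid[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                total += (1 - abs(y - yy)) * (1 - abs(x - xx)) * grid[yy][xx]
    return total


def deformable_query(grid: Grid, ref: Tuple[float, float],
                     offsets: Sequence[Tuple[float, float]], logits: Sequence[float]) -> float:
    """One D-Abstractor-style query: softmax(logits)-weighted sum of features sampled
    at ref + offset. Offsets and logits would normally be predicted from the query."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]  # numerically stable softmax
    z = sum(exps)
    return sum((e / z) * bilinear_sample(grid, ref[0] + dy, ref[1] + dx)
               for e, (dy, dx) in zip(exps, offsets))


feature_map = [[4.0 * r + c for c in range(4)] for r in range(4)]
print(c_abstractor(feature_map, [[0.0] * 3] * 3, m_side=2))  # [[2.5, 4.5], [10.5, 12.5]]
```

In both functions each output depends only on a neighborhood of the input grid, and the number of outputs (`m_side ** 2` pooled cells, or one value per reference point) is a free choice, which mirrors the two properties the paper identifies.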

- Extensive Evaluation and Benchmarking:

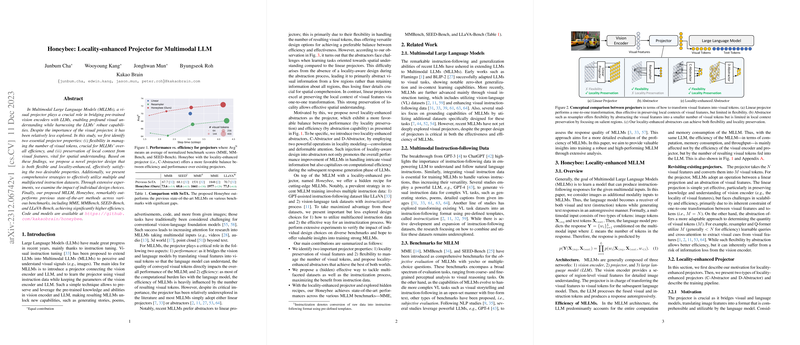

Honeybee was evaluated on a range of benchmarks, including MME, MMBench, SEED-Bench, and LLaVA-Bench.
  - Honeybee outperformed previous state-of-the-art methods across these benchmarks.
  - The gains were especially notable on tasks requiring fine-grained spatial understanding, which the authors attribute to the locality-enhanced projector.

- Comprehensive Training and Instruction Tuning: A central part of the paper examines how to train with a mixture of diverse instruction datasets. The authors present strategies for effectively exploiting heterogeneous visual instruction data, which is crucial for improving the robustness and capabilities of MLLMs.
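One common way to combine heterogeneous datasets, and one plausible reading of the "dataset balancing" choice, is to draw each training example by first picking a dataset according to a per-dataset weight, so that small datasets are not drowned out by large ones. The sketch below assumes that scheme; the function name, weights, and toy datasets are hypothetical, and the paper's actual mixing ratios are not reproduced here.

```python
import random
from typing import Dict, List, Sequence, Tuple


def sample_mixture(
    datasets: Dict[str, Sequence[str]],
    weights: Dict[str, float],
    num_samples: int,
    seed: int = 0,
) -> List[Tuple[str, str]]:
    """Draw (dataset_name, example) pairs, picking each dataset with probability
    proportional to its weight rather than to its size."""
    rng = random.Random(seed)  # seeded for reproducibility
    names = list(datasets)
    picks = rng.choices(names, weights=[weights[n] for n in names], k=num_samples)
    # Within a dataset, examples are drawn uniformly with replacement.
    return [(name, rng.choice(datasets[name])) for name in picks]


# Hypothetical toy data: a large VQA set and a small captioning set.
toy = {
    "vqa": [f"vqa-{i}" for i in range(1000)],
    "caption": [f"cap-{i}" for i in range(10)],
}
batch = sample_mixture(toy, {"vqa": 1.0, "caption": 1.0}, num_samples=2000)
share = sum(name == "caption" for name, _ in batch) / len(batch)
print(f"caption share: {share:.2f}")  # near 0.5, although captions are ~1% of the examples
```

Sampling by weight decouples how often a dataset is seen from how large it is; the weights then become the knob that a balancing study would tune.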

- Hidden Recipe for Effective Training: The paper also examines several subtle design choices, including dataset balancing, template granularity and diversity, and multi-turn examples with de-duplication. Together, these choices contribute to effective training of MLLMs.
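Multi-turn examples with de-duplication can be sketched as follows, assuming that single-turn question-answer pairs about the same image are merged into one conversation and that a "duplicate" is a repeated question about the same image, matched case-insensitively after stripping whitespace. A plausible motivation, also an assumption here, is that repeated questions let the model copy an answer from an earlier turn instead of looking at the image. The function name and matching rule are hypothetical, not the paper's exact procedure.

```python
from typing import Dict, List, Tuple

QA = Tuple[str, str, str]  # (image_id, question, answer)


def build_multiturn(samples: List[QA], max_turns: int) -> List[Dict[str, object]]:
    """Group single-turn QA pairs by image into multi-turn conversations,
    dropping repeated questions about the same image."""
    by_image: Dict[str, List[Tuple[str, str]]] = {}  # dicts keep insertion order
    seen = set()
    for image_id, question, answer in samples:
        # Assumed notion of "duplicate": same image and same normalized question.
        key = (image_id, question.strip().lower())
        if key in seen:
            continue
        seen.add(key)
        by_image.setdefault(image_id, []).append((question, answer))

    conversations: List[Dict[str, object]] = []
    for image_id, turns in by_image.items():
        # Split long groups so no conversation exceeds max_turns.
        for start in range(0, len(turns), max_turns):
            conversations.append({"image": image_id, "turns": turns[start:start + max_turns]})
    return conversations


data = [
    ("img1", "What color is the car?", "Red."),
    ("img1", "what color is the car? ", "Red."),  # duplicate after normalization
    ("img1", "How many people are there?", "Two."),
    ("img2", "Where was this taken?", "A park."),
]
for conv in build_multiturn(data, max_turns=4):
    print(conv["image"], len(conv["turns"]))  # img1 2, then img2 1
```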

Numerical Results

The Honeybee models show significant improvements on several benchmarks:
  - On MMBench, Honeybee-7B with C-Abstractor achieved an accuracy of 70.1, outperforming previous models such as LLaVA-1.5.
  - Honeybee-13B with C-Abstractor achieved 77.5 in , and N=1730, which are notable jumps in performance over existing models.

Practical and Theoretical Implications

The introduction of locality-enhanced projectors has substantial implications for the development of MLLMs:
  - Efficiency Gains: Because the number of visual tokens can be chosen independently of the vision encoder's feature-map size, the LLM can process fewer visual tokens, which reduces compute and can make deployment in resource-constrained environments more practical.
  - Improved Understanding: Better preservation of local context improves the model's ability to understand and reason about spatial relationships in visual data, benefiting tasks such as visual question answering and scene understanding.
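The efficiency argument can be made concrete with a back-of-the-envelope count. The sketch assumes a CLIP ViT-L/14 encoder at 336x336 input (24x24 = 576 patches), a 64-token text prompt, and an abstractor configured to emit M = 144 tokens; these numbers are illustrative assumptions, not Honeybee's reported configuration. It counts query-key pairs in full self-attention, which grow quadratically with sequence length.

```python
from typing import Dict


def visual_tokens_linear(image_size: int, patch_size: int) -> int:
    """A linear/MLP projector emits one token per patch, so the count is fixed by the encoder."""
    side = image_size // patch_size
    return side * side


def sequence_cost(num_visual: int, num_text: int) -> Dict[str, int]:
    """LLM input length and the number of query-key pairs in full self-attention."""
    length = num_visual + num_text
    return {"length": length, "attention_pairs": length * length}


linear = sequence_cost(visual_tokens_linear(336, 14), num_text=64)  # 576 visual tokens
abstractor = sequence_cost(144, num_text=64)  # M = 144, chosen freely by the projector
print(linear)      # {'length': 640, 'attention_pairs': 409600}
print(abstractor)  # {'length': 208, 'attention_pairs': 43264}
print(abstractor["attention_pairs"] / linear["attention_pairs"])  # 0.105625
```

Components whose cost is linear in sequence length, such as the feed-forward layers, shrink in proportion to the token count (640 to 208 here), while the attention term shrinks roughly tenfold; causal masking roughly halves both pair counts and leaves the ratio nearly unchanged.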

Speculations on Future Developments

Looking forward, the methods and insights from this paper could catalyze several developments:
  - Advanced Projector Designs: More sophisticated architectures, or hybrids combining convolution and deformable attention, could further improve visual comprehension in MLLMs.
  - Unified Multimodal Understanding: Extending these projectors to other modalities (e.g., video, 3D data) could lead to more comprehensive and versatile models.
  - Efficiency in Deployment: The focus on efficiency might inspire more practical MLLM deployments in real-world applications, from autonomous driving to augmented reality systems.

In conclusion, Honeybee represents a significant advance in the design and use of visual projectors in MLLMs. By carefully balancing flexibility and locality, it sets a new standard in multimodal understanding while remaining computationally feasible. Its training recipe for combining diverse instruction datasets further strengthens its contributions, offering a practical guide for training future multimodal systems.