VideoAgent: A Novel Agent-Based Approach for Long-Form Video Understanding

Introduction

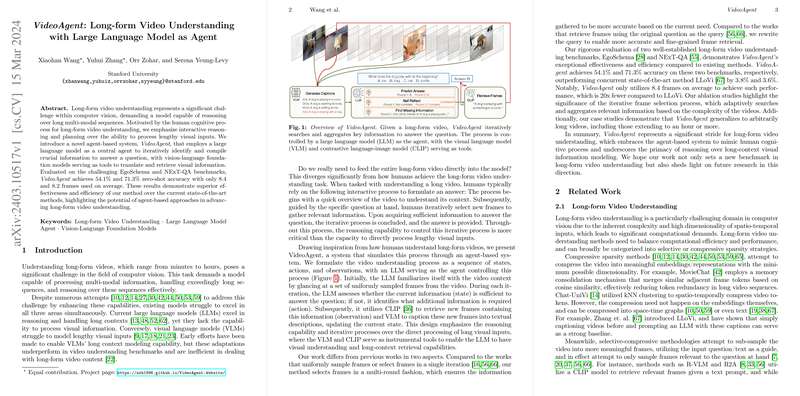

VideoAgent takes an agent-based approach to long-form video understanding, with a large language model (LLM) acting as the central agent. The system uses vision-language foundation models as tools, which lets the LLM reason and plan interactively to locate the information needed to answer questions about a video. The approach achieves strong accuracy on the EgoSchema and NExT-QA benchmarks while processing only a small number of frames per video.

Approach

The core idea behind VideoAgent is that understanding a long-form video is better treated as an interactive, iterative reasoning process than as bulk processing of a long stream of visual information. The method is formulated as a sequence of states, actions, and observations, with the LLM acting as the agent that directs the process.

The approach involves:

- Initial State Learning: The system first builds a broad overview of the video from several uniformly sampled frames, which are described by vision-language models (VLMs).

- Action Decision: Based on the current state, the LLM decides whether it has enough information to answer the question or if it needs to search for more information.

- Observation Through Iteration: If more information is needed, the system specifies what is still missing and uses tools such as CLIP to retrieve new frames relevant to that request.

- State Update: The new frames are described and their descriptions are added to the current state, after which the process loops back to the action decision stage if necessary.
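
The retrieval step in the list above can be illustrated with a small, dependency-free sketch. It assumes the query text and every frame have already been embedded into a shared vector space (for example by CLIP's text and image encoders) and simply ranks unseen frames by cosine similarity to the query. The function names, the `seen` set, and the top-`k` selection are illustrative assumptions, not details taken from VideoAgent.

```python
import math
from typing import Dict, List, Sequence, Set


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_frames(
    query_emb: Sequence[float],
    frame_embs: Dict[int, Sequence[float]],
    seen: Set[int],
    k: int = 1,
) -> List[int]:
    """Return the indices of the k unseen frames most similar to the query.

    query_emb and frame_embs are assumed to be precomputed embeddings in
    the same space (hypothetical inputs; no real model is called here).
    """
    scored = [
        (cosine(query_emb, emb), idx)
        for idx, emb in frame_embs.items()
        if idx not in seen
    ]
    # Highest similarity first; ties are broken by the lower frame index.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [idx for _, idx in scored[:k]]
```

Excluding frames that are already in the state keeps each round from spending a caption on a frame the agent has seen before.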

This iterative, agent-based approach makes the search for information efficient and targeted, sharply reducing the amount of visual data processed while maintaining or even improving the quality of comprehension.
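
The state, action, and observation cycle described in this section can be sketched as a short control loop. This is a minimal illustration under stated assumptions, not the authors' implementation: `caption`, `decide`, and `retrieve` are hypothetical callables standing in for the VLM, the LLM, and a CLIP-style retriever, and the `n_initial` and `max_rounds` parameters are invented here to bound the loop.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

# All names below are hypothetical; none are taken from the VideoAgent code.
Decision = Tuple[Optional[str], Optional[str]]  # (answer, search query)


def uniform_sample(num_frames: int, n: int) -> List[int]:
    """Pick up to n frame indices spread evenly over [0, num_frames - 1]."""
    if num_frames <= 0 or n <= 0:
        return []
    n = min(n, num_frames)
    if n == 1:
        return [num_frames // 2]
    step = (num_frames - 1) / (n - 1)
    return sorted({round(i * step) for i in range(n)})


def run_agent(
    question: str,
    num_frames: int,
    caption: Callable[[int], str],
    decide: Callable[[str, Dict[int, str], bool], Decision],
    retrieve: Callable[[str, Set[int]], List[int]],
    n_initial: int = 5,
    max_rounds: int = 3,
) -> Tuple[str, List[int]]:
    """Return (answer, sorted indices of every frame that was captioned)."""
    # Initial state: captions of uniformly sampled frames.
    state: Dict[int, str] = {
        i: caption(i) for i in uniform_sample(num_frames, n_initial)
    }

    for _ in range(max_rounds):
        # Action decision: answer now, or ask for more information.
        answer, query = decide(question, state, False)
        if answer is not None:
            return answer, sorted(state)
        # Observation: fetch frames for the query, skipping ones already seen.
        candidates = retrieve(query or question, set(state))
        new_frames = [i for i in candidates if i not in state]
        if not new_frames:
            break
        # State update: caption the new frames and add them to the state.
        for i in new_frames:
            state[i] = caption(i)

    # Round budget exhausted or nothing new to look at: force a final answer.
    answer, _ = decide(question, state, True)
    return answer or "", sorted(state)
```

In this sketch the number of captioned frames grows only when the decision step asks for more evidence, which is how the loop keeps the total frame count small.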

Empirical Evaluation

VideoAgent is evaluated on two challenging benchmarks, EgoSchema and NExT-QA. It achieves 54.1% and 71.3% zero-shot accuracy on these benchmarks respectively, outperforming prior state-of-the-art methods while reducing the number of frames processed to an average of just over 8 per video. These results attest not only to the method's efficiency but also to its ability to scale to longer videos without compromising performance.

Implications and Speculations

The methodology adopted by VideoAgent points to a path forward for long-form video understanding, one that emphasizes reasoning and interactivity over brute-force processing. The efficiency gains suggest potential applications where computational resources are limited or rapid video analysis is required. Looking ahead, integrating more sophisticated reasoning capabilities and further optimizing the iterative process could enable deeper understanding and applications to more complex video analysis tasks.

Moreover, the framework has implications beyond video understanding: it suggests a generalizable approach to problems that involve large, complex input spaces. Future work could explore the applicability of such agent-based models in broader contexts, including real-time surveillance, interactive media analysis, and automated content generation.

Conclusions

VideoAgent represents a significant advance in computer vision, particularly in understanding long-form video content. By applying the reasoning capabilities of LLMs within an iterative, agent-based framework, VideoAgent sets a new standard for efficiency and accuracy in this area and opens the door to novel approaches in AI and machine learning research and applications.