Hierarchical Acoustic Modeling for Enhanced Text-to-Speech Synthesis

Introduction

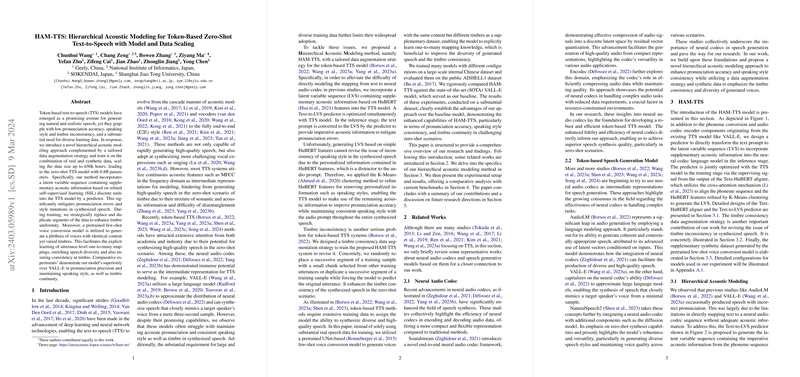

Efforts to make synthesized speech more natural have driven many advances in text-to-speech (TTS) technology. Token-based TTS models are a promising route to high-quality, natural speech, but they still suffer from low pronunciation accuracy, inconsistent speaking style and timbre, and a need for large, diverse training sets. To address these problems, we look at Hierarchical Acoustic Modeling for Token-Based Zero-Shot Text-to-Speech with Model and Data Scaling (HAM-TTS). The method combines a refined modeling framework with a data augmentation strategy, scales training to 650k hours of combined real and synthetic data, and is reported to improve pronunciation accuracy while keeping speaking style and timbre consistent.

Hierarchical Acoustic Modeling in HAM-TTS

The hierarchical acoustic modeling (HAM) method at the core of HAM-TTS adds a Latent Variable Sequence (LVS) that carries supplementary acoustic information derived from refined self-supervised learning (SSL) discrete units. The TTS model incorporates the LVS through a predictor. HAM targets three problems:

- Pronunciation Accuracy: The LVS supplies additional acoustic cues, which substantially reduces pronunciation errors.

- Speaking Style Consistency: K-Means clustering refines the HuBERT features by removing personalized information, so that the speaking style of the output stays consistent with the audio prompt.

- Timbre Consistency: A data augmentation strategy developed for HAM-TTS improves the consistency of timbre across the synthesized speech.
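The K-Means refinement step can be illustrated with a minimal sketch. It assumes that "refining" means replacing each frame-level feature vector with its nearest cluster centroid, so that fine-grained, speaker-specific variation is discarded. That reading, the function names, and the toy 2-D vectors standing in for HuBERT features are all assumptions made for illustration; this is not the authors' implementation.

```python
import random
from typing import List, Sequence

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid(frame: Sequence[float], centroids: List[Vector]) -> int:
    """Index of the centroid closest to the frame (ties go to the lowest index)."""
    return min(range(len(centroids)),
               key=lambda i: squared_distance(frame, centroids[i]))


def fit_kmeans(frames: List[Vector], k: int,
               iterations: int = 20, seed: int = 0) -> List[Vector]:
    """Plain Lloyd's algorithm; initial centroids are k randomly sampled frames."""
    rng = random.Random(seed)
    centroids = [list(f) for f in rng.sample(frames, k)]
    for _ in range(iterations):
        buckets: List[List[Vector]] = [[] for _ in range(k)]
        for frame in frames:
            buckets[nearest_centroid(frame, centroids)].append(frame)
        for i, bucket in enumerate(buckets):
            if bucket:  # an empty cluster keeps its previous centroid
                centroids[i] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids


def refine_features(frames: List[Vector], centroids: List[Vector]) -> List[Vector]:
    """Replace every frame with a copy of its nearest centroid (assumed refinement)."""
    return [list(centroids[nearest_centroid(f, centroids)]) for f in frames]


# Toy stand-in for frame-level HuBERT features (real features are high-dimensional).
toy_frames = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]]
toy_centroids = fit_kmeans(toy_frames, k=2)
toy_refined = refine_features(toy_frames, toy_centroids)
```

After refinement, frames that fall in the same cluster become identical vectors, which is the sense in which individual variation is removed. A production system would more likely use a library K-Means implementation over real HuBERT outputs.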

Training with Real and Synthetic Data

HAM-TTS is trained on a blend of real and synthetic data. The combination broadens the diversity of speech samples and improves the model’s ability to keep timbre and speaking style consistent. The synthetic portion is produced with a pretrained few-shot voice conversion model, which generates many voices that share the same content but differ in timbre, giving the model a wider range of training samples.
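The augmentation idea can be sketched as follows: each real utterance is re-rendered in several other voices, keeping the text fixed and changing only the speaker. Everything named here (`Utterance`, `augment_with_voice_conversion`, `placeholder_convert`, the `voices_per_utterance` parameter) is hypothetical, and the conversion function is a stub that merely copies the audio; a real pipeline would call a pretrained voice conversion model at that point.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Utterance:
    text: str
    audio: List[float]      # waveform samples; a toy stand-in here
    speaker: str
    synthetic: bool = False


def placeholder_convert(audio: List[float], target_speaker: str) -> List[float]:
    """Hypothetical stub: a real system would run a voice conversion model here."""
    return list(audio)


def augment_with_voice_conversion(
    utterances: List[Utterance],
    target_speakers: List[str],
    convert: Callable[[List[float], str], List[float]],
    voices_per_utterance: int,
    seed: int = 0,
) -> List[Utterance]:
    """Return the real utterances followed by converted copies in other voices."""
    rng = random.Random(seed)
    combined = list(utterances)
    for utt in utterances:
        # Never "convert" an utterance into its own speaker.
        candidates = [s for s in target_speakers if s != utt.speaker]
        count = min(voices_per_utterance, len(candidates))
        for target in rng.sample(candidates, count):
            combined.append(
                Utterance(utt.text, convert(utt.audio, target), target, synthetic=True)
            )
    return combined


real = [Utterance("hello", [0.1, 0.2], "spk_a"), Utterance("bye", [0.3], "spk_b")]
mixed = augment_with_voice_conversion(
    real, ["spk_a", "spk_b", "spk_c"], placeholder_convert, voices_per_utterance=2
)
```

Keeping the `synthetic` flag on each record makes it straightforward to control or study the ratio of real to synthetic data later.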

Experimental Evaluation

Comparative experiments evaluated HAM-TTS against VALL-E, a state-of-the-art baseline model. HAM-TTS achieved higher pronunciation accuracy and kept speaking style and timbre consistent across a range of zero-shot scenarios. The results support the three ingredients of the approach: hierarchical acoustic modeling, feature refinement through K-Means clustering, and the use of synthetic training data.
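This summary does not state which metric was used to measure pronunciation accuracy. A common choice in TTS evaluation is the character error rate (CER) between the input text and an ASR transcript of the synthesized audio, and the sketch below assumes that choice purely for illustration. The function names are hypothetical, and the ASR step is omitted: the transcript is assumed to be already available as a string.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            current.append(min(
                previous[j] + 1,                           # deletion
                current[j - 1] + 1,                        # insertion
                previous[j - 1] + (ref_char != hyp_char),  # substitution or match
            ))
        previous = current
    return previous[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance normalized by the reference length."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


print(character_error_rate("kitten", "sitting"))  # 0.5 (3 edits over 6 characters)
```

A lower CER means the ASR system recovered the intended text more faithfully, which is taken as a proxy for clearer pronunciation.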

Conclusion and Future Developments

HAM-TTS and its hierarchical acoustic modeling approach mark a significant step forward for text-to-speech synthesis. Supplementary acoustic information delivered through the LVS, together with the data augmentation strategy, reduces pronunciation errors while keeping speaking style and timbre consistent. Future research could examine how best to compose the synthetic data, in particular the trade-off between the number of speakers and the amount of speech per speaker. Faster inference would also make the model more practical for real-time use.

Ethical Considerations and Limitations

HAM-TTS advances the capabilities of text-to-speech systems, but it also raises ethical questions, particularly around potential misuse and privacy. Generating synthetic training data by imitating voices raises concerns about authenticity and about the consent of the speakers being imitated. As the system is scaled and deployed, it should also be assessed on an ongoing basis to identify and mitigate biases and to support equitable technology development.

In summary, HAM-TTS combines hierarchical acoustic modeling with large-scale real and synthetic training data, an approach that could benefit interactive technologies and digital communication platforms.