StyleTTS: Enhancing Text-to-Speech with Style-Based Generative Models

Introduction

Text-to-Speech (TTS) synthesis has advanced considerably, yet matching the variability of human speech in prosody, emotion, and style remains challenging. The paper "StyleTTS: A Style-Based Generative Model for Natural and Diverse Text-to-Speech Synthesis" addresses this gap. The proposed model, StyleTTS, is a style-based generative model that learns speaking styles through self-supervised learning and pairs this with a new alignment mechanism to improve the naturalness and diversity of synthesized speech.

Model Design and Components

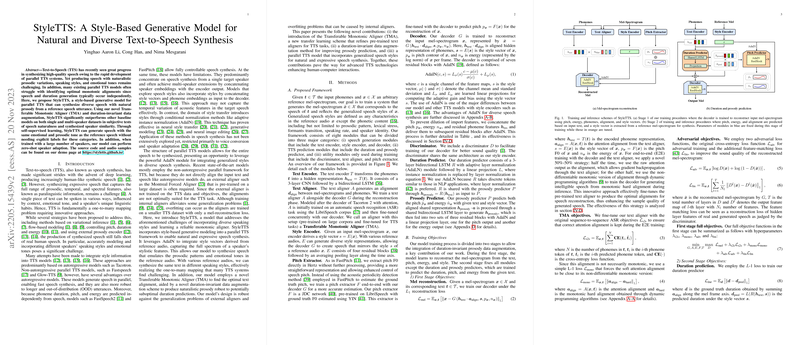

StyleTTS is built around three components: a Transferable Monotonic Aligner (TMA), duration-invariant data augmentation, and adaptive instance normalization (AdaIN) for style integration:

- Transferable Monotonic Aligner (TMA): This component refines a pre-trained aligner to find the alignment between the input text and the speech, which supports robust parallel synthesis from that text.

- Duration-Invariant Data Augmentation: A data augmentation technique that keeps prosody consistent when durations vary, improving the model's generalization to durations not seen during training.

- AdaIN for Style Integration: StyleTTS applies AdaIN to synthesize speech with styles derived from reference audio, allowing the model to emulate the prosodic patterns and emotional tones present in these references.
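To make the AdaIN bullet concrete, here is a minimal sketch of the standard AdaIN operation: each channel of a feature map is normalized to zero mean and unit variance over time, then rescaled and shifted by style-dependent values. The function name `adain` and the `gain` and `bias` inputs are illustrative assumptions. In a trained model they would come from a learned projection of the style vector, and this summary does not specify the exact layer StyleTTS uses.

```python
import math


def adain(features, gain, bias, eps=1e-5):
    """Apply adaptive instance normalization to a [channels][time] feature map.

    gain[c] and bias[c] are hypothetical stand-ins for the per-channel scale
    and shift that a learned projection of the style vector would produce.
    """
    out = []
    for channel, g, b in zip(features, gain, bias):
        mean = sum(channel) / len(channel)
        var = sum((v - mean) ** 2 for v in channel) / len(channel)
        std = math.sqrt(var + eps)
        # Normalize away the channel's own statistics, then impose the style's.
        out.append([g * (v - mean) / std + b for v in channel])
    return out


# Toy feature map with 2 channels and 4 time steps (made-up values).
features = [[1.0, 2.0, 3.0, 4.0], [10.0, 10.5, 9.5, 10.0]]
styled = adain(features, gain=[2.0, 0.5], bias=[0.0, -1.0])
```

After the call, each output channel has a mean equal to its `bias` and a standard deviation close to its `gain`, regardless of the statistics of the input. This is how a style vector can steer the characteristics of the generated features.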

Together, these components yield a model that generates expressive, human-like speech in diverse styles and supports robust zero-shot speaker adaptation.
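The idea behind duration-invariant augmentation can be pictured with a small time-stretching sketch. This summary states only the goal (prosody that stays consistent when durations change), so the approach below, resampling a per-frame sequence by linear interpolation, is an assumption for illustration and not necessarily the paper's procedure. The name `stretch_time` and the sample pitch values are hypothetical.

```python
def stretch_time(frames, new_len):
    """Resample a per-frame sequence to new_len frames by linear interpolation."""
    if new_len < 1 or not frames:
        raise ValueError("need a non-empty sequence and new_len >= 1")
    if new_len == 1 or len(frames) == 1:
        return [frames[0]] * new_len
    # Map output index i onto a fractional position in the input sequence.
    scale = (len(frames) - 1) / (new_len - 1)
    out = []
    for i in range(new_len):
        pos = i * scale
        lo = min(int(pos), len(frames) - 2)
        frac = pos - lo
        out.append(frames[lo] * (1 - frac) + frames[lo + 1] * frac)
    return out


# Made-up pitch contour in Hz, stretched (slower) and compressed (faster).
pitch = [100.0, 120.0, 140.0, 120.0]
slow = stretch_time(pitch, 7)
fast = stretch_time(pitch, 3)
```

Both resampled contours keep the same start, peak, and end values as the original. Training on stretched and compressed versions of the same utterance is one way a model could be exposed to the same prosodic shape at different durations.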

Experimental Validation

The paper evaluates the model with both subjective and objective measures:

- Subjective Evaluations: StyleTTS surpasses baseline models, including FastSpeech 2 and VITS, in naturalness and speaker similarity in both single-speaker and multi-speaker setups. Its high mean opinion scores (MOS) indicate that listeners rate its speech as more natural.

- Objective Metrics: Acoustic features of the synthesized speech correlate strongly with those of the reference audio, and the model is more robust to variations in text length than the baselines.
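The correlation-based objective metric above can be sketched as a Pearson correlation between a feature measured on each reference utterance and the same feature measured on the corresponding synthesized utterance. Mean pitch is used here as an example feature. The numbers are made up for illustration and are not results from the paper, and the helper name `pearson` is an assumption.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


# Made-up per-utterance mean pitch (Hz): reference audio vs. synthesized audio.
ref_pitch_mean = [110.0, 180.0, 145.0, 210.0, 95.0]
syn_pitch_mean = [118.0, 171.0, 150.0, 198.0, 104.0]
r = pearson(ref_pitch_mean, syn_pitch_mean)
```

A value of `r` near 1 means the synthesized utterances track the reference utterances on that feature. The same computation can be repeated for other features such as pitch variation or energy.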

Implications and Future Directions

StyleTTS sets a precedent for integrating style in TTS systems, bridging gaps between speech synthesis and style transfer. The robustness and adaptability of StyleTTS, combined with its capability for zero-shot speaker adaptation, open avenues for practical applications like personalized digital assistants, voiceovers in media, and more sophisticated human-computer interaction systems.

Future research could extend StyleTTS's framework to broader linguistic contexts and explore its potential in multilingual TTS applications. Further study of the model's ability to adapt to extreme speech variations and emotions without explicit labels would also be beneficial. As AI and TTS technologies evolve, StyleTTS offers a foundation for further advances in expressive, style-rich speech synthesis.