Enhancing Mathematical Capabilities of 7B LLMs with Synthetic Data Scaling

Introduction

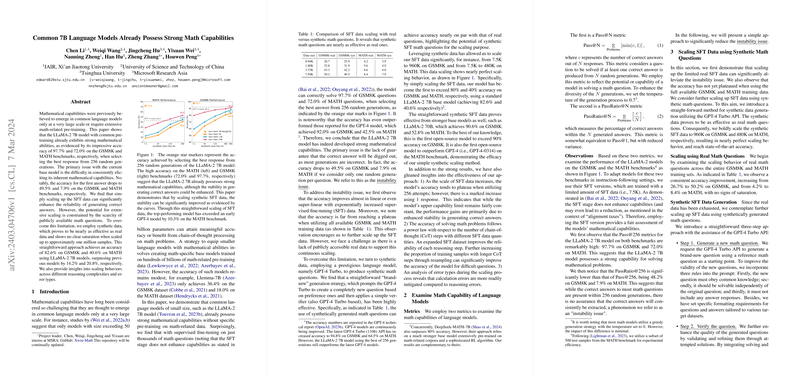

Emergent capabilities in LLMs, particularly concerning mathematical reasoning, have traditionally been associated with large-scale models exceeding tens of billions of parameters. Recent studies suggested that meaningful performance on mathematical benchmarks could only be achieved with such gargantuan models or those specifically trained on extensive mathematical corpora. However, this paper challenges that notion by demonstrating the inherent mathematical capabilities of a comparatively smaller 7B parameter model, LLaMA-2 7B, without resorting to math-centric pre-training. The paper’s critical insight revolves around the concept that the fundamental issue with existing models is not the lack of capability but the instability in consistently generating correct solutions. The authors propose a solution leveraging synthetic data, showing that it remarkably enhances performance on two major mathematical benchmarks: GSM8K and MATH.

Understanding Mathematical Capabilities in LLaMA-2 7B

The authors' exploration begins with an analysis of the LLaMA-2 7B model's performance on the GSM8K and MATH benchmarks. They employ two metrics for evaluation: Pass@N and PassRatio@N. These metrics reveal an intriguing aspect of the model's behavior; while exhibiting high potential capabilities (Pass@256), the model's inconsistency in producing correct answers on the first attempt (PassRatio@256) indicates an instability issue. Remarkably, when allowed to choose the best answer from 256 trials, the model's accuracy surpasses that of its contemporaries on GSM8K and showcases competitive performance on MATH.

Synthetic Data Scaling to Mitigate Instability

The paper posits that the instability issue can be significantly mitigated by scaling supervised fine-tuning (SFT) data. This assertion is grounded in observations that increasing SFT data leads to linear, or super-linear, improvements in accuracy without saturation. Given the limitation of accessible real math questions for further scaling, the authors turn to synthetic question generation as a solution, harnessing the GPT-4 Turbo model. This approach not only circumvents the scarcity of real questions but also proves nearly as effective, indicating the synthetic data's high quality and relevance.

The authors conduct extensive experiments, scaling SFT data up to approximately one million samples. These experiments illustrate that such scaling directly correlates with marked improvements in the model’s performance, achieving state-of-the-art accuracy on the GSM8K and MATH benchmarks with a 7B model. This outcome firmly establishes that the so-called instability issue can be substantially reduced through the strategic scaling of SFT data.

Implications and Future Directions

This paper's implications extend beyond just improving mathematical abilities in LLMs. It provides a compelling argument against the necessity for extremely large models or specifically pre-trained models to achieve high performance in domain-specific tasks. Instead, it showcases the potential of leveraging synthetic data to uncover and enhance the capabilities of existing models.

Looking forward, the synthetic SFT data scaling approach opens new avenues for research and development across various domains, encouraging a reevaluation of how we perceive and unlock the potential of LLMs. With synthetic data proving to be a valuable resource for model training, future work might explore its application in other specialized areas beyond mathematics, promising further breakthroughs in AI research and applications.

In conclusion, this paper’s exploration into enhancing the mathematical capabilities of the LLaMA-2 7B model via synthetic data scaling not only challenges existing beliefs about model training and capabilities but also sets a precedent for future research in leveraging synthetic data to maximize the potential of LLMs across diverse domains.