Exploring the Legal Frontier: Insights from the \ourmodel{} LLM

Introduction

The landscape of artificial intelligence and language modeling has seen significant advancements, yet the legal domain has often remained on the periphery of these technological leaps. To address this gap, the recently developed \ourmodel{} tailors LLMs to the intricacies of legal text comprehension and generation. The initiative focuses on improving legal document processing and also aims to contribute to the broader application of LLMs within the field of law.

The \ourmodel{} Family and Its Innovations

A Tailored Approach to Legal Language

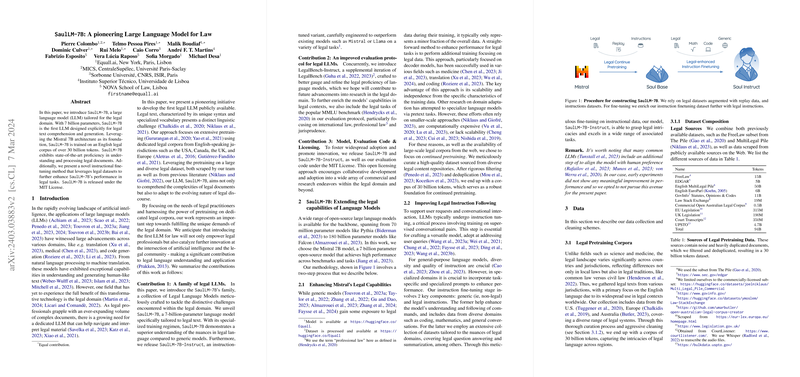

At its core, \ourmodel{} is built upon the Mistral 7B architecture and is further pre-trained on a corpus of over 30 billion tokens curated specifically from the legal domain. This dedicated approach is meant to let \ourmodel{} handle legal jargon and syntax more effectively than its generalist counterparts. In addition, \ourmodelift{}, an instruction-tuned variant of \ourmodel{}, targets better performance on legal tasks by incorporating both generic and customized legal instructions during its fine-tuning phase.

Enhanced Evaluation Protocols

The paper also introduces \legalbench{}, a refined benchmark intended to offer more nuanced insights into the legal proficiency of LLMs. This benchmark, supplemented by tasks from the popular MMLU benchmark, provides a more focused basis for evaluating the capabilities of legal LLMs and for guiding further work in the domain.

Diving Deeper: Training and Data Considerations

The Training Journey

The development of \ourmodel{} involved a two-step training process. First, a substantial pre-training phase grounded the model in legal text, using a diverse array of legal documents from multiple jurisdictions. Second, the model underwent an instruction fine-tuning phase, which reinforced its ability to follow domain-specific instructions and honed its comprehension skills through a mix of general and legal-specific training data.
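To make the idea of mixing general and legal-specific instruction data concrete, here is a minimal Python sketch. It is not the authors' pipeline: the function name `build_instruction_mix`, the `legal_fraction` parameter, and the example record layout are all hypothetical, and the actual mixing ratio is not stated in this summary.

```python
import random


def build_instruction_mix(generic, legal, legal_fraction=0.5, size=None, seed=0):
    """Sample a shuffled fine-tuning set with a target share of legal examples.

    Hypothetical helper for illustration; the real mixing ratio is an assumption.
    """
    if not 0.0 <= legal_fraction <= 1.0:
        raise ValueError("legal_fraction must be between 0 and 1")
    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    if size is None:
        size = len(generic) + len(legal)
    # Cap each share by what is actually available in its pool.
    n_legal = min(len(legal), round(size * legal_fraction))
    n_generic = min(len(generic), size - n_legal)
    mix = rng.sample(legal, n_legal) + rng.sample(generic, n_generic)
    rng.shuffle(mix)  # interleave the two sources
    return mix


# Toy records; real instruction data would carry full prompts and answers.
generic_examples = [{"source": "generic", "id": i} for i in range(10)]
legal_examples = [{"source": "legal", "id": i} for i in range(10)]
mix = build_instruction_mix(generic_examples, legal_examples,
                            legal_fraction=0.25, size=8)
```

Keeping the legal share as an explicit parameter makes it straightforward to run ablations over different mixes, which is one way the balance between general instruction-following and legal specialization could be studied.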

Data Collection and Curation

A cornerstone of \ourmodel{}'s development was the careful assembly of its training corpus. Drawing from an array of legal texts and documents, the team carried out an extensive data collection and cleaning operation. The final dataset, comprising over 30 billion tokens, was derived from both publicly accessible legal information and strategically selected sources, ensuring a wide representation of legal knowledge and contexts.
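The summary does not spell out the individual cleaning steps, so the following Python sketch only illustrates two filters that are common in corpus curation: whitespace normalization with a minimum-length cutoff, and exact deduplication by content hash. The function names and the `min_chars` threshold are assumptions made for illustration, not details taken from the paper.

```python
import hashlib
import re


def normalize(text):
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return re.sub(r"\s+", " ", text).strip()


def clean_corpus(documents, min_chars=200):
    """Drop very short documents and exact duplicates (case-insensitive).

    Illustrative only; min_chars is an assumed threshold.
    """
    seen = set()
    kept = []
    for doc in documents:
        text = normalize(doc)
        if len(text) < min_chars:
            continue  # too short to be a useful training document
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # same content already kept
        seen.add(digest)
        kept.append(text)
    return kept


docs = [
    "The  court held\nthat X.",   # kept after whitespace normalization
    "the court held that x.",     # duplicate of the first, ignoring case
    "short",                      # below the length threshold
]
cleaned = clean_corpus(docs, min_chars=10)
```

Exact hashing only catches identical text after normalization; near-duplicate detection (for example, with shingling or MinHash) would be a separate step.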

The Implications and Prospects of \ourmodel{}

Practical and Theoretical Contributions

From a practical standpoint, \ourmodel{} and \ourmodelift{} stand to significantly benefit legal professionals by providing a tool adept in handling the complexity of legal documents. Theoretical contributions, on the other hand, stem from the strides made in adapting LLMs to domain-specific requirements, thereby expanding our understanding of LLM training and application.

Future Horizons

The release of \ourmodel{} under an open license promotes widespread usage and experimentation and invites further research and development within the legal AI domain. As this field continues to evolve, future work might explore improved instruction fine-tuning methods, the integration of multi-jurisdictional legal systems, and the application of legal LLMs in predictive and analytical tasks within the legal profession.

Conclusion

In essence, \ourmodel{} represents a significant stride towards realizing the potential of LLMs within the legal sector. By adeptly navigating the intricacies of legal language and providing a robust tool for document analysis, this model paves the way for a new era of legal research and practice, empowered by advanced AI capabilities. The open-source nature of \ourmodel{} further underscores the collaborative spirit of this endeavor, encouraging continued innovation and exploration at the intersection of law and artificial intelligence.