Overview of LawBench: Benchmarking Legal Knowledge of LLMs

The paper presents LawBench, a benchmark for assessing the legal capabilities of large language models (LLMs) within the Chinese civil-law system. Legal work demands a detailed understanding of specialized texts and concepts, which makes it a demanding test of domain-specific knowledge. The paper argues that benchmarks of this kind are needed to understand both the potential and the limitations of LLMs on legal tasks.

Key Features of LawBench

LawBench evaluates LLMs across three cognitive dimensions inspired by Bloom's Taxonomy:

- Legal Knowledge Memorization: This dimension assesses the ability of LLMs to memorize and recall legal concepts, facts, and articles.

- Legal Knowledge Understanding: This dimension measures the comprehension capabilities of LLMs, focusing on their ability to understand legal texts, entities, and relationships.

- Legal Knowledge Applying: This dimension evaluates the ability to apply legal knowledge in realistic, problem-solving scenarios, necessitating reasoning and analytical skills.

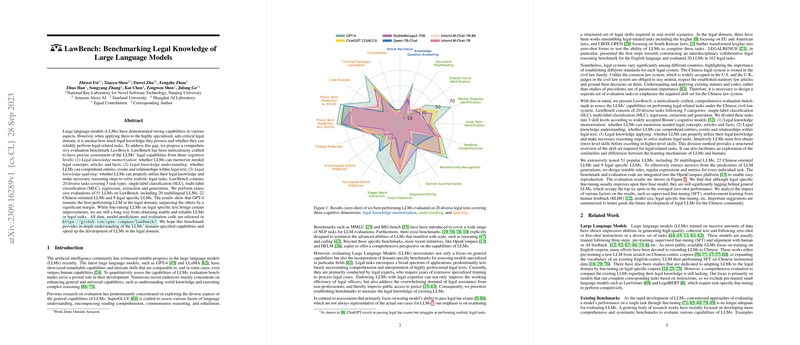

The benchmark comprises 20 tasks spread across these three cognitive dimensions and grouped into five task types: single-label classification, multi-label classification, regression, extraction, and generation. Example tasks include article recitation, dispute focus identification, named entity recognition, case analysis, and consultation.
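
The two-way grouping described above (cognitive dimension and task type) can be sketched as a small data structure. This is an illustrative sketch, not LawBench's actual code: the names `CognitiveLevel`, `TaskType`, `Task`, and `mean_score_by_level` are hypothetical, the level and type assigned to each sample task are assumptions made for the example, and the scores are placeholders rather than results from the paper.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class CognitiveLevel(Enum):
    MEMORIZATION = "memorization"
    UNDERSTANDING = "understanding"
    APPLYING = "applying"


class TaskType(Enum):
    SINGLE_LABEL = "single-label classification"
    MULTI_LABEL = "multi-label classification"
    REGRESSION = "regression"
    EXTRACTION = "extraction"
    GENERATION = "generation"


@dataclass(frozen=True)
class Task:
    """One benchmark task, tagged with its cognitive level and task type."""
    name: str
    level: CognitiveLevel
    task_type: TaskType


def mean_score_by_level(scores: dict[Task, float]) -> dict[CognitiveLevel, float]:
    """Average per-task scores within each cognitive level that has tasks."""
    buckets: dict[CognitiveLevel, list[float]] = defaultdict(list)
    for task, score in scores.items():
        buckets[task.level].append(score)
    return {level: sum(vals) / len(vals) for level, vals in buckets.items()}


# Assumed level/type assignments and placeholder scores, for illustration only.
sample_scores = {
    Task("article recitation", CognitiveLevel.MEMORIZATION, TaskType.GENERATION): 20.0,
    Task("named entity recognition", CognitiveLevel.UNDERSTANDING, TaskType.EXTRACTION): 60.0,
    Task("consultation", CognitiveLevel.APPLYING, TaskType.GENERATION): 30.0,
}
per_level = mean_score_by_level(sample_scores)
```

Tagging each task with both attributes makes it possible to report results along either axis, for example per cognitive dimension or per task type, from the same score table.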

Comprehensive Evaluation of LLMs

The paper evaluates 51 LLMs, grouped into multilingual, Chinese-oriented, and legal-specific models and covering a wide range of architectures and sizes. GPT-4 outperforms the other models. Fine-tuning on legal-specific corpora brings some improvement, but the results show that a considerable gap remains before LLMs can reliably handle legal tasks in practice.

Significant Findings and Analysis

- Model Performance: GPT-4 leads by a significant margin, especially on complex legal tasks. The analysis shows that larger models generally perform better, particularly in the one-shot setting.

- Fine-tuning Implications: Legal-specific fine-tuning yields a clear improvement, but the fine-tuned models still fall short of general-purpose models such as GPT-4.

- Retrieval-Augmentation Challenges: The models struggle to make effective use of retrieved content, which points to further research on how LLMs integrate retrieval mechanisms.

- Rule-based and Soft Metric Evaluation: Extracting answers from model output for legal tasks is often intricate, so the paper uses soft metrics alongside rule-based evaluation, such as soft-F1 for extraction tasks. These metrics matter most where an LLM's generation diverges linguistically from the ground-truth label.
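
To make the idea of a soft metric concrete, the following is a minimal sketch of a soft F1 score for extraction tasks. It is not LawBench's exact definition: the character-level similarity from Python's `difflib` and the best-match averaging scheme are assumptions chosen for illustration, and the function names `similarity` and `soft_f1` are hypothetical.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1] (assumed choice of soft match)."""
    if not a and not b:
        return 1.0
    return SequenceMatcher(None, a, b).ratio()


def soft_f1(predicted: list[str], gold: list[str]) -> float:
    """F1 in which each span earns partial credit from its best-matching counterpart."""
    if not predicted or not gold:
        # Both empty counts as a perfect match; exactly one empty scores zero.
        return 1.0 if not predicted and not gold else 0.0
    # Soft precision: how well each predicted span is covered by some gold span.
    precision = sum(max(similarity(p, g) for g in gold) for p in predicted) / len(predicted)
    # Soft recall: how well each gold span is covered by some predicted span.
    recall = sum(max(similarity(g, p) for p in predicted) for g in gold) / len(gold)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# A near-miss extraction receives partial credit instead of zero.
score = soft_f1(["Beijing Intermediate Court"], ["Beijing Intermediate People's Court"])
```

Under exact-match F1 the near-miss above would score zero; a soft variant rewards the overlap, which is the behavior the paper's soft metrics aim for when model wording differs from the label.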

Implications and Future Directions

LawBench provides a structured methodology for evaluating LLMs in legal domains, particularly under the civil-law system used in China. The paper implies a need for collaborative efforts to develop high-quality, reliable legal AI systems that are not only proficient in English law but also adaptable to legal systems worldwide.

Furthermore, the results suggest that future work could explore enhanced pre-training strategies, better alignment of LLMs with legal data, and improved retrieval-augmented approaches, all aimed at narrowing the gap between human legal expertise and machine learning capabilities.

In essence, this research marks an important advance in quantifying and qualifying the legal domain capabilities of current LLMs, setting a foundation for future explorations into domain-specific AI applications.