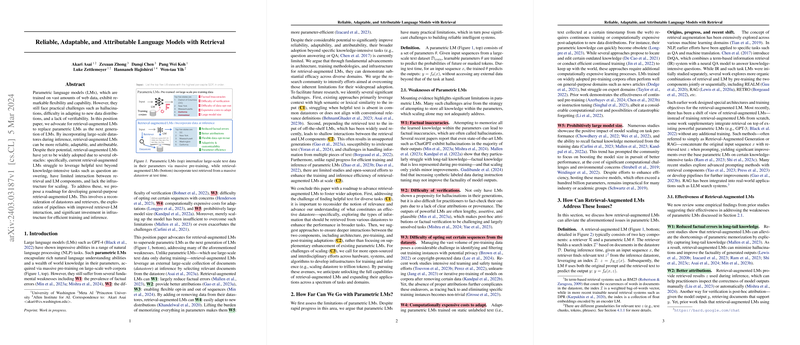

This paper (Asai et al., 5 Mar 2024) advocates for retrieval-augmented language models (RALMs) as the successor to purely parametric language models (LMs), arguing that RALMs offer significant advantages in reliability, adaptability, and attributability, areas where modern LMs such as GPT-4 still fall short.

The authors begin by outlining the fundamental weaknesses of parametric LMs:

- W1: Factual inaccuracies (Hallucinations): Parametric LMs struggle to store all knowledge, especially long-tail facts, leading to errors.

- W2: Difficulty of verification: Outputs lack clear attributions, making fact-checking difficult.

- W3: Difficulty of opting data out: Removing specific data (due to privacy or copyright) from the training corpus is hard, and tracing output provenance is complex.

- W4: Computationally expensive adaptation: Updating parametric LMs to new data or domains requires costly re-training or fine-tuning.

- W5: Prohibitively large model size: While scaling improves performance, it leads to immense computational costs and resource requirements.

Retrieval-augmented LMs, in contrast, maintain an external datastore and retrieve relevant information during inference to condition their output. The paper presents this approach as a way to mitigate each of the weaknesses above, with each benefit below addressing the weakness of the same number:

- W1: Reduced factual errors: By accessing external knowledge, RALMs can explicitly retrieve and incorporate long-tail facts, reducing hallucinations.

- W2: Better attributions: The retrieved documents can be provided as sources for the generated text, improving verifiability.

- W3: Flexible opt-in/out: Managing the datastore is simpler than managing a massive training corpus, allowing easier control over included information.

- W4: Adaptability & customizability: Changing or updating the datastore allows easy adaptation to new domains or real-time knowledge without costly LM retraining.

- W5: Parameter efficiency: RALMs can potentially achieve similar or better performance with smaller LM parameters by leveraging external knowledge, reducing the burden of memorization.
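
The retrieve-then-generate loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: the `Datastore` class, the bag-of-words cosine retriever, and the prompt template are all hypothetical stand-ins, and the call to an actual LM is left out. It shows where attribution (returned source ids) and opt-out (deleting a datastore entry) fit in.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Datastore:
    """Toy in-memory datastore mapping document ids to text (hypothetical)."""
    docs: dict = field(default_factory=dict)

    def add(self, doc_id, text):
        self.docs[doc_id] = text

    def remove(self, doc_id):
        # Opt-out: dropping an entry requires no change to the LM itself.
        self.docs.pop(doc_id, None)


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(store, query, k=2):
    """Return up to k (doc_id, score) pairs with non-zero lexical similarity."""
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text))))
              for doc_id, text in store.docs.items()]
    scored = [(doc_id, score) for doc_id, score in scored if score > 0]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def build_prompt(store, query, k=2):
    """Prepend retrieved passages to the query and return their ids as sources."""
    hits = retrieve(store, query, k)
    context = "\n".join(f"[{doc_id}] {store.docs[doc_id]}" for doc_id, _ in hits)
    prompt = f"{context}\n\nQuestion: {query}\nAnswer:"
    return prompt, [doc_id for doc_id, _ in hits]


if __name__ == "__main__":
    store = Datastore()
    store.add("doc-a", "The Eiffel Tower is located in Paris.")
    store.add("doc-b", "Mount Fuji is the highest mountain in Japan.")
    prompt, sources = build_prompt(store, "Where is the Eiffel Tower located?", k=1)
    print(prompt)
    print("sources:", sources)
```

Swapping the contents of `store` changes what the model can draw on without touching any model parameters, which is the adaptability argument in miniature.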

Despite their potential, the paper notes that RALMs have not achieved widespread adoption beyond specific knowledge-intensive tasks such as question answering. The authors identify three main obstacles:

- C1: Limitations of retrievers and datastores: Current retrieval often relies on semantic or lexical similarity, which may not find helpful text for diverse tasks (e.g., reasoning) where different types of relationships are needed. Over-reliance on general datastores like Wikipedia also limits effectiveness in specialized domains.

- C2: Limited interactions between retrievers and LMs: Simple approaches like prepending retrieved text to the input (common in RAG) lead to shallow interactions. This can result in unsupported generations, susceptibility to irrelevant context, and difficulties in integrating information from multiple documents. Input augmentation also increases context length, raising inference costs.

- C3: Lack of infrastructure for scaling: Unlike parametric LMs, training and inference for RALMs, especially with massive datastores, lack standardized, efficient infrastructure. Updating large indices during training is computationally expensive, and large-scale nearest neighbor search for inference requires significant resources.
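
To make the resource cost in C3 more concrete, the sketch below shows one simple way to shrink a vector index: keep one bit per embedding dimension instead of a 32-bit float, then compare codes by Hamming distance. The sign-based binarization, the function names (`binarize`, `hamming`, `search`), and the exhaustive scan are assumptions chosen for illustration; the paper does not prescribe a particular scheme, and a real system would likely pair compression with an approximate index instead of a linear scan.

```python
import random


def binarize(vec):
    """Pack the sign pattern of a float vector into one int.

    Bit i is set if and only if vec[i] > 0.
    """
    code = 0
    for i, value in enumerate(vec):
        if value > 0:
            code |= 1 << i
    return code


def hamming(a, b):
    """Number of bit positions in which two codes differ."""
    return bin(a ^ b).count("1")


def search(codes, query_code, k=1):
    """Exhaustive Hamming search; returns the indices of the k closest codes."""
    order = sorted(range(len(codes)), key=lambda i: hamming(codes[i], query_code))
    return order[:k]


if __name__ == "__main__":
    rng = random.Random(0)
    dim = 64
    # Random vectors stand in for real passage embeddings.
    vectors = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(1000)]
    codes = [binarize(v) for v in vectors]
    # A slightly perturbed copy of one stored vector serves as the query.
    query = [x + 0.05 * rng.gauss(0, 1) for x in vectors[42]]
    print(search(codes, binarize(query), k=3))
```

The trade-off is that binarization discards magnitude information, so the ranking is only an approximation of the ranking under the original embeddings.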

To address these challenges and promote the broader adoption of RALMs, the paper proposes a roadmap:

- Rethinking Retrieval and the Datastore (Addressing C1):

  - Beyond semantic and lexical similarity: Develop new definitions of "relevance" and retrieval methods that can find helpful text for diverse tasks beyond just factual knowledge, potentially by incorporating contextual information or task-specific signals (e.g., instruction-tuned retrievers).

  - Reconsidering and improving the datastore: Explore strategies for curating, filtering, and composing datastores beyond general corpora like Wikipedia. This includes balancing multiple domains and ensuring data quality.

- Enhancing Retriever-LM Interactions (Addressing C2):

  - New architectures beyond input augmentation: Investigate more integrated architectures like intermediate fusion (e.g., RETRO) or output interpolation (e.g., kNN LM, NPM) that allow deeper interaction between the retriever and LM components throughout generation. This may require significant pre-training efforts.

  - Incorporating retrieval during LM pre-training: Train LMs with retrieval from the outset to make them better at leveraging retrieved context, potentially with minimal architectural changes.

  - Further adaptation after pre-training: Explore post-pre-training adaptation methods like instruction tuning or RLHF specifically designed for RALMs to improve their ability to utilize retrieved information and avoid unsupported generations. Techniques to filter or adaptively use retrieved context are also promising.

  - Efficient end-to-end training: Develop training strategies that jointly optimize both the retriever and the LM, potentially without direct supervision on retrieved documents, to improve overall pipeline performance and reduce retrieval errors.

- Building Better Systems and Infrastructures for Scaling and Adaptation (Addressing C3):

  - Scalable search for massive-scale datastores: Invest in research and development for efficient nearest neighbor search algorithms, data compression (e.g., binary embeddings), quantization, and data loading techniques for datastores scaled to trillions of tokens. This requires interdisciplinary efforts involving systems and hardware design.

  - Standardization and open-source developments: Develop standardized libraries, frameworks (beyond basic RAG interfaces like LangChain or LlamaIndex), and evaluation benchmarks specifically for RALMs to facilitate research, implementation, and adoption across different architectures and training configurations.
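
As an illustration of the output interpolation family named in the roadmap (kNN LM), the final next-token distribution is a weighted mix of a distribution built from retrieved neighbors and the parametric LM's own distribution: p(y | x) = λ · p_kNN(y | x) + (1 − λ) · p_LM(y | x). The code below is a minimal sketch, assuming the neighbors and their distances have already been retrieved; the function names, the toy probabilities, and the value of `lam` are illustrative assumptions, not values from the paper.

```python
import math
from collections import defaultdict


def knn_distribution(neighbors):
    """Turn retrieved (next_token, distance) pairs into a token distribution.

    Each neighbor is weighted by exp(-distance); weights are normalized and
    summed per token, so closer neighbors contribute more probability mass.
    """
    if not neighbors:
        return {}
    # Shift by the minimum distance for numerical stability; the result is unchanged.
    d_min = min(distance for _, distance in neighbors)
    weights = [math.exp(-(distance - d_min)) for _, distance in neighbors]
    total = sum(weights)
    probs = defaultdict(float)
    for (token, _), weight in zip(neighbors, weights):
        probs[token] += weight / total
    return dict(probs)


def interpolate(p_lm, p_knn, lam=0.25):
    """Mix the two distributions: lam * p_knn + (1 - lam) * p_lm."""
    vocab = set(p_lm) | set(p_knn)
    return {t: lam * p_knn.get(t, 0.0) + (1 - lam) * p_lm.get(t, 0.0) for t in vocab}


if __name__ == "__main__":
    # Toy parametric LM distribution over the next token.
    p_lm = {"Paris": 0.4, "London": 0.6}
    # Toy retrieved neighbors: (token that followed the stored context, distance).
    neighbors = [("Paris", 0.1), ("Paris", 0.3), ("Lyon", 1.2)]
    mixed = interpolate(p_lm, knn_distribution(neighbors), lam=0.5)
    for token, prob in sorted(mixed.items(), key=lambda kv: -kv[1]):
        print(f"{token}: {prob:.3f}")
```

Because the mix happens at the output layer, the retrieved evidence never enters the LM's context window, which is one way this family avoids the context-length cost of input augmentation.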

In summary, the paper posits that RALMs represent a promising path forward for more robust and flexible LLMs. However, realizing this potential requires fundamental advancements in defining and finding relevant information, designing architectures that enable deep interactions between retrieval and generation, and building scalable infrastructure for training and inference with massive datastores. These challenges necessitate collaborative, interdisciplinary research efforts.