Consistency LLMs (CLLMs): Enhancing Efficiency in LLM Inference

The paper introduces Consistency LLMs (CLLMs), which aim to speed up LLM inference by making Jacobi decoding, an existing parallel decoding method, effective in practice. Traditional autoregressive (AR) decoding generates one token per forward pass, and this sequential nature leads to high latency, particularly for long responses. Jacobi decoding instead updates a block of tokens in parallel at each iteration, so it has the potential to significantly reduce inference time.

Understanding the Bottleneck in Existing Parallel Decoding

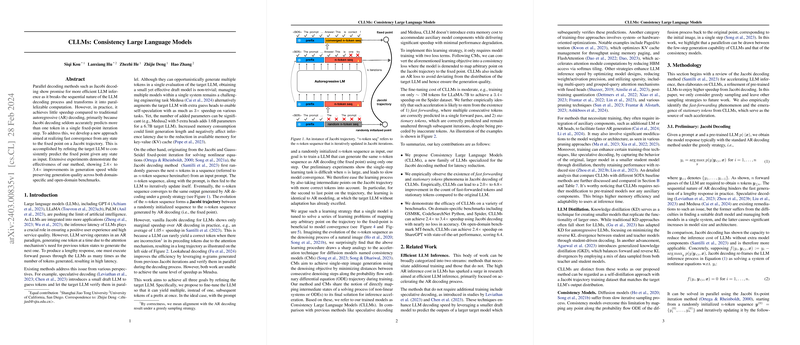

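Jacobi decoding starts from an initial guess for a block of n tokens and refines all n positions in parallel, iterating until the block stops changing. Under greedy decoding, that fixed point matches the output of AR decoding. Despite this theoretical efficiency, practical speedups have been limited: a standard LLM rarely gets more than one additional token right per iteration, because the attention mechanism conditions each token on the preceding ones, and a correct prediction is unlikely while those preceding tokens are still wrong.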
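The iteration described above can be illustrated with a minimal sketch. It assumes a toy deterministic `next_token` function as a hypothetical stand-in for greedy prediction by an LLM; the function names, vocabulary size, and prompt are illustrative and do not come from the paper.

```python
VOCAB_SIZE = 10  # toy vocabulary; tokens are the integers 0..9 (assumption)

def next_token(prefix):
    """Hypothetical stand-in for greedy next-token prediction by an LLM."""
    return (7 * sum(prefix) + 3 * len(prefix) + 1) % VOCAB_SIZE

def ar_decode(prompt, n):
    """Autoregressive decoding: n sequential calls, one new token per call."""
    out = []
    for _ in range(n):
        out.append(next_token(prompt + out))
    return out

def jacobi_decode(prompt, n, init_token=0):
    """Jacobi decoding: refine all n positions per iteration until nothing changes."""
    y = [init_token] * n  # initial guess for the whole block
    for iteration in range(1, n + 1):
        # A real LLM would compute all n positions in one forward pass
        # with a causal mask; here each position is evaluated in turn.
        new_y = [next_token(prompt + y[:i]) for i in range(n)]
        if new_y == y:  # fixed point reached early
            return y, iteration
        y = new_y
    # After k iterations the first k tokens are correct, so n iterations suffice.
    return y, n

prompt = [3, 1, 4]
tokens, iterations = jacobi_decode(prompt, n=6)
print(tokens == ar_decode(prompt, 6), iterations)
```

With a toy function like this one, the block usually advances by only one correct token per iteration, so Jacobi decoding needs about as many iterations as AR decoding needs steps. That is the bottleneck the section describes.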

The Innovation of CLLMs

The authors propose fine-tuning the LLM so that it consistently predicts the fixed point of a Jacobi trajectory from any state on that trajectory. A model trained this way gets multiple tokens right per iteration, so Jacobi decoding converges in fewer steps.

- Training Methodology: CLLMs are trained on a dataset of Jacobi trajectories, each containing the states from the initial guess through the fixed point. Two types of consistency losses are employed:

  - Global Consistency Loss: Directly maps any point in the Jacobi trajectory to its fixed point.

  - Local Consistency Loss: Requires adjacent points in the trajectory to yield the same prediction, which implicitly guides every point towards the fixed point.

- Empirical Results: The paper reports 2.4× to 3.4× faster generation across various benchmarks while preserving generation quality.
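The difference between the two consistency losses can be made concrete with a small sketch. Everything here is an assumption made for illustration: `toy_probs` is a hypothetical stand-in for the model's next-token distribution, KL divergence is one possible choice of distance, and the trajectory is hand-written rather than produced by real Jacobi decoding. In actual training the target side would typically be treated as a constant, with no gradient flowing through it.

```python
import math

VOCAB_SIZE = 5  # toy vocabulary size (assumption)

def toy_probs(prefix):
    """Hypothetical stand-in for an LLM: next-token distribution given a prefix."""
    logits = [math.sin(0.7 * (sum(prefix) + 1) * (v + 1)) for v in range(VOCAB_SIZE)]
    z = sum(math.exp(l) for l in logits)
    return [math.exp(l) / z for l in logits]

def kl(p, q):
    """KL divergence between two strictly positive distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def sequence_distance(prompt, target, state):
    """Sum over positions of the distance between the predictions made when
    conditioning on the target's prefix versus the state's prefix."""
    return sum(
        kl(toy_probs(prompt + target[:i]), toy_probs(prompt + state[:i]))
        for i in range(len(state))
    )

def global_consistency_loss(prompt, trajectory):
    """Compare every state directly against the fixed point (the last state)."""
    fixed_point = trajectory[-1]
    return sum(sequence_distance(prompt, fixed_point, s) for s in trajectory) / len(trajectory)

def local_consistency_loss(prompt, trajectory):
    """Compare each state against its successor in the trajectory."""
    pairs = list(zip(trajectory[:-1], trajectory[1:]))
    return sum(sequence_distance(prompt, nxt, cur) for cur, nxt in pairs) / len(pairs)

prompt = [1, 4]
# Hand-written illustrative trajectory; the last state plays the role of the fixed point.
trajectory = [[0, 0, 0], [2, 0, 0], [2, 3, 0], [2, 3, 1]]
print(global_consistency_loss(prompt, trajectory))
print(local_consistency_loss(prompt, trajectory))
```

Both functions return zero when every state already equals its target, which matches the intent of the objective: a model that predicts the fixed point from any state incurs no consistency penalty.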

Implications and Observations

The acceleration stems from two phenomena identified in CLLMs:

- Fast Forwarding: The ability to predict several correct subsequent tokens in one step.

- Stationary Tokens: Correctly predicted tokens that remain fixed despite incorrect preceding tokens.

These capabilities suggest that CLLMs have learned implicit linguistic structures, such as collocations, that can be predicted as a group rather than token by token. Beyond faster inference, this observation may also offer insights into more efficient model training and design.
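Both phenomena can be measured on a recorded Jacobi trajectory. The sketch below uses working definitions that are assumptions for illustration, not the paper's exact metrics: a fast-forwarded token is any token by which the converged prefix advances in one iteration beyond the single token that is always guaranteed, and a stationary token is one that is already correct while an earlier position is still wrong and that never changes afterwards. The trajectory is hand-written.

```python
def converged_prefix_len(state, fixed_point):
    """Number of leading tokens that already match the fixed point."""
    n = 0
    for a, b in zip(state, fixed_point):
        if a != b:
            break
        n += 1
    return n

def fast_forward_counts(trajectory):
    """Per iteration: extra tokens added to the converged prefix beyond the guaranteed one."""
    fixed_point = trajectory[-1]
    lens = [converged_prefix_len(s, fixed_point) for s in trajectory]
    return [max(0, after - before - 1) for before, after in zip(lens, lens[1:])]

def stationary_positions(trajectory):
    """Positions that are correct despite a wrong earlier token and stay correct."""
    fixed_point = trajectory[-1]
    found = set()
    for j, state in enumerate(trajectory[:-1]):
        k = converged_prefix_len(state, fixed_point)  # index of the first wrong token
        for i in range(k + 1, len(state)):
            if all(later[i] == fixed_point[i] for later in trajectory[j:]):
                found.add(i)
    return sorted(found)

# Hand-written illustrative trajectory; the last state is the fixed point.
trajectory = [
    [0, 0, 0, 0, 0],
    [5, 2, 4, 1, 0],  # two tokens converge at once; position 3 is right early
    [5, 2, 7, 1, 3],
    [5, 2, 7, 1, 9],
]
print(fast_forward_counts(trajectory))
print(stationary_positions(trajectory))
```

Under these definitions a stationary token also lets the converged prefix jump ahead once the tokens before it are fixed, so the two counts are related rather than independent.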

Comparative Analysis

CLLMs present an advantageous alternative to existing methods such as speculative decoding and certain architectural augmentations. They do not necessitate additional model components or significant architectural changes, thus maintaining memory efficiency and ease of integration into existing systems.

Future Directions

This research can potentially influence both theoretical and practical domains:

- Theoretical: Enhancing understanding of parallel token prediction and implicit language structures within LLMs.

- Practical: Enabling faster LLM inference could transform real-time applications across industries where latency is critical.

In conclusion, CLLMs represent a significant step towards more efficient LLM deployment. Their ability to accelerate inference without compromising on quality is particularly promising for large-scale applications. Future work could explore extending these methods to various LLM architectures and further refining the training process to accommodate diverse linguistic patterns.