Introduction

Transformers have significantly advanced the state of machine translation (MT), but their autoregressive inference, in which each token prediction depends on the previously generated tokens, limits computational efficiency, particularly in latency-sensitive environments. Previous attempts to mitigate this issue rely on dedicated network architectures or learning-based methods, which tend to trade translation quality for inference speed. This paper introduces an approach that avoids that trade-off.

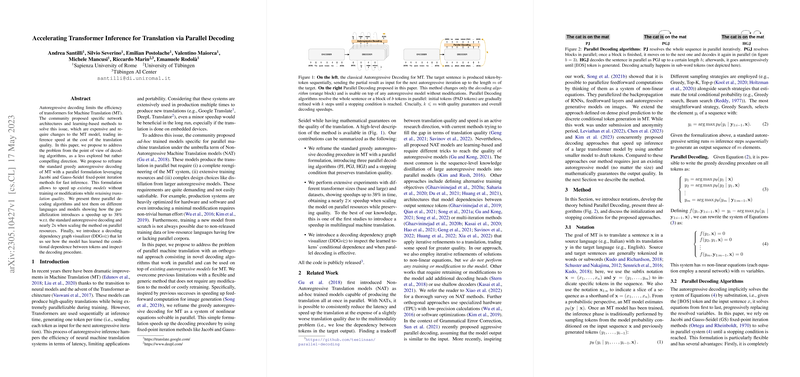

Parallel Decoding Strategies

The core contribution of this work is the reframing of standard greedy autoregressive decoding as a parallel formulation, drawing on the classic Jacobi and Gauss-Seidel fixed-point iteration methods. The authors propose three parallel decoding algorithms, Parallel Jacobi (PJ), Parallel Gauss-Seidel Jacobi (PGJ), and Hybrid Gauss-Seidel Jacobi (HGJ), which differ in how they handle blocking and sequence generation. Crucially, they offer speed improvements of up to 38% over traditional autoregressive decoding without compromising translation quality.
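To make the fixed-point view concrete, the following is a minimal sketch of Jacobi-style decoding, not the authors' implementation. It assumes a hypothetical deterministic function `toy_next_token` standing in for a transformer's greedy (argmax) prediction given a prefix; the names `greedy_decode`, `jacobi_decode`, `VOCAB`, and `PAD` are likewise illustrative. In a real model, all positions would be updated by a single parallel forward pass; here a list comprehension simulates that step. The blocked variants (PGJ and HGJ) are not shown.

```python
VOCAB = 50  # hypothetical vocabulary size for the toy model
PAD = 0     # hypothetical initial guess for every position


def toy_next_token(prefix):
    """Stand-in for a model's greedy prediction given a prefix (an assumption)."""
    i = len(prefix)
    if i % 2 == 0:
        return (7 * i + 3) % VOCAB      # depends only on the position
    return (prefix[-1] + 5) % VOCAB     # depends on the previous token


def greedy_decode(next_token, length):
    """Standard autoregressive decoding: one sequential step per token."""
    y = []
    for _ in range(length):
        y.append(next_token(tuple(y)))
    return y


def jacobi_decode(next_token, length, pad=PAD):
    """Jacobi-style decoding: refine a full-length guess until it stops changing.

    Each iteration recomputes every position from the previous iterate only,
    so the per-position calls are independent and could run in parallel.
    Returns the decoded sequence and the number of iterations used.
    """
    y = [pad] * length
    for iteration in range(1, length + 1):
        new_y = [next_token(tuple(y[:i])) for i in range(length)]
        if new_y == y:                  # fixed point reached: y is the greedy output
            return y, iteration
        y = new_y
    # After `length` iterations the first `length` tokens are guaranteed correct.
    return y, length


reference = greedy_decode(toy_next_token, 8)
decoded, iterations = jacobi_decode(toy_next_token, 8)
print(decoded == reference, iterations)
```

In this toy setting the Jacobi loop reproduces the greedy output in fewer iterations than the sequence length, because half of the tokens do not depend on their predecessors. With a real translation model, the number of iterations saved depends on how often several tokens stabilize in the same pass, and the wall-clock gain depends on the parallel hardware available.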

Empirical Results

In experiments across multiple languages and model sizes, the parallel decoding algorithms show promising speed gains. Reported speedups reach nearly 2x when the methods are scaled on parallel computational resources, while translation quality, as measured by BLEU, is preserved. Notably, this is achieved without the heavy computational overhead of retraining or of engineering densely parameterized models, a feat not yet matched by other methods in the same domain.

Method Analysis and Tools

The paper also introduces a Decoding Dependency Graph visualizer (DDGviz), which provides insight into the conditional dependencies between generated tokens during decoding. The authors support the method's flexibility with further analyses of scaling efficiency and the potential impact on computational resources. The paper also discusses the trade-offs and limitations of the approach, acknowledging that parallel resources are needed to realize the observed inference speedups.

Conclusion

This paper presents an efficient, model-agnostic method for accelerating transformer inference in machine translation. By leveraging iterative refinement methods from numerical analysis, the approach achieves wall-clock speed improvements while maintaining translation quality, providing a practical new avenue for deploying MT systems in latency-sensitive settings where parallel resources are available.