Evaluating Long-Term Memory Capabilities in LLMs through Extensive Conversational Analysis

Introduction

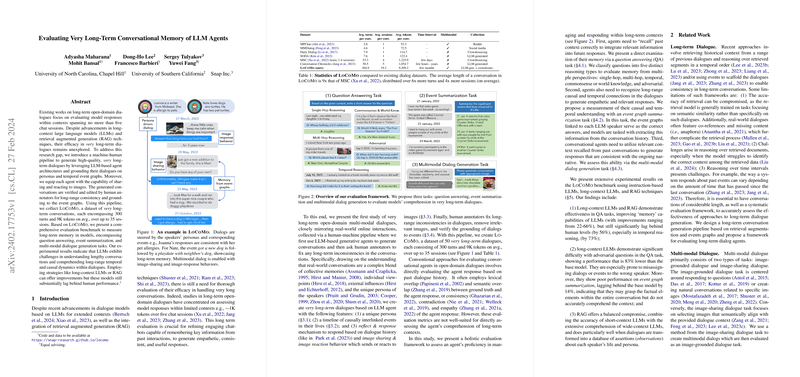

Large language models (LLMs) have demonstrated remarkable capabilities in generating human-like text across a range of applications. However, their effectiveness in very long-term dialogues remains relatively unexplored. To bridge this gap, we use LLM-based agents to generate and analyze very long-term conversations. We introduce the LoCoMo dataset, whose dialogues far exceed the length and complexity of those previously studied, and use it to establish a comprehensive benchmark for evaluating the long-term memory of conversational AI.

The LoCoMo Dataset

The LoCoMo dataset is unique in its depth and breadth. It comprises 50 dialogues, each averaging about 300 turns and 9,000 tokens and spread across up to 35 sessions. Unlike existing conversational datasets, LoCoMo adds a multi-modal dimension through image sharing and reactions to shared images, which provides richer context for dialogue. The dataset is generated through a novel machine-human pipeline that ensures high quality, consistency, and grounding in predefined personas and temporal event graphs. The resulting conversations closely emulate real-world interactions, making them a strong resource for research on very long-term memory in conversational agents.
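
To make the shape of such a dialogue concrete, here is a minimal sketch of how a multi-session, multi-modal conversation could be represented. The class names, field names, speakers, and dates are all hypothetical and chosen for illustration; this is not the dataset's actual schema, and the whitespace-based token count is only a crude stand-in for a real tokenizer.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Turn:
    speaker: str
    text: str
    # Hypothetical field: a caption standing in for a shared image, if any.
    image_caption: Optional[str] = None


@dataclass
class Session:
    date: str  # illustrative timestamp for the session
    turns: List[Turn] = field(default_factory=list)


@dataclass
class Conversation:
    sessions: List[Session] = field(default_factory=list)

    def num_turns(self) -> int:
        # Total number of turns across all sessions.
        return sum(len(s.turns) for s in self.sessions)

    def approx_tokens(self) -> int:
        # Whitespace splitting only approximates a model tokenizer.
        return sum(len(t.text.split()) for s in self.sessions for t in s.turns)


# A toy two-session conversation (made-up content).
conv = Conversation(sessions=[
    Session(date="2023-05-01", turns=[
        Turn("A", "I adopted a dog last week."),
        Turn("B", "What is its name?"),
    ]),
    Session(date="2023-06-12", turns=[
        Turn("A", "Here is Max at the park.", image_caption="a dog running on grass"),
    ]),
])

print(conv.num_turns(), conv.approx_tokens())  # 3 16
```

At the scale described above, a single conversation would hold up to 35 such sessions and roughly 300 turns, which is what makes recalling an early detail in a late session difficult.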

Evaluation Framework

Our evaluation framework introduces three distinct tasks designed to test different facets of long-term memory and understanding within conversational models:

- Question Answering Task: This task assesses the model's ability to recall and integrate information across dialogues. It spans five reasoning categories: single-hop, multi-hop, temporal, open-domain knowledge, and adversarial.

- Event Summarization Task: This evaluates the model's capacity to comprehend and summarize the causal and temporal dynamics depicted within the conversational event graphs.

- Multi-modal Dialogue Generation Task: This task measures the model's proficiency in using past dialogues and related context to generate consistent and relevant responses across both text and images.
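
As an illustration of how the question answering task could be scored per reasoning category, the sketch below computes a token-overlap F1 between a predicted and a reference answer and averages it within each category. The choice of token-level F1 is an assumption made here for illustration, not a metric stated in this summary, and the function names and example answers are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 on lowercased, whitespace-split answers."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def f1_by_category(examples: List[Tuple[str, str, str]]) -> Dict[str, float]:
    """Average F1 per category; each example is (category, prediction, reference)."""
    scores = defaultdict(list)
    for category, prediction, reference in examples:
        scores[category].append(token_f1(prediction, reference))
    return {c: sum(v) / len(v) for c, v in scores.items()}


# Made-up predictions and references, one per category shown.
results = f1_by_category([
    ("single-hop", "max", "Max"),
    ("temporal", "june", "may"),
])
print(results)  # {'single-hop': 1.0, 'temporal': 0.0}
```

Reporting scores per category rather than as one aggregate makes it visible when a model handles single-hop recall well but fails on temporal or adversarial questions.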

Experimental Findings

Our experimental analysis reveals several insights into how well current LLMs comprehend and remember information over long dialogues. Long-context LLMs and retrieval-augmented generation (RAG) strategies show promise, particularly in improving QA performance, but they still fall substantially short of human-level understanding, especially in tasks requiring sophisticated temporal reasoning and the integration of complex dialogue history. Key findings include:

- Long-context LLMs and RAG offer improvements in QA tasks but lag significantly in areas such as adversarial questioning and event graph summarization.

- Base LLMs struggle with maintaining consistency over lengthy dialogues, often failing to correctly utilize their context.

- Incorporating elements from the multi-modal dialogues enhances conversational agents' ability to produce more relevant and consistent outputs.
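
To show what a RAG strategy over dialogue history looks like in its simplest form, the sketch below ranks past turns by bag-of-words cosine similarity to a question and places the top matches in a prompt. This is a toy stand-in under stated assumptions: the retriever, the prompt layout, and all function names are hypothetical, and a practical system would more likely use a learned dense retriever.

```python
import math
from collections import Counter
from typing import List


def bow(text: str) -> Counter:
    # Bag-of-words vector from lowercased whitespace tokens.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, turns: List[str], k: int = 2) -> List[str]:
    # Return the k past turns most similar to the query.
    q = bow(query)
    ranked = sorted(turns, key=lambda t: cosine(q, bow(t)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, turns: List[str], k: int = 2) -> str:
    # Only the retrieved turns are placed in the model's context.
    context = "\n".join(retrieve(query, turns, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Made-up dialogue history.
history = [
    "I adopted a dog named Max",
    "The weather was rainy today",
    "Max loves the park",
]
print(retrieve("what is the dog named", history, k=1))  # ['I adopted a dog named Max']
```

The trade-off is that retrieval keeps the prompt short, but any turn the retriever misses is invisible to the model, which is one plausible reason such strategies can still struggle on questions that depend on several scattered turns.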

Future Directions

This research underscores the need for further advances in LLMs to model very long-term conversational memory effectively. Future work may focus on improving contextual understanding and the integration of multi-modal data. Other promising directions include making conversational agents more robust to adversarial inputs and better at capturing temporal and causal relationships in dialogues.

Conclusion

Our paper extends current conversational AI research to very long-term dialogues and introduces the LoCoMo dataset as a benchmark for evaluating the long-term memory capabilities of LLMs. The findings highlight significant challenges in modeling extensive conversational contexts and point to the need for novel methods that can effectively manage and use long-term conversational memories.