An Overview of the Paper "Recursively Summarizing Enables Long-Term Dialogue Memory in LLMs"

This paper addresses a known weakness of large language models (LLMs): keeping responses consistent over long dialogues. The authors recursively summarize dialogue sessions to give the model a running memory, enabling it to handle extended dialogue contexts more effectively.

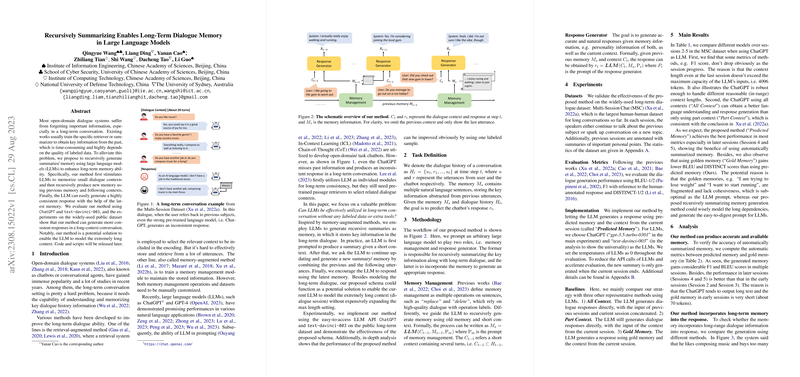

The core contribution is a recursive summarization technique for improving long-term memory in LLMs. The paper highlights a limitation of LLMs such as GPT-4: despite their strong conversational abilities, they can forget earlier context over long interactions and generate inconsistent responses. The proposed solution uses summarization to build a dynamic memory that evolves as the conversation progresses. The LLM itself first summarizes a small dialogue context, then recursively updates that summary with new information as the conversation unfolds.
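The update loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the `LLM` type, the function names, and the prompt wording are all assumptions made for the example, and the final `respond` step reflects one plausible way to consume the memory.

```python
from typing import Callable, List

# Hypothetical type: any function that maps a prompt string to a completion.
LLM = Callable[[str], str]

# Illustrative prompt wording only; not taken from the paper.
UPDATE_PROMPT = (
    "Previous memory:\n{memory}\n\n"
    "New dialogue session:\n{session}\n\n"
    "Rewrite the memory so that it also covers the new session."
)

RESPONSE_PROMPT = (
    "Memory of earlier sessions:\n{memory}\n\n"
    "Current dialogue:\n{context}\n\n"
    "Reply to the last user turn, staying consistent with the memory."
)


def update_memory(llm: LLM, memory: str, session: List[str]) -> str:
    """Fold one finished session into the running summary."""
    prompt = UPDATE_PROMPT.format(
        memory=memory or "(empty)", session="\n".join(session)
    )
    return llm(prompt)


def summarize_recursively(llm: LLM, sessions: List[List[str]]) -> str:
    """Apply update_memory session by session, starting from an empty memory."""
    memory = ""
    for session in sessions:
        # Each new memory is built from the previous memory plus the new session.
        memory = update_memory(llm, memory, session)
    return memory


def respond(llm: LLM, memory: str, context: List[str]) -> str:
    """Generate a reply from the memory plus the current context only."""
    prompt = RESPONSE_PROMPT.format(
        memory=memory or "(empty)", context="\n".join(context)
    )
    return llm(prompt)
```

Because each step sees only the previous summary and the newest session, the prompt length stays roughly constant no matter how many sessions have passed, at the cost of whatever detail the summary drops.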

The method is evaluated on both open and closed LLMs, using a widely used public dataset. The results show that recursive summarization yields more consistent and contextually appropriate responses in long-term conversations. The method also complements LLMs with long context windows (e.g., 8K and 16K tokens) and retrieval-enhanced models, offering a potential solution for extremely long dialogue contexts.

The proposed method is notable for its simplicity and effectiveness: it operates as a plug-in and is therefore easy to integrate into existing systems. The paper also reports empirical evidence that, even without ground-truth memories, the system generates coherent and relevant summaries, suggesting its potential as a robust tool for long-context modeling.
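To make the plug-in idea concrete, the sketch below wraps an existing text-generation function without modifying it. The class name `SummaryMemoryPlugin`, its methods, and the prompt strings are hypothetical and chosen for illustration; the paper does not prescribe this interface.

```python
from typing import Callable, List


class SummaryMemoryPlugin:
    """Hypothetical wrapper adding a summary memory to any prompt -> text function."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self.generate = generate      # the existing, unmodified model call
        self.memory = ""              # running summary of past sessions
        self.turns: List[str] = []    # turns of the session in progress

    def chat(self, user_message: str) -> str:
        """Answer one user turn using the memory plus the current session."""
        self.turns.append(f"User: {user_message}")
        prompt = (
            f"Memory:\n{self.memory or '(none yet)'}\n\n"
            "Dialogue:\n" + "\n".join(self.turns) + "\nAssistant:"
        )
        reply = self.generate(prompt)
        self.turns.append(f"Assistant: {reply}")
        return reply

    def end_session(self) -> None:
        """Summarize the finished session into memory, then clear the buffer."""
        prompt = (
            f"Old memory:\n{self.memory or '(none yet)'}\n\n"
            "Finished session:\n" + "\n".join(self.turns) + "\n\nUpdated memory:"
        )
        self.memory = self.generate(prompt)
        self.turns = []
```

The wrapped model is used for both replying and summarizing, so no separate summarizer or labeled memory data is needed; swapping in a different backend only requires passing a different `generate` callable.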

The implications of this work extend beyond dialogue systems. The framework offers a path toward better contextual understanding in applications that require sustained context retention and coherence. Future work could integrate additional memory augmentation methods or adapt the approach to other long-context tasks, such as narrative generation or extended interactive storytelling.

In conclusion, the paper makes a valuable contribution to research on LLMs by providing a practical and efficient method for augmenting their memory. Recursive summarization stands out as a promising direction for future work on contextual breadth and dialogue continuity in LLMs.