Advancements in Bilingual Text Embeddings: A Dive into Multi-Task Contrastive Learning

Introduction

Text embedding models have driven much of the recent progress in NLP applications and research, particularly where systems must capture and retrieve semantic meaning from large text corpora. Most monolingual models have been designed with English in mind, and demand for effective multilingual models has grown as online content becomes more linguistically diverse. To address the limitations of existing multilingual models, this paper introduces a suite of bilingual text embedding models, each of which processes English alongside one other target language. The models also handle long inputs of up to 8192 tokens and use a multi-task learning strategy to improve performance on semantic textual similarity (STS) and retrieval tasks.

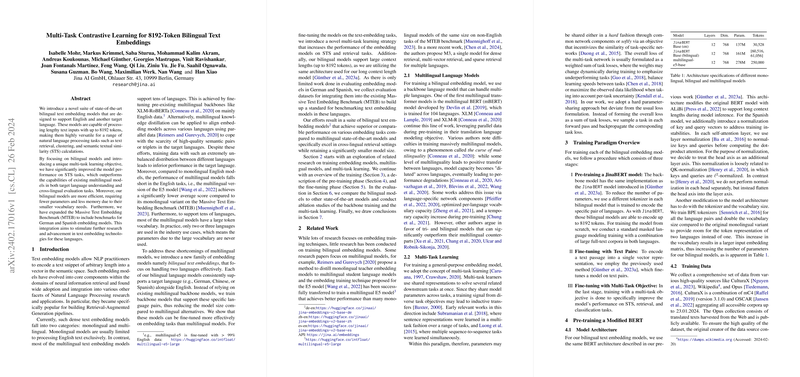

Constructing Bilingual Models

The paper favors bilingual models over the more commonly used multilingual frameworks. The reasoning is that most industry use cases seldom need the broad language coverage that multilingual models provide. By focusing on a specific language pair, the bilingual models avoid unnecessary computational overhead and improve quality on the targeted languages. The models are built on customized backbones that support inputs of up to 8192 tokens. They are then fine-tuned with a multi-task learning objective, which sets the approach apart from traditional models.

Multi-Task Learning and Fine-Tuning

Multi-task learning is a well-established way to improve model performance across several related tasks at once. The paper adopts a hard parameter-sharing strategy, in which the tasks share the same underlying model parameters, together with an unconventional approach to loss calculation. Rather than aggregating the losses from multiple tasks, training selects a single task in each iteration and computes only that task's loss, so each update focuses on the challenges of one task. According to the paper, this improves the models' handling of STS and retrieval tasks.
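The one-task-per-iteration strategy described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration rather than the paper's implementation: the shared parameters are a plain list of floats, each task is a toy quadratic loss standing in for a real STS or retrieval objective, and the task is picked uniformly at random. The names `make_quadratic_task` and `train` are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

# A task is represented only by its gradient with respect to the SHARED parameters.
GradFn = Callable[[List[float]], List[float]]


def make_quadratic_task(target: List[float]) -> GradFn:
    """Toy stand-in for a task loss: L(w) = sum((w_i - target_i) ** 2)."""
    def grad(w: List[float]) -> List[float]:
        return [2.0 * (wi - ti) for wi, ti in zip(w, target)]
    return grad


def train(
    tasks: Dict[str, GradFn],
    w: List[float],
    steps: int,
    lr: float = 0.05,
    seed: int = 0,
) -> Tuple[List[float], Dict[str, int]]:
    """Update one shared parameter vector, using a single task per step."""
    rng = random.Random(seed)
    names = sorted(tasks)
    counts = {name: 0 for name in names}
    w = list(w)  # copy so the caller's list is not mutated
    for _ in range(steps):
        name = rng.choice(names)   # select ONE task for this iteration (assumed uniform)
        g = tasks[name](w)         # only this task's loss contributes to the update
        w = [wi - lr * gi for wi, gi in zip(w, g)]
        counts[name] += 1
    return w, counts


# Hypothetical tasks that pull the shared parameters in different directions.
tasks = {
    "sts": make_quadratic_task([1.0, 0.0]),
    "retrieval": make_quadratic_task([0.0, 1.0]),
}
final_w, counts = train(tasks, [0.0, 0.0], steps=200)
print(final_w, counts)
```

Because every step moves the same parameter vector, the result settles between the two task optima instead of fitting either task alone. An aggregated-loss variant would instead sum both gradients in every step.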

Evaluation and Benchmarks

The evaluation builds on the Massive Text Embedding Benchmark (MTEB), extended with benchmarks for German and Spanish. The bilingual models are reported to outperform their multilingual counterparts on these tasks, especially in cross-lingual retrieval settings. This indicates that the models handle bilingual embedding tasks effectively and that their narrower focus brings efficiency gains.
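To make the cross-lingual retrieval setting concrete, the following is a small sketch of how such a task can be scored: an English query embedding is compared against German document embeddings by cosine similarity, and the ranking is checked with recall@k. The vectors, document IDs, and helper names are invented for illustration. This is not MTEB's evaluation code, and the choice of recall@k as the metric is an assumption.

```python
import math
from typing import Dict, List, Set


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec: List[float], doc_vecs: Dict[str, List[float]]) -> List[str]:
    """Return document IDs sorted from most to least similar to the query."""
    return sorted(doc_vecs, key=lambda d: cosine(query_vec, doc_vecs[d]), reverse=True)


def recall_at_k(ranked: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top k results."""
    hits = sum(1 for doc_id in ranked[:k] if doc_id in relevant)
    return hits / len(relevant)


# Hypothetical embeddings: in practice these would come from the embedding model.
english_query = [1.0, 0.1]
german_docs = {
    "de_1": [0.9, 0.2],
    "de_2": [0.1, 1.0],
    "de_3": [0.7, 0.7],
}

ranking = rank_documents(english_query, german_docs)
score = recall_at_k(ranking, relevant={"de_1"}, k=1)
print(ranking, score)
```

A model with well-aligned English and German embedding spaces places the relevant German document near the English query, which is what the ranking step measures.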

Implications and Future Directions

The research has implications for the development of text embedding models, especially in contexts where precise and efficient language understanding is crucial. By showing that bilingual models can outperform multilingual alternatives, it points toward more focused and potentially more efficient NLP applications. The multi-task learning strategy also shows that several NLP tasks can improve together under one training procedure, which future research can build on. Open directions include further bilingual combinations and refined multi-task learning objectives tailored to specific aspects of language understanding and processing.

Conclusion

The bilingual text embedding models described in this paper are a meaningful step forward for natural language processing. By addressing the constraints of multilingual models and taking a more targeted approach, they offer a promising way to improve semantic similarity and retrieval tasks. Combined with multi-task learning, they provide a strong reference point for bilingual text processing and encourage further work in the field.