MTEB: Massive Text Embedding Benchmark

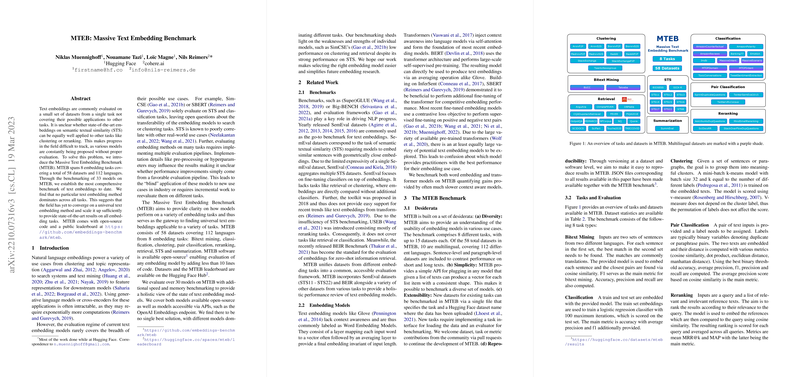

The paper "MTEB: Massive Text Embedding Benchmark" addresses the prevalent challenge in text embeddings evaluation. Typically, text embeddings are assessed on a limited set of datasets, often within a single task domain, such as Semantic Textual Similarity (STS). This narrow focus hinders a comprehensive understanding of these models' generalizability across various tasks. To address this issue, the authors introduce the Massive Text Embedding Benchmark (MTEB), an extensive benchmark comprising eight embedding tasks across 58 datasets and 112 languages, establishing the broadest evaluation framework for text embeddings yet.

Major Contributions

Diverse Task Evaluation

MTEB encompasses a diverse set of embedding tasks beyond the commonly evaluated STS and classification, including:

- Bitext Mining: Identification of parallel sentence pairs across different languages.

- Classification: Training classifiers on embedded text representations to categorize text.

- Clustering: Grouping text inputs into meaningful clusters via embedding similarities.

- Pair Classification: Determining binary labels (e.g., duplicates or paraphrases) for text pairs.

- Reranking: Ranking a list of reference texts against a given query.

- Retrieval: Identifying and ranking relevant documents from a corpus given a query.

- STS: Evaluating sentence pair similarities.

- Summarization: Assessing machine-generated summaries' quality against human-written references.

Comprehensive Dataset Coverage

MTEB's 58 datasets span various languages and text types (sentences, paragraphs, and documents), facilitating a robust assessment of text embeddings' applicability across multiple contexts. The datasets are meticulously curated to include both short and long texts, providing insights into model performance over different text lengths. This extensive dataset pool enables a clear comparison of embeddings across multilingual and monolingual scenarios.

Extensive Model Benchmarking

The authors benchmark 33 models, ranging from traditional word embeddings like GloVe and Komninos to state-of-the-art transformer-based models such as SimCSE, BERT, MiniLM, and SGPT. This wide array includes models both open-sourced and accessible via APIs, delivering a comprehensive performance map. Key findings indicate no single model consistently outperforms others across all tasks, underscoring the field’s progression toward a universal text embedding method.

Detailed Analysis

The analysis reveals significant variability in the models' performance across different tasks. Self-supervised methods trail behind supervised variants, necessitating fine-tuning for competitive performance. They also identify a strong correlation between model size and performance, with larger models generally excelling, albeit at a higher computational cost. Additionally, a notable distinction is observed between models optimized for STS tasks and those geared towards retrieval and search tasks, illustrating the "asymmetric" versus "symmetric" embedding dichotomy.

Multilingual Capabilities

MTEB includes 10 multilingual datasets, offering insight into how models handle cross-lingual tasks. LaBSE and LASER2 exhibit robust performance in bitext mining across multiple languages, although LASER2 shows high performance variance. SGPT-BLOOM-7.1B-msmarco, while tailored for multilingual retrieval, excels in languages seen in pre-training, yet struggles with those it has not encountered.

Practical Implications

Practically, MTEB aids in selecting the most suitable text embeddings for specific applications by providing clear performance metrics across diverse tasks. This allows researchers and practitioners to make informed decisions about model deployment based on their specific requirements, balancing between model performance and computational efficiency.

Future Directions

Looking forward, the authors see potential for expanding MTEB to include longer document datasets, additional multilingual datasets for underrepresented tasks like retrieval, and modalities beyond text, such as image content. They encourage contributions from the research community to further enhance MTEB’s scope and utility.

Conclusion

MTEB stands out as a significant step towards standardizing text embedding evaluations, offering a robust, multifaceted framework that moves beyond the traditional, narrow evaluation regimes. By open-sourcing MTEB and maintaining a public leaderboard, the authors have laid a foundation to fuel advancements in the development and application of text embeddings.

Researchers and practitioners in NLP will find MTEB to be a vital resource in benchmarking and selecting text embeddings suitable for various embedding tasks, contributing to more versatile and effective NLP systems.