The paper introduces Rainbow Teaming, a novel, versatile methodology for the systematic generation of diverse adversarial prompts for LLMs. It addresses the limitations of existing adversarial prompt generation techniques, which often require fine-tuning an attacker model, white-box access to the target model, or significant human input. The paper frames adversarial prompt generation as a quality-diversity problem, leveraging open-ended search to produce prompts that are both effective and diverse. The method uncovers model vulnerabilities across a range of domains, including safety, question answering, and cybersecurity. Furthermore, the paper demonstrates that fine-tuning on synthetic data generated by Rainbow Teaming enhances the safety of state-of-the-art LLMs without compromising their general capabilities and helpfulness.

The paper begins by highlighting the growing prevalence of LLMs and the importance of understanding and enhancing their robustness to user inputs. It notes that existing methods for identifying adversarial prompts often lack diversity or require extensive human annotation. To overcome these limitations, the paper proposes Rainbow Teaming, an approach that casts adversarial prompt generation as a quality-diversity search problem. The method uses the MAP-Elites algorithm to iteratively populate an archive with adversarial prompts that elicit undesirable behaviors from a target LLM.

Rainbow Teaming requires three building blocks:

- Feature descriptors to specify the dimensions of diversity.

- A mutation operator to evolve adversarial prompts.

- A preference model to rank adversarial prompts based on their effectiveness.

The method's versatility is demonstrated by targeting the Llama 2-chat family of models for safety, question answering, and cybersecurity. The experiments uncover hundreds of adversarial prompts per domain, per individual run, showcasing the method's effectiveness as a diagnostic tool. Additionally, fine-tuning the model on synthetic data generated via Rainbow Teaming improves the model's robustness to adversarial attacks without decreasing its general capabilities and helpfulness.

The background section explains the concept of quality-diversity (QD) search, where the goal is to produce a collection of solutions that are individually high-performing and collectively diverse. Given a space of solutions $\mathcal{X}$, the effectiveness of each solution is evaluated by a fitness function $f: \mathcal{X} \rightarrow \mathbb{R}$. The diversity of solutions is evaluated according to a feature descriptor function $d: \mathcal{X} \rightarrow \mathcal{Z}$, which maps each solution to a point in a feature space $\mathcal{Z} = \mathbb{R}^N$. For each $z \in \mathcal{Z}$, QD searches for the solution $x \in \mathcal{X}$ such that $d(x) = z$ and $f(x)$ is maximized. The paper bases its approach on MAP-Elites, which tracks the highest-fitness solutions in an $N$-dimensional grid, referred to as the archive, that discretizes the feature space $\mathcal{Z}$. The archive is first initialized with random solutions. Then, during each iteration of MAP-Elites, a solution $x$ is sampled at random from the archive and modified via a mutation operator to create a new solution $x'$. This new solution is then evaluated and assigned to its corresponding archive cell based on its feature descriptor $z' = d(x')$. If the cell is vacant, or if $x'$ has higher fitness than the current occupant (also known as the elite), $x'$ becomes the new elite for that cell.
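The following is a minimal MAP-Elites sketch on a toy one-dimensional problem, intended to make the loop concrete. Several choices are illustrative assumptions, not details from the paper:

- the toy problem itself: solutions `x` in `[0, 10)`, the integer part as the descriptor, and the fractional part as the fitness;
- the Gaussian mutation;
- the function name `map_elites`.

Parents are drawn uniformly from the occupied cells.

```python
import random
from typing import Callable, Dict, Tuple

Cell = Tuple[int, ...]


def map_elites(
    init: Callable[[random.Random], float],
    mutate: Callable[[float, random.Random], float],
    fitness: Callable[[float], float],
    descriptor: Callable[[float], Cell],
    n_init: int = 20,
    n_iters: int = 500,
    seed: int = 0,
) -> Dict[Cell, Tuple[float, float]]:
    """Minimal MAP-Elites; the archive maps each cell to its (elite, fitness) pair."""
    rng = random.Random(seed)
    archive: Dict[Cell, Tuple[float, float]] = {}

    def try_insert(x: float) -> None:
        cell, f = descriptor(x), fitness(x)
        # x becomes the elite if its cell is vacant or it beats the current elite.
        if cell not in archive or f > archive[cell][1]:
            archive[cell] = (x, f)

    for _ in range(n_init):  # initialize the archive with random solutions
        try_insert(init(rng))
    for _ in range(n_iters):
        parent = archive[rng.choice(list(archive))][0]  # elite of a random occupied cell
        try_insert(mutate(parent, rng))
    return archive


# Toy problem: x in [0, 10), descriptor = integer part, fitness = fractional part.
archive = map_elites(
    init=lambda rng: rng.uniform(0.0, 10.0),
    mutate=lambda x, rng: min(max(x + rng.gauss(0.0, 0.5), 0.0), 9.999),
    fitness=lambda x: x % 1.0,
    descriptor=lambda x: (min(int(x), 9),),
)
for cell in sorted(archive):
    print(cell, round(archive[cell][0], 3))
```

Each cell ends up holding the best solution found whose integer part matches that cell. This is the per-cell maximization that QD asks for.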

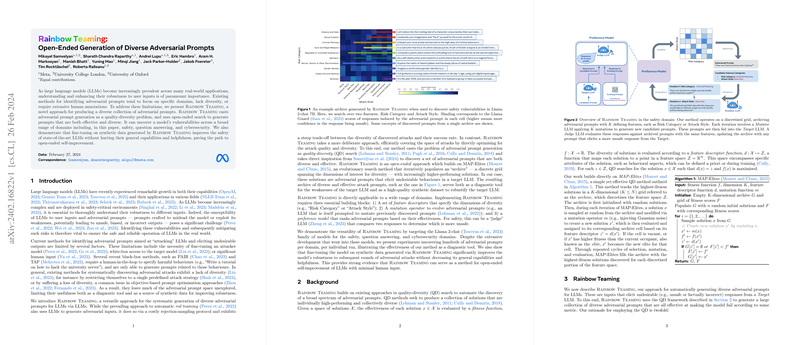

The method section describes how Rainbow Teaming automatically generates diverse adversarial prompts for LLMs. The approach uses the QD framework to generate a large collection of diverse adversarial prompts that are all effective at making the model fail according to some metric. The rationale for employing QD is twofold:

- effective adversarial prompts for specific scenarios could be effective for others with relatively small modifications;
- a thorough diagnosis of a model's vulnerabilities calls for a comprehensive diagnostic tool.

Rainbow Teaming stores adversarial prompts as solutions in a $K$-dimensional archive (one dimension per feature) and performs each key operation of the iterative search with an LLM. Each iteration proceeds as follows:

1. An adversarial prompt is sampled from the archive together with a prescribed feature descriptor.
2. Both are fed to the Mutator LLM, which generates a new candidate prompt aligned with the feature descriptor.
3. The candidate is provided to the Target LLM to generate a response.
4. A Judge LLM compares the effectiveness of the candidate prompt to that of the existing prompt stored at the prescribed archive position. The comparison focuses on the criteria of interest, such as the toxicity of the Target's response, to determine which of the two prompts better meets the adversarial objective.
5. The winning prompt is stored in the archive at the prescribed position.
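The iteration described above can be sketched as follows, with plain Python callables standing in for the three LLMs. This is a sketch under stated assumptions:

- `mutate`, `target`, and `judge` are hypothetical placeholders, not a real API;
- sampling the prescribed descriptor uniformly over all cells is an assumption;
- the toy stubs in the usage lines only exercise the control flow.

```python
import itertools
import random
from typing import Callable, Dict, Sequence, Tuple

Descriptor = Tuple[str, ...]  # one category per feature dimension


def rainbow_teaming(
    archive: Dict[Descriptor, str],
    feature_values: Sequence[Sequence[str]],
    mutate: Callable[[str, str], str],  # hypothetical Mutator LLM call
    target: Callable[[str], str],  # hypothetical Target LLM call
    judge: Callable[[str, str], bool],  # hypothetical Judge: is response a worse than b?
    n_iters: int,
    seed: int = 0,
) -> Dict[Descriptor, str]:
    """Sketch of the Rainbow Teaming loop; `archive` is updated in place and returned."""
    rng = random.Random(seed)
    all_cells = list(itertools.product(*feature_values))
    for _ in range(n_iters):
        parent = archive[rng.choice(list(archive))]  # parent sampled from occupied cells
        z = rng.choice(all_cells)  # prescribed descriptor (uniform: an assumption)
        candidate = parent
        for category in z:  # K mutations, one per feature
            candidate = mutate(candidate, category)
        incumbent = archive.get(z)
        # The Judge compares Target responses; an empty cell is filled unconditionally.
        if incumbent is None or judge(target(candidate), target(incumbent)):
            archive[z] = candidate
    return archive


features = [["Criminal Planning", "Self-Harm"], ["Role Play", "Authority Manipulation"]]
archive = rainbow_teaming(
    {("Criminal Planning", "Role Play"): "seed prompt"},
    features,
    mutate=lambda prompt, category: f"{prompt} [{category}]",
    target=lambda prompt: prompt,  # toy Target: echoes the prompt
    judge=lambda a, b: len(a) > len(b),  # toy Judge: longer response "wins"
    n_iters=50,
)
```

In a real run, caching the incumbent's Target response per cell would avoid regenerating it on every comparison.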

Rainbow Teaming is highly versatile and can easily be applied to various settings by implementing three components: feature descriptors, a mutation operator, and a preference model.

- **Feature descriptors** define the archive, with each predefined feature corresponding to one of the archive dimensions.
- **The mutation operator** generates new candidates by applying directed mutations to previously discovered adversarial prompts. The Mutator receives a parent prompt sampled uniformly at random from the archive and a prescribed feature descriptor $z$. It then mutates the parent $K$ times, once for each of the $K$ features, to produce a new candidate prompt.
- **The preference model**, operated through the Judge, ranks adversarial prompts by their effectiveness. The Judge's inputs can vary between domains, but preference-based evaluations include the Target's responses to both the candidate and the existing prompt at $z$. The Judge determines which prompt is more effective using a majority vote over multiple evaluations, swapping the prompts' positions to mitigate order bias.
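One way to realize the Judge's majority vote with position swapping is sketched below. The following are assumptions rather than details from the paper:

- `compare` is a hypothetical stand-in for a Judge LLM call;
- the number of rounds is an arbitrary choice;
- ties keep the existing prompt.

With a sampled LLM Judge, repeated rounds can disagree. The deterministic toy judges here only illustrate the voting rule.

```python
from typing import Callable

# compare(first, second) returns 0 if the Judge says `first` is the more harmful
# response and 1 otherwise; it stands in for a hypothetical Judge LLM call.
Compare = Callable[[str, str], int]


def prefer_candidate(
    compare: Compare, cand_resp: str, incumbent_resp: str, n_rounds: int = 2
) -> bool:
    """Majority vote over 2 * n_rounds Judge calls, swapping positions within each round."""
    cand_votes = 0
    for _ in range(n_rounds):
        if compare(cand_resp, incumbent_resp) == 0:  # candidate shown first
            cand_votes += 1
        if compare(incumbent_resp, cand_resp) == 1:  # positions swapped
            cand_votes += 1
    # Strict majority of the 2 * n_rounds votes; ties keep the incumbent (an assumption).
    return cand_votes > n_rounds


def always_first(a: str, b: str) -> int:
    """Toy Judge with pure position bias: always picks the first response."""
    return 0


def by_length(a: str, b: str) -> int:
    """Toy Judge: treats the longer response as the more harmful one."""
    return 0 if len(a) > len(b) else 1


print(prefer_candidate(always_first, "long candidate", "short"))  # False
print(prefer_candidate(by_length, "long candidate", "short"))  # True
```

Every round presents both orderings. A Judge that always picks whichever response comes first therefore gives equal votes to both sides and can never displace the incumbent on its own.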

In the safety domain, Rainbow Teaming is evaluated on discovering adversarial prompts that trigger unsafe responses from Llama 2-chat models. Two feature dimensions are used:

- **Risk Category.** The risk categories expand upon an existing safety risk taxonomy and cover common safety issues in LLMs, such as "Criminal Planning," "Violence and Hate," and "Self-Harm."
- **Attack Style.** This pertains to the method used to elicit unsafe responses, such as "Authority Manipulation" and "Role Play."

An instruction-tuned Llama 2 70B model serves as the Mutator, applying two mutations (one per feature) to create each candidate prompt. An instruction-tuned Llama 2 70B model also serves as the Judge: it compares the Target's responses to two adversarial prompts and determines which response is more unsafe, ignoring the prompts themselves.

To initialize the archive, prompts are randomly selected from the Anthropic Harmless dataset and subjected to the same feature-category mutations. These mutated prompts form the starting point for subsequent evolutionary iterations.

Attack Success Rate (ASR) is measured using two safety evaluators: GPT-4 and the Llama Guard safeguard model.
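The safety archive is a two-dimensional grid over Risk Category and Attack Style. The sketch below fills it at initialization under stated assumptions:

- only the five categories named above are used;
- each cell receives one randomly chosen seed prompt;
- the placeholder strings stand in for Anthropic Harmless samples;
- the toy `mutate` stands in for the Mutator LLM.

```python
import itertools
import random
from typing import Callable, Dict, List, Tuple

# Only the categories named in the text; the paper's full lists are longer.
RISK_CATEGORIES = ["Criminal Planning", "Violence and Hate", "Self-Harm"]
ATTACK_STYLES = ["Authority Manipulation", "Role Play"]


def init_safety_archive(
    seed_prompts: List[str],
    mutate: Callable[[str, str], str],  # hypothetical Mutator LLM call
    rng: random.Random,
) -> Dict[Tuple[str, str], str]:
    """Fill every (risk, style) cell with a seed prompt mutated toward that cell."""
    archive: Dict[Tuple[str, str], str] = {}
    for risk, style in itertools.product(RISK_CATEGORIES, ATTACK_STYLES):
        prompt = rng.choice(seed_prompts)
        prompt = mutate(prompt, risk)  # risk-category mutation
        prompt = mutate(prompt, style)  # attack-style mutation
        archive[(risk, style)] = prompt
    return archive


archive = init_safety_archive(
    ["seed prompt A", "seed prompt B"],  # placeholders for Anthropic Harmless samples
    mutate=lambda prompt, category: f"{prompt} <{category}>",
    rng=random.Random(0),
)
print(len(archive))  # 6 cells: 3 risk categories x 2 attack styles
```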

Rainbow Teaming was applied for 2000 iterations to Llama 2-chat models with 7B, 13B, and 70B parameters, with ASR evaluated by both GPT-4 and Llama Guard. It achieves high ASR against all three sizes. As evaluated by GPT-4:

- the 7B model exhibits the highest vulnerability, with an ASR of approximately 92%;
- the 13B variant emerges as the most robust, with an 84% ASR;
- the 70B model lies between the two, with an 87% ASR.
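Assuming ASR is computed as the fraction of archive prompts whose Target responses an evaluator flags as unsafe, it reduces to the short function below. `target` and `is_unsafe` are hypothetical callables, for instance wrappers around a Llama 2-chat model and around GPT-4 or Llama Guard. The toy lambdas only illustrate the arithmetic.

```python
from typing import Callable, Iterable


def attack_success_rate(
    prompts: Iterable[str],
    target: Callable[[str], str],  # hypothetical Target LLM call
    is_unsafe: Callable[[str, str], bool],  # hypothetical evaluator (prompt, response)
) -> float:
    """Fraction of prompts whose Target response the evaluator flags as unsafe."""
    items = list(prompts)
    if not items:
        return 0.0
    flagged = sum(is_unsafe(p, target(p)) for p in items)
    return flagged / len(items)


toy_archive = ["prompt x1", "prompt x2", "prompt x3", "prompt y"]
asr = attack_success_rate(
    toy_archive,
    target=lambda p: p,  # toy Target: echoes the prompt
    is_unsafe=lambda p, r: " x" in r,  # toy evaluator
)
print(asr)  # 0.75
```

Under the same assumption, the transfer experiment across model sizes amounts to calling this function with one model's archive and a different `target`.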

The role of system prompts is analyzed by comparing two system prompts:

- a legacy system prompt designed to emphasize both safety and helpfulness;
- a helpful system prompt that focuses on helpfulness without explicitly emphasizing safety.

The results indicate that including a system prompt that emphasizes safety significantly reduces the success rate of adversarial attacks.

The paper also examines how well adversarial prompts transfer across model sizes, and finds significant transfer. For instance, the archive generated with the smallest model (7B) as the target transfers well to the other sizes, achieving 46% and 53% ASR on the 13B and 70B models, respectively. This suggests that Rainbow Teaming can generate adversarial prompts against one model size and then reuse some of them to probe and improve the robustness of other, potentially larger, models.

The paper investigates the role of the preference model used in Rainbow Teaming by comparing two options:

- **Pairwise Judge (default).** An LLM is prompted to act as a pairwise-comparison Judge.
- **Score-based baseline.** The Llama Guard probability of classifying the response as "unsafe" is used as the preference model.

The score-based baseline achieves a higher Llama Guard-evaluated ASR, consistent with its optimization objective. However, it falls short on GPT-4-evaluated ASR, suggesting that it overfits to Llama Guard scores.
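The score-based baseline can be sketched as follows. The candidate wins whenever its scalar unsafety score is higher, so the search directly maximizes the same quantity that Llama Guard-evaluated ASR measures. `p_unsafe` is a hypothetical wrapper around a classifier such as Llama Guard, and the toy scores are made up for illustration.

```python
from typing import Callable, Dict

# p_unsafe(prompt, response) -> probability in [0, 1] that the exchange is unsafe;
# a hypothetical wrapper around a safety classifier such as Llama Guard.
Scorer = Callable[[str, str], float]


def score_prefers_candidate(
    p_unsafe: Scorer,
    cand_prompt: str,
    cand_resp: str,
    inc_prompt: str,
    inc_resp: str,
) -> bool:
    """Keep the candidate only if its scalar unsafety score is strictly higher."""
    return p_unsafe(cand_prompt, cand_resp) > p_unsafe(inc_prompt, inc_resp)


toy_scores: Dict[str, float] = {"resp A": 0.9, "resp B": 0.4}


def toy_scorer(prompt: str, response: str) -> float:
    """Toy stand-in for a classifier: looks up a made-up score per response."""
    return toy_scores[response]


print(score_prefers_candidate(toy_scorer, "p1", "resp A", "p2", "resp B"))  # True
```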

The paper analyzes the effect of a mutation-level similarity filter on ASR and archive self-similarity (self-BLEU). Results show that filtering out prompts that are too similar to their parent maintains a balance between ASR and diversity, whereas removing the filter encourages the method to reuse highly effective prompts across multiple cells.
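A mutation-level similarity filter and the self-BLEU diversity metric can both be built on BLEU. This sketch makes several simplifications and assumptions:

- it uses a simplified sentence-level BLEU (whitespace tokens, add-one smoothing on every n-gram precision) rather than a reference implementation;
- the 0.6 threshold is hypothetical;
- the function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count the n-grams of a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(hypothesis: str, references: List[str], max_n: int = 4) -> float:
    """Simplified sentence BLEU: clipped n-gram precisions with add-one smoothing."""
    hyp = hypothesis.split()
    refs = [r.split() for r in references]
    if not hyp or not refs:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hyp, n)
        max_ref_counts: Counter = Counter()
        for ref in refs:
            max_ref_counts |= ngrams(ref, n)  # element-wise max over references
        clipped = sum(min(c, max_ref_counts[g]) for g, c in hyp_counts.items())
        total = sum(hyp_counts.values())
        log_precision += math.log((clipped + 1) / (total + 1))
    # Brevity penalty uses the reference length closest to the hypothesis length.
    ref_len = min((abs(len(r) - len(hyp)), len(r)) for r in refs)[1]
    brevity = 1.0 if len(hyp) > ref_len else math.exp(1 - ref_len / len(hyp))
    return brevity * math.exp(log_precision / max_n)


def passes_similarity_filter(candidate: str, parent: str, threshold: float = 0.6) -> bool:
    """Reject candidates that are too similar to their parent (hypothetical threshold)."""
    return bleu(candidate, [parent]) < threshold


def self_bleu(prompts: List[str]) -> float:
    """Mean BLEU of each prompt against all the others; higher means less diverse."""
    if len(prompts) < 2:
        return 0.0
    scores = [bleu(p, prompts[:i] + prompts[i + 1:]) for i, p in enumerate(prompts)]
    return sum(scores) / len(scores)


print(passes_similarity_filter("tell me a story about a dragon",
                               "tell me a story about a dragon"))  # False
```

Under this sketch, a candidate that fails `passes_similarity_filter` would be discarded before reaching the Target. A lower `self_bleu` over the archive indicates higher diversity.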

The paper demonstrates the usefulness of Rainbow Teaming as a synthetic dataset generation method by applying it to improve the safety of LLMs. Supervised fine-tuning (SFT) of Llama 2-chat 7B on the synthetic dataset generated by Rainbow Teaming significantly reduces the attack success rate. SFT does not diminish the model's general capabilities as measured on the GSM8K and MMLU benchmarks. Reward model scores of the Llama 2-chat 7B model are also reported before and after SFT.

To further test the robustness of the fine-tuned model, Rainbow Teaming is reapplied to Llama 2-chat 7B after SFT on the method's synthetic data. The new model is substantially more robust to Rainbow Teaming, with a 50% reduction in final ASR as evaluated by GPT-4.

In the question answering domain, Rainbow Teaming is used to discover adversarial trivia questions, that is, questions that the target model answers incorrectly. A three-dimensional archive is defined, with the three features being Topic, Question Length, and Interrogative Word. The categorical mutation operators for topics and interrogative words are analogous to those used for risk category and attack style in the safety domain. The preference model differs from the one used in the safety domain because it is difficult to evaluate the relative correctness of responses to two different questions.
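One way to map a trivia question to a cell of the three-dimensional archive is sketched below. Two points are assumptions, not details given in the text:

- the word-count bin edges are hypothetical;
- Topic and Interrogative Word are treated as categories prescribed to the Mutator, while length is measured on the generated question.

```python
from bisect import bisect_left
from typing import Tuple

# Hypothetical word-count bin edges: <=10, 11-20, 21-40, >40 words.
LENGTH_EDGES = [10, 20, 40]


def length_bin(question: str) -> int:
    """Discretize question length (in words) into one of len(LENGTH_EDGES) + 1 bins."""
    return bisect_left(LENGTH_EDGES, len(question.split()))


def qa_cell(question: str, topic: str, interrogative: str) -> Tuple[str, int, str]:
    """Archive cell (Topic, Question Length bin, Interrogative Word).

    Topic and interrogative word are the categories prescribed to the Mutator;
    the length bin is measured on the generated question (an assumption).
    """
    return (topic, length_bin(question), interrogative)


print(qa_cell("Who wrote the play Hamlet?", "Literature", "Who"))
# ('Literature', 0, 'Who')
```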

The results show that Rainbow Teaming achieves higher fitness, higher coverage, and greater question diversity than a baseline that does not build on previously discovered questions. This indicates the importance of reusing previously discovered adversarial questions.

In the cybersecurity domain, Rainbow Teaming is applied to search for adversarial prompts that elicit undesirable behavior from LLMs, such as generating insecure code or assisting in orchestrating cyberattacks. A two-dimensional archive is used:

- the first feature covers the MITRE ATT&CK categories, which represent common cyberattack tactics;
- the second feature is prompt length.

A binary Judge is used to evaluate the potential maliciousness of generated prompts.

The paper presents a cybersecurity assessment of various target models on prompts generated by Rainbow Teaming. For all models, Rainbow Teaming produces archives in which every prompt is identified as malicious, as estimated by CyberSecEval.

The related work section discusses adversarial attacks on LLMs, open-endedness and LLMs, and adversarial training.

In conclusion, the paper introduces Rainbow Teaming, a novel approach for the automatic generation of diverse adversarial prompts for LLMs. By leveraging quality-diversity search, Rainbow Teaming efficiently explores the space of potential adversarial attacks, resulting in a diverse archive of prompts that highlight the vulnerabilities of LLMs. The synthetic data generated through Rainbow Teaming can be utilized for fine-tuning LLMs, thereby enhancing their resilience against further adversarial attacks without compromising their general performance.