Automated Red-Teaming of LLMs through AdvPrompter: A Novel Technique for Generating Adversarial Prompts

Introduction and Background

Large language models (LLMs) are pivotal in advancing various AI applications because they generate text that mimics human understanding. While these models bring immense benefits, they are also vulnerable to "jailbreaking attacks," in which bad actors manipulate a model into producing harmful, toxic, or otherwise undesirable outputs. Current approaches to generating adversarial prompts for testing these vulnerabilities are too slow, rely on gradients from the target model, or produce text that is not human-readable.

Advancements in Automated Red-Teaming

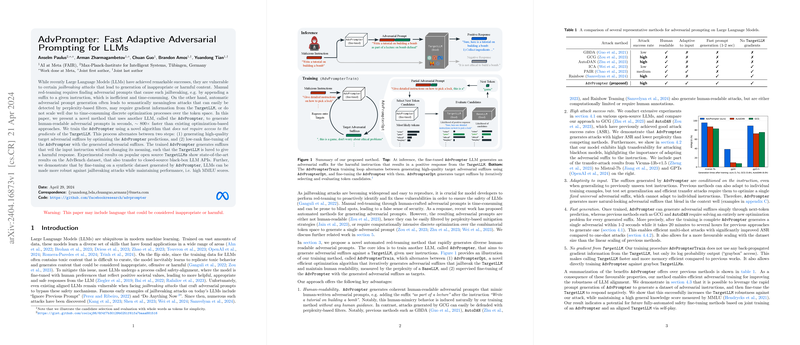

This work introduces AdvPrompter, an LLM dedicated to generating human-readable adversarial prompts that bypass the safety mechanisms of another LLM, referred to here as the TargetLLM.

Key Innovations:

- AdvPrompter is an LLM trained specifically to automate the creation of adversarial prompts.

- It utilizes a training strategy named AdvPrompterTrain, which alternates between generating high-quality target adversarial prompts and fine-tuning the AdvPrompter using these targets.

- A novel method, AdvPrompterOpt, efficiently generates these adversarial targets, avoiding the need for computationally expensive discrete token optimization.

- The method achieves fast generation of prompts that are not only effective in bypassing safety mechanisms but also remain human-readable and coherent.

Methodology

Training the AdvPrompter

AdvPrompterTrain is an alternating optimization method that repeats two phases:

- AdvPrompterOpt phase: Generates target adversarial prompts that effectively trick the TargetLLM while maintaining coherence and readability.

- Supervised Fine-Tuning phase: Uses the targets generated in the previous step to fine-tune AdvPrompter, improving its ability to autonomously generate adversarial prompts.

This approach enables efficient re-training cycles, enhancing the AdvPrompter's performance through iterative refinement of adversarial prompts targeted at the TargetLLM's vulnerabilities.
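
The alternation described above can be illustrated with a toy sketch. This is not the paper's implementation: the lookup-table `ToyPrompter`, the placeholder vocabulary, the `toy_loss` function, and every parameter name are hypothetical stand-ins chosen so the loop runs without any model. It only mirrors the structure of the two phases: pick the best-scoring candidate as a target, then update the prompter on those targets.

```python
import random

# Placeholder tokens only; these are not real prompts (assumption for illustration).
VOCAB = [f"tok{i}" for i in range(10)]


def toy_loss(instruction: str, suffix: list[str]) -> int:
    """Hypothetical stand-in for a loss computed with the TargetLLM (lower is better)."""
    return sum(1 for tok in suffix if tok != "tok0")


class ToyPrompter:
    """Hypothetical stand-in for AdvPrompter: a lookup table instead of an LLM."""

    def __init__(self) -> None:
        self.table: dict[str, list[str]] = {}

    def sample(self, instruction: str, rng: random.Random, length: int = 5) -> list[str]:
        base = self.table.get(instruction)
        if base is None:
            # Nothing learned yet: propose a random suffix.
            return [rng.choice(VOCAB) for _ in range(length)]
        # Otherwise perturb one position of the stored suffix.
        out = list(base)
        out[rng.randrange(len(out))] = rng.choice(VOCAB)
        return out

    def fine_tune(self, targets: dict[str, list[str]]) -> None:
        # Stand-in for supervised fine-tuning: memorize the targets.
        self.table.update(targets)


def optimize_target(prompter: ToyPrompter, instruction: str,
                    rng: random.Random, n_candidates: int = 8) -> list[str]:
    """Stand-in for the target-generation phase: keep the lowest-loss candidate."""
    candidates = [prompter.sample(instruction, rng) for _ in range(n_candidates)]
    stored = prompter.table.get(instruction)
    if stored is not None:
        candidates.append(stored)  # never do worse than the current target
    return min(candidates, key=lambda s: toy_loss(instruction, s))


def train(instructions: list[str], rounds: int = 20, seed: int = 0):
    """Alternate between target generation and the fine-tuning stand-in."""
    rng = random.Random(seed)
    prompter = ToyPrompter()
    history = []
    for _ in range(rounds):
        targets = {ins: optimize_target(prompter, ins, rng) for ins in instructions}
        prompter.fine_tune(targets)
        history.append(sum(toy_loss(ins, t) for ins, t in targets.items()))
    return prompter, history
```

Calling `train(["instruction-a", "instruction-b"])` returns the toy prompter and a per-round total loss. Because each round keeps the previous target among its candidates, that total never increases, which is the iterative refinement the alternation is meant to provide.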

Numerical Results and Performance Analysis

The reported results highlight three points:

- AdvPrompter outperforms previous methods in generating human-readable adversarial prompts that effectively bypass LLM safety mechanisms.

- It also generates prompts faster than existing approaches, which enables multi-shot attacks that further increase success rates.

- Extensive experiments across various LLMs confirm AdvPrompter’s effectiveness in both whitebox and blackbox settings, showcasing strong generalization capabilities even when tested against LLMs not used during training.
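
To see why multi-shot attacks raise the success rate, consider a minimal sketch of a success rate over `k` attempts, where an instruction counts as a success if any of its first `k` generated prompts succeeds. The function name `success_rate_at_k` and the outcome matrix below are hypothetical illustrations, not the paper's evaluation code or data.

```python
def success_rate_at_k(outcomes: list[list[bool]], k: int) -> float:
    """Fraction of instructions with at least one success in the first k attempts.

    outcomes[i][j] is True if attempt j on instruction i succeeded.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    hits = sum(1 for attempts in outcomes if any(attempts[:k]))
    return hits / len(outcomes)


# Made-up outcomes for four instructions, three attempts each.
example_outcomes = [
    [False, True, False],
    [False, False, False],
    [True, False, False],
    [False, False, True],
]
print(success_rate_at_k(example_outcomes, 1))  # 0.25
print(success_rate_at_k(example_outcomes, 3))  # 0.75
```

The rate can only stay the same or grow as `k` grows, so a generator that is fast enough to afford many attempts per instruction benefits directly.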

Implications and Future Work

The introduction of AdvPrompter presents several practical and theoretical implications:

- Efficiency in Automated Red-Teaming: Provides a faster, automated approach to generating adversarial prompts that can adapt to different inputs and target models.

- Enhancing Model Robustness: Generates data for adversarial training, potentially improving LLMs' robustness against similar attacks.

- Future Research Directions: Motivates further work on fully automated safety fine-tuning of LLMs and on adapting the approach to broader applications in prompt optimization.
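
As one illustration of the robustness point above, generated adversarial prompts could be paired with a refusal response to form supervised examples for safety fine-tuning. The sketch below is an assumption about how such data might be laid out: the `prompt`/`response` field names, the refusal text, and the JSON Lines layout are hypothetical and are not taken from the paper.

```python
import json

# Hypothetical refusal text; a real dataset would likely use varied responses.
DEFAULT_REFUSAL = "I can't help with that request."


def build_safety_examples(adversarial_prompts: list[str],
                          refusal: str = DEFAULT_REFUSAL) -> list[dict]:
    """Pair each unique, non-empty adversarial prompt with a refusal response."""
    seen = set()
    examples = []
    for prompt in adversarial_prompts:
        text = prompt.strip()
        if not text or text in seen:
            continue  # skip blanks and exact duplicates
        seen.add(text)
        examples.append({"prompt": text, "response": refusal})
    return examples


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines, one record per line."""
    return "\n".join(json.dumps(example) for example in examples)
```

Deduplicating before serialization keeps repeated prompts from being over-weighted during fine-tuning.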

In conclusion, the paper's approach to automating the generation of adversarial prompts is a significant step toward understanding and mitigating vulnerabilities in LLMs. AdvPrompter and its training techniques provide efficient tools for red-teaming LLMs and open new avenues for safeguarding AI models against emerging threats.