Analyzing the Impact of Sequence Composition on LLM Pre-Training

This paper addresses the often-overlooked issue of sequence composition during LLM pre-training, focusing on how causal masking and document packing strategies affect model performance. Many LLMs concatenate documents into fixed-length sequences and apply causal masking for efficiency, yet the influence of this approach on generalization remains underexplored. The authors show that this widely adopted strategy can inadvertently introduce distracting information, which hurts both language modeling and downstream task performance.

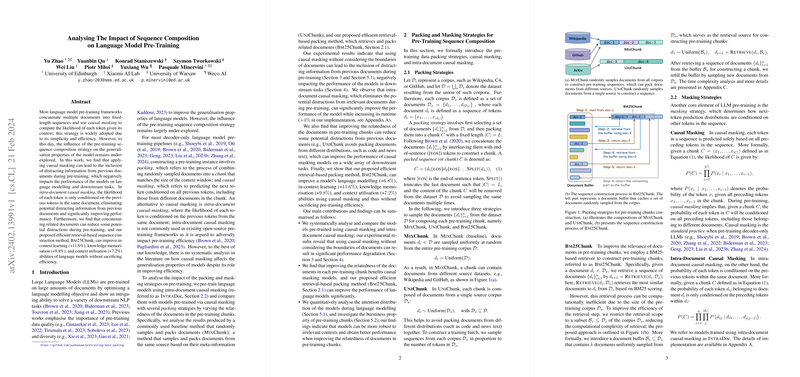

The authors propose intra-document causal masking to mitigate this distraction, where the likelihood of each token is conditioned only on previous tokens within the same document. This approach is contrasted with traditional causal masking, which conditions each token on all preceding tokens, irrespective of document boundaries. The results demonstrate that intra-document causal masking significantly enhances modeling performance but increases runtime by approximately 4%.
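The difference between the two masking schemes can be made concrete with a small sketch. The code below is an illustration under stated assumptions, not the authors' implementation: the function names and the `doc_ids` representation (one document index per token in the packed sequence) are hypothetical. It builds both masks as boolean matrices where entry `[i][j]` says whether token `i` may attend to token `j`.

```python
from typing import List


def causal_mask(seq_len: int) -> List[List[bool]]:
    """Standard causal mask: token i may attend to every token j <= i."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def intra_document_causal_mask(doc_ids: List[int]) -> List[List[bool]]:
    """Token i may attend to token j only if j <= i AND both tokens
    belong to the same document (hypothetical doc_ids layout)."""
    n = len(doc_ids)
    return [
        [j <= i and doc_ids[j] == doc_ids[i] for j in range(n)]
        for i in range(n)
    ]


# Hypothetical packed sequence: 3 tokens from document 0, then 2 from document 1.
doc_ids = [0, 0, 0, 1, 1]
full = causal_mask(len(doc_ids))
intra = intra_document_causal_mask(doc_ids)

# Under standard causal masking, the first token of document 1 (position 3)
# can see document 0; under intra-document masking it cannot.
print(full[3][0], intra[3][0])
```

In a real training setup such a mask would typically be passed to the attention layer as a tensor, and the extra cost of handling a block-diagonal pattern is one plausible source of the runtime overhead mentioned above.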

The paper further explores how relevant the documents within a pre-training sequence are to one another, comparing three packing strategies: Mix (random document sampling), Uni (documents from a single source), and Bm25 (retrieval-based packing of related documents). The Bm25 method, which uses efficient retrieval to construct more contextually coherent sequences, showed marked improvement across several model capabilities: in-context learning improved by up to 11.6%, knowledge memorization by 9.8%, and context utilization by 7.2%.
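To illustrate how the three strategies differ, here is a minimal sketch in pure Python. It is an assumption-laden toy, not the paper's pipeline: the helper names (`order_mix`, `order_uni`, `order_bm25`, `pack`), the `(source, tokens)` document layout, and the greedy "chain to the most similar unused document" rule are all hypothetical, and the scoring function is a small hand-written version of the standard BM25 formula rather than a retrieval library.

```python
import math
import random
from collections import Counter
from typing import Dict, List, Tuple

Doc = Tuple[str, List[str]]  # (source name, tokens); hypothetical layout


def order_mix(docs: List[Doc], seed: int = 0) -> List[Doc]:
    """Mix: shuffle documents from all sources together."""
    order = list(docs)
    random.Random(seed).shuffle(order)
    return order


def order_uni(docs: List[Doc], seed: int = 0) -> List[Doc]:
    """Uni: keep documents from the same source adjacent, shuffled within it."""
    by_source: Dict[str, List[Doc]] = {}
    for doc in docs:
        by_source.setdefault(doc[0], []).append(doc)
    rng = random.Random(seed)
    order: List[Doc] = []
    for source in sorted(by_source):
        group = by_source[source]
        rng.shuffle(group)
        order.extend(group)
    return order


def bm25_score(query, doc, df, n_docs, avg_len, k1=1.5, b=0.75) -> float:
    """Standard BM25 score of `doc` for the terms in `query`."""
    tf = Counter(doc)
    score = 0.0
    for term in set(query):
        if term not in tf:
            continue
        idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
        norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
        score += idf * tf[term] * (k1 + 1) / norm
    return score


def order_bm25(docs: List[Doc]) -> List[Doc]:
    """Assumed greedy chaining: follow each document with the unused
    document that scores highest against it under BM25."""
    if not docs:
        return []
    df = Counter(t for _, toks in docs for t in set(toks))
    avg_len = sum(len(toks) for _, toks in docs) / len(docs)
    remaining = list(docs)
    order = [remaining.pop(0)]
    while remaining:
        query = order[-1][1]
        best = max(
            remaining,
            key=lambda d: bm25_score(query, d[1], df, len(docs), avg_len),
        )
        remaining.remove(best)
        order.append(best)
    return order


def pack(order: List[Doc], seq_len: int) -> List[List[str]]:
    """Concatenate documents in order and cut into fixed-length chunks."""
    stream = [tok for _, toks in order for tok in toks]
    return [stream[i:i + seq_len] for i in range(0, len(stream), seq_len)]


docs = [
    ("wiki", "the cat sat on the mat".split()),
    ("code", "def add a b return a plus b".split()),
    ("wiki", "a cat and a dog sat together".split()),
    ("code", "def sub a b return a minus b".split()),
]
chunks = pack(order_bm25(docs), seq_len=8)
```

In this toy corpus the retrieval-based ordering places the two cat-related documents next to each other, so tokens that share a training sequence are more likely to come from related text. A quadratic greedy loop like this would not scale to a real corpus; it only shows the idea of building sequences from related documents.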

Quantitative analysis shows that when causal masking ignores document boundaries, irrelevant information from preceding documents is more likely to corrupt learning, leading to suboptimal task performance. Conversely, increasing the relatedness of documents within a sequence helps models focus on pertinent context and reduces this distraction.

The implications of this research are both practical and theoretical. From a practical perspective, these insights can inform the design of more efficient pre-training pipelines, potentially impacting how LLMs are developed and optimized. Theoretically, the paper raises questions about the relationship between sequence composition, context robustness, and model generalization capabilities, suggesting avenues for further investigation into the nuanced interplay between dataset structure and learning outcomes.

In summary, this paper elucidates the impact of sequence composition strategies on LLM pre-training. By proposing and validating intra-document causal masking and retrieval-based sequence construction, it provides a platform for more focused and efficient model training processes, prompting a reevaluation of standard pre-training practices in the landscape of LLMs. The paper encourages future research into optimizing context relevance in training data to enhance model understanding and execution across diverse tasks.