Fewer Truncations Improve Language Modeling: Introducing Best-fit Packing

Introduction to Best-fit Packing and Truncation Issues

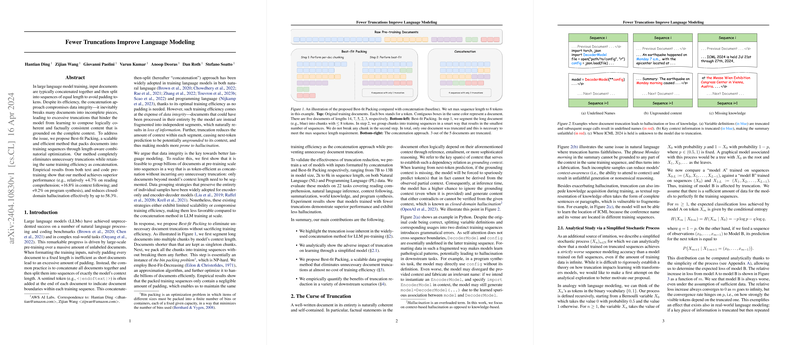

The prevalent approach to training LLMs concatenates input documents and then splits the resulting token stream into fixed-length sequences. Although efficient, this method truncates many documents and fragments content that is needed for coherence and factual consistency. To counteract this, the paper proposes a new approach called Best-fit Packing. It reframes sequence packing as a combinatorial optimization problem that can be solved efficiently at scale, while avoiding unnecessary truncations. Across the pre-training settings studied, it yields better downstream performance and less hallucination.
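To make the truncation problem concrete, here is a minimal sketch of concatenate-then-split. It assumes documents are represented only by their token counts and ignores separator tokens; the function name `concat_and_split` is hypothetical. It reports how many documents end up spread across more than one sequence.

```python
from typing import List, Tuple

Piece = Tuple[int, int]  # (doc_id, n_tokens) placed in one sequence


def concat_and_split(doc_lengths: List[int], max_len: int) -> Tuple[List[List[Piece]], int]:
    """Concatenate documents into one stream and cut it every max_len tokens.

    Returns the sequences and the number of documents split across
    more than one sequence (i.e. truncated).
    """
    sequences: List[List[Piece]] = [[]]
    space = max_len                      # free tokens in the current sequence
    pieces_per_doc = [0] * len(doc_lengths)
    for doc_id, length in enumerate(doc_lengths):
        remaining = length
        while remaining > 0:
            if space == 0:               # current sequence is full: start a new one
                sequences.append([])
                space = max_len
            take = min(remaining, space)
            sequences[-1].append((doc_id, take))
            pieces_per_doc[doc_id] += 1
            remaining -= take
            space -= take
    truncated = sum(1 for p in pieces_per_doc if p > 1)
    return sequences, truncated
```

For example, `concat_and_split([3, 3, 2], 4)` returns `[[(0, 3), (1, 1)], [(1, 2), (2, 2)]]` with one truncated document: document 1 has only 3 tokens and would fit in a single 4-token sequence, yet the fixed cut at the sequence boundary splits it.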

Best-fit Packing: A Methodological Advancement

Best-fit Packing first splits each document longer than the model's maximum sequence length into chunks that fit within that length. It then packs these chunks into training sequences without splitting any chunk further, so every document short enough to fit in one sequence stays intact. Packing is cast as a bin-packing problem and solved with an optimized Best-Fit Decreasing heuristic, which scales well and keeps training efficiency comparable to the concatenation method. The paper reports a 60% runtime improvement at the billion-document scale while achieving compactness on par with the traditional concatenation approach.
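The two steps above can be sketched as follows, under stated assumptions: documents are given as token counts, long documents are cut into `max_len`-sized pieces plus a remainder, and the "tightest open bin that still fits" lookup uses a segment tree over remaining capacities. The segment tree is our assumption about one way to make Best-Fit Decreasing scale, not a reproduction of the paper's optimized implementation; `split_into_chunks`, `CapacityTree` and `best_fit_pack` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Chunk = Tuple[int, int]  # (doc_id, n_tokens)


def split_into_chunks(doc_lengths: List[int], max_len: int) -> List[Chunk]:
    """Cut each document into pieces of at most max_len tokens."""
    chunks: List[Chunk] = []
    for doc_id, length in enumerate(doc_lengths):
        for start in range(0, length, max_len):
            chunks.append((doc_id, min(max_len, length - start)))
    return chunks


class CapacityTree:
    """Segment tree over capacities 0..max_len; leaf c counts open bins with c free tokens."""

    def __init__(self, max_len: int) -> None:
        self.size = 1
        while self.size < max_len + 1:
            self.size *= 2
        self.tree = [0] * (2 * self.size)

    def add(self, cap: int, delta: int) -> None:
        i = cap + self.size
        while i >= 1:                    # update the leaf and all its ancestors
            self.tree[i] += delta
            i //= 2

    def smallest_at_least(self, s: int) -> int:
        """Smallest capacity >= s held by some open bin, or -1 if none."""
        return self._find(1, 0, self.size - 1, s)

    def _find(self, node: int, lo: int, hi: int, s: int) -> int:
        if hi < s or self.tree[node] == 0:   # range too small or holds no bins
            return -1
        if lo == hi:
            return lo
        mid = (lo + hi) // 2
        left = self._find(2 * node, lo, mid, s)
        return left if left != -1 else self._find(2 * node + 1, mid + 1, hi, s)


def best_fit_pack(doc_lengths: List[int], max_len: int) -> List[List[Chunk]]:
    chunks = split_into_chunks(doc_lengths, max_len)
    chunks.sort(key=lambda c: c[1], reverse=True)    # "decreasing": largest chunks first
    bins: List[List[Chunk]] = []
    free: List[int] = []                             # remaining capacity of each bin
    by_cap: Dict[int, List[int]] = defaultdict(list)  # capacity -> ids of bins with it
    tree = CapacityTree(max_len)
    for chunk in chunks:
        size = chunk[1]
        cap = tree.smallest_at_least(size)
        if cap == -1:                    # nothing fits: open a new sequence
            b = len(bins)
            bins.append([])
            free.append(max_len)
        else:                            # best fit: the tightest bin that still fits
            b = by_cap[cap].pop()
            tree.add(cap, -1)
        bins[b].append(chunk)
        free[b] -= size
        by_cap[free[b]].append(b)
        tree.add(free[b], 1)
    return bins
```

With document lengths `[3, 3, 1, 1]` and `max_len=4`, `best_fit_pack` returns `[[(0, 3), (3, 1)], [(1, 3), (2, 1)]]`: two completely full sequences and no document split. Because remaining capacities are integers in `[0, max_len]`, indexing open bins by capacity keeps each lookup at O(log max_len) rather than a scan over every open bin.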

Empirical Validation and Performance Metrics

The empirical validation pre-trained models on both natural-language text and code, then evaluated them on reading comprehension, natural language inference, context following, and program synthesis. Key findings are:

- Performance Improvement: Relative gains of up to +16.8% on context-following tasks and +15.0% on program synthesis, consistent with the thesis that fewer truncations lead to better model performance.

- Reduction in Hallucination: Closed-domain hallucination reduced by up to 58.3%, which matters for tasks such as program synthesis where factual accuracy is paramount.

- Scalability and Efficiency: Demonstrated scalability to billions of documents while maintaining compactness and computational efficiency similar to the concatenation approach.
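One simple way to check a compactness claim like the one above is to compare the number of sequences a packing produces with the minimum any packing could use, ceil(total_tokens / max_len), which concatenate-then-split reaches by construction (ignoring separator tokens). The sketch below assumes a packing is a list of sequences of `(doc_id, n_tokens)` pieces; the metric and the name `packing_stats` are illustrative assumptions, not necessarily the paper's exact compactness measure.

```python
import math
from typing import List, Tuple


def packing_stats(bins: List[List[Tuple[int, int]]], max_len: int) -> Tuple[int, int, float]:
    """Return (#sequences, lower bound on #sequences, fraction of token slots filled)."""
    total = sum(n for b in bins for _, n in b)
    num_seqs = len(bins)
    # No packing can use fewer sequences than this; concatenation uses exactly this many.
    lower_bound = math.ceil(total / max_len)
    fill = total / (num_seqs * max_len) if num_seqs else 0.0
    return num_seqs, lower_bound, fill
```

For instance, `packing_stats([[(0, 3), (3, 1)], [(1, 3), (2, 1)]], 4)` returns `(2, 2, 1.0)`: the packing meets the lower bound with no padding, so it is as compact as concatenation on this input.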

Theoretical Insights and Analytical Validation

The paper also analyzes a simplified stochastic model of how truncation harms model accuracy. The analysis supports the empirical observations: training on truncated sequences leads to worse learning outcomes even when the amount of available data is not a constraint.

Future Directions in LLM Training

Best-fit Packing may set a precedent for LLM training methods that preserve document integrity without sacrificing efficiency. It also invites exploration of other data packing strategies and their integration into standard LLM training pipelines. Beyond base-model pre-training, the approach could also benefit task-specific fine-tuning.

Conclusion: Towards More Coherent and Less Hallucinatory LLMs

In summary, Best-fit Packing addresses a critical flaw in the traditional LLM training regimen: excessive document truncation. By mitigating it, the method improves logical coherence and factual consistency in model outputs. It supports existing findings on the importance of complete context during training and offers an efficient, scalable solution to a significant but previously overlooked problem.