Introducing ∞Bench: A New Benchmark for Evaluating Long-Context Processing in LLMs

Overview

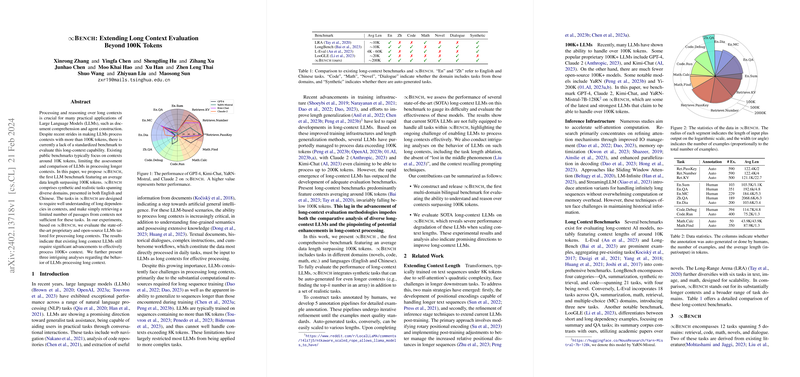

Rapid advances in LLMs have significantly improved their performance across a range of NLP tasks. Yet effectively processing and reasoning over contexts longer than 100K tokens remains a challenge, and comprehensive benchmarks for this capability have been lacking. The paper presents ∞Bench, the first benchmark whose average data length exceeds 100K tokens. It features a diverse set of tasks across multiple domains and languages, and is designed to test how well LLMs handle long-context information.

Existing Benchmarks and Their Limitations

Prior benchmarks have predominantly focused on contexts of around 10K tokens, which limits how well they can evaluate LLMs' ability to process and understand significantly longer texts. By comparison, ∞Bench stands out not only for its unprecedented average data length but also for its inclusion of tasks in both English and Chinese, spanning domains such as novels, code, mathematics, and dialogue, among others.

Evolving the Evaluation of Long-Context LLMs

∞Bench addresses the need for standardized evaluation of long-context processing by including tasks that demand more than simple retrieval. These tasks, some synthetic and some human-annotated, are designed to test the depth of LLMs' understanding and reasoning over complex, lengthy contexts. The benchmark aims to simulate real-world applications that require comprehensive understanding, such as document summarization, question answering, and code debugging, all over very long inputs.

Task Design and Implementation

The tasks in ∞Bench fall into two categories: realistic and synthetic. Realistic tasks draw from domains like novel-based reasoning and dialogue analysis, while synthetic tasks probe a model's ability to process artificial constructs, such as extended numerical retrieval and sequential computation. This blend of task types is meant to assess the long-context capabilities of LLMs under varied, demanding scenarios.
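To make the synthetic category concrete, here is a minimal Python sketch of a number-retrieval example: a random number is hidden at a random position inside repetitive filler text, and the model is asked to report it. The filler sentences, the prompt wording, and the names `make_number_retrieval_example` and `score` are illustrative assumptions, not the benchmark's actual generator or scoring code.

```python
import random

# Hypothetical filler text; the real benchmark's distractor text may differ.
FILLER = "The grass is green. The sky is blue. The sun is yellow. "


def make_number_retrieval_example(n_filler_repeats, seed=0):
    """Build one synthetic retrieval example (illustrative format only)."""
    rng = random.Random(seed)
    # A 10-digit number that the model must find and repeat.
    number = str(rng.randrange(10**9, 10**10))
    needle = f"The hidden number is {number}. Remember it. "

    # Insert the needle at a random position among the filler chunks.
    chunks = [FILLER] * n_filler_repeats
    position = rng.randrange(len(chunks) + 1)
    chunks.insert(position, needle)

    return {
        "context": "".join(chunks),
        "question": "What is the hidden number mentioned in the text above?",
        "answer": number,
    }


def score(prediction, answer):
    """Lenient scoring (an assumption): correct if the answer appears verbatim."""
    return answer in prediction
```

Raising `n_filler_repeats` lengthens the context without changing the question, which is what makes tasks of this kind convenient for probing a model at arbitrary lengths.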

Experimental Insights

Experiments on ∞Bench reveal the current state and limitations of SOTA LLMs in processing extended contexts. Performance degrades as context length increases, which points to the need for better methods to improve long-context understanding and processing efficiency. In addition, the findings from the length ablation and context recalling experiments suggest promising directions for future research and model improvement.
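A length ablation of this kind can be sketched as follows: the same examples are re-evaluated under several context budgets, and accuracy is reported for each budget. This is a sketch under stated assumptions rather than the paper's evaluation code: whitespace-separated words stand in for tokens, contexts are shortened by dropping the middle and keeping both ends, answers are scored by substring match, and `model_fn` is a hypothetical callable that takes a context and a question and returns a string.

```python
def truncate_middle(tokens, max_len):
    """Keep the first and last parts of a sequence, dropping the middle."""
    if max_len < 0:
        raise ValueError("max_len must be non-negative")
    if len(tokens) <= max_len:
        return list(tokens)
    head = max_len // 2
    tail = max_len - head
    # Slice from an explicit index so that tail == 0 yields an empty list.
    return list(tokens[:head]) + list(tokens[len(tokens) - tail:])


def length_ablation(examples, model_fn, budgets):
    """Return accuracy per context budget (budgets counted in words)."""
    results = {}
    for budget in budgets:
        correct = 0
        for ex in examples:
            words = ex["context"].split()
            context = " ".join(truncate_middle(words, budget))
            prediction = model_fn(context, ex["question"])
            # Substring match is an assumed, lenient scoring rule.
            correct += ex["answer"] in prediction
        results[budget] = correct / len(examples)
    return results
```

Plotting the returned accuracies against the budgets gives a simple view of how a model's performance changes with the amount of context it is allowed to see.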

The Road Ahead

The introduction of ∞Bench is an important step towards refining how LLMs are assessed on long contexts. The results from the benchmark's initial deployment highlight the need for continued innovation in model architecture and training methodologies. As LLMs evolve, the benchmarks used to evaluate them must evolve too, so that they remain relevant and challenging.

Acknowledging Constraints

While ∞Bench provides a novel approach to evaluating LLMs, its scope, like all benchmarks, is confined by the selection and design of its tasks. Future iterations may need to explore even longer contexts or diversify the task domains further to offer a more exhaustive assessment of LLM capabilities. Additionally, the precise impact of benchmark constraints and scoring criteria on model evaluation warrants careful consideration.

Ethical Considerations

Developing and deploying ∞Bench requires careful attention to ethical implications. Efforts to mitigate bias, misuse, and sensitive material in task content are essential, reflecting the balance between advancing AI capabilities and ensuring responsible development practices.

∞Bench represents a significant advancement in the field of LLM evaluation, offering a rigorous, comprehensive benchmark designed specifically for the assessment of long-context processing. The insights and challenges highlighted by this benchmark pave the way for future research and development aimed at unlocking the full potential of LLMs in understanding and reasoning over extensive texts.