Enhancing LLMs with Active Learning in Retrieval-Augmented Generation

Introduction to ActiveRAG

Retrieval-Augmented Generation (RAG) has become a standard way to equip LLMs for knowledge-intensive tasks. Traditional RAG models, however, treat the LLM as a passive recipient of retrieved passages, which limits how deeply it can assimilate and understand that external knowledge. This paper introduces ActiveRAG, a RAG framework that replaces passive knowledge acquisition with an active learning mechanism, where "active learning" refers to a learner actively building understanding, in the constructivist sense. The mechanism first builds a deeper understanding of external knowledge through the Knowledge Construction process, then combines that understanding with the model's intrinsic cognition through the Cognitive Nexus mechanism. In the reported experiments, ActiveRAG outperforms existing RAG models on knowledge-intensive question answering.

Overview of Related Work

RAG research aims to extend LLMs' capabilities by giving them access to external knowledge bases. These models are effective across many NLP tasks, yet they often struggle with noisy information in the retrieved passages. Prior work has tried to mitigate this through refined passage selection and summarization, but without addressing the passive role of the LLM in knowledge acquisition. This gap, the lack of active engagement by the LLM in comprehending and synthesizing knowledge, is the focus of our investigation.

Methodology of ActiveRAG

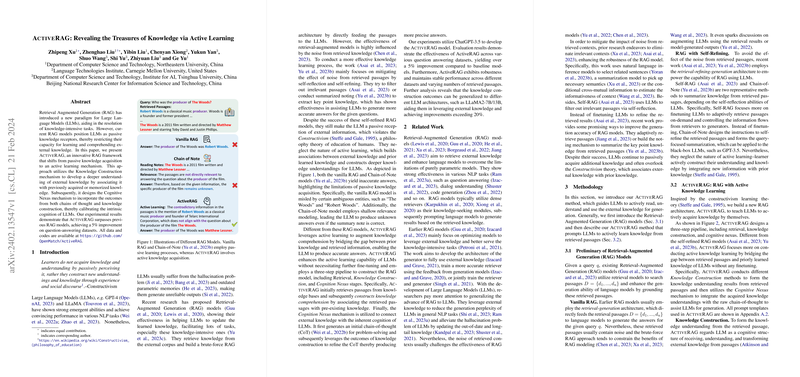

ActiveRAG turns the LLM from a passive receiver of external information into an active learner. It does so in three steps:

- Retrieval: Relevant passages are retrieved from a knowledge base. They form the raw material for the later steps.

- Knowledge Construction: The retrieved information is assimilated by four distinct agents, which focus on semantic association, epistemic anchoring, logical reasoning, and cognitive alignment respectively. Each agent contributes a different view, so together they build a multi-faceted understanding of the retrieved information.

- Cognitive Nexus: The constructed knowledge is integrated into the LLM's own reasoning process, so that the model can generate more accurate and contextually richer responses.

The methodology thus goes beyond passage retrieval: the LLM actively engages with and processes external knowledge, in line with constructivist principles of learning.
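The three steps can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not the authors' implementation: the `LLM` callable, the word-overlap `retrieve` function, the instruction strings in `AGENT_INSTRUCTIONS`, and the way `cognitive_nexus` merges all agent notes into one prompt are all hypothetical choices made here for clarity. Only the step names and the four agent names come from the description above.

```python
from typing import Callable, Dict, List

# Hypothetical interface: any function mapping a prompt string to a completion.
LLM = Callable[[str], str]

# The four Knowledge Construction agents named in the text. The instruction
# wording is illustrative only; it is NOT taken from the ActiveRAG prompts.
AGENT_INSTRUCTIONS: Dict[str, str] = {
    "semantic_association": "Relate the passages to concepts the question involves.",
    "epistemic_anchoring": "Identify background facts in the passages that the question needs.",
    "logical_reasoning": "Extract reasoning steps from the passages that lead toward an answer.",
    "cognitive_alignment": "Point out passage content that corrects likely misconceptions.",
}


def retrieve(question: str, corpus: List[str], k: int = 2) -> List[str]:
    """Step 1 (Retrieval): toy word-overlap ranking standing in for a real retriever."""
    q_words = set(question.lower().split())
    ranked = sorted(
        corpus,
        key=lambda passage: len(q_words & set(passage.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def construct_knowledge(question: str, passages: List[str], llm: LLM) -> Dict[str, str]:
    """Step 2 (Knowledge Construction): one model call per agent."""
    context = "\n".join(passages)
    notes: Dict[str, str] = {}
    for name, instruction in AGENT_INSTRUCTIONS.items():
        prompt = f"{instruction}\nQuestion: {question}\nPassages:\n{context}"
        notes[name] = llm(prompt)
    return notes


def cognitive_nexus(question: str, notes: Dict[str, str], llm: LLM) -> str:
    """Step 3 (Cognitive Nexus): combine constructed knowledge with the model's own draft.

    Assumption: the model's "intrinsic cognition" is approximated by a draft
    answer produced without any retrieved context.
    """
    draft = llm(f"Think step by step and answer: {question}")
    merged = "\n".join(f"[{name}] {note}" for name, note in notes.items())
    final_prompt = (
        f"Question: {question}\n"
        f"Initial reasoning: {draft}\n"
        f"Constructed knowledge:\n{merged}\n"
        "Revise the reasoning using the constructed knowledge and give a final answer."
    )
    return llm(final_prompt)


def active_rag(question: str, corpus: List[str], llm: LLM, k: int = 2) -> str:
    """Run retrieval, knowledge construction, and the cognitive nexus in order."""
    passages = retrieve(question, corpus, k)
    notes = construct_knowledge(question, passages, llm)
    return cognitive_nexus(question, notes, llm)
```

Calling `active_rag(question, corpus, llm)` with any `llm` callable issues six model calls in this sketch: four for Knowledge Construction and two in the Cognitive Nexus step. Keeping the agents in a dictionary makes it easy to drop or swap one when comparing construction methods.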

Experimental Insights and Implications

In experiments, ActiveRAG improves question-answering accuracy over baseline models across diverse datasets. These findings support the effectiveness of the method and indicate that it is robust and adaptable when handling information from various sources and domains.

Ablation studies additionally separate the contributions of the Knowledge Construction and Cognitive Nexus mechanisms, reinforcing the value of active learning for LLM performance. Results from integrating different knowledge construction methods also point to avenues for further refining ActiveRAG.

Future Directions and Limitations

While ActiveRAG advances LLMs' ability to use external knowledge actively, it also leaves several directions open for future work. These include making the knowledge retrieval and construction process more efficient and extending LLMs' active engagement to more diverse types of knowledge sources.

ActiveRAG does, however, add computational steps and their associated costs, which may make it harder to scale to widespread applications. Its effectiveness also still depends on the quality and relevance of the retrieved passages.
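To make the cost concern concrete, the following back-of-the-envelope sketch compares model calls and prompt tokens for a single question. Every number and the call layout are assumptions chosen for illustration: one call per Knowledge Construction agent, one draft call, and one final integration call. The names `PipelineCost`, `standard_rag_cost`, and `active_rag_cost` are hypothetical, and the figures are not measurements from the ActiveRAG experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineCost:
    llm_calls: int       # number of model invocations per question
    prompt_tokens: int   # total input tokens sent across those calls


def standard_rag_cost(question_tokens: int, passage_tokens: int, num_passages: int) -> PipelineCost:
    """Single-pass RAG: one call that sees the question and all passages."""
    return PipelineCost(
        llm_calls=1,
        prompt_tokens=question_tokens + passage_tokens * num_passages,
    )


def active_rag_cost(
    question_tokens: int,
    passage_tokens: int,
    num_passages: int,
    num_agents: int = 4,
    note_tokens: int = 100,
) -> PipelineCost:
    """Assumed layout: one call per agent, one draft call, one integration call.

    note_tokens is the assumed length of each intermediate output
    (an agent's note or the draft answer).
    """
    context = question_tokens + passage_tokens * num_passages
    construction = num_agents * context  # every agent reads the full context
    draft = question_tokens              # draft call sees only the question
    integration = question_tokens + (num_agents + 1) * note_tokens
    return PipelineCost(
        llm_calls=num_agents + 2,
        prompt_tokens=construction + draft + integration,
    )
```

Under these assumptions the number of calls grows linearly with the number of agents, and the dominant term is the retrieved context being re-read once per agent. That suggests shorter or fewer passages as the first place to look when reducing cost.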

Conclusion

ActiveRAG is a step forward for Retrieval-Augmented Generation, moving towards a more dynamic and interactive form of knowledge acquisition and use by LLMs. It improves LLMs' understanding and output quality, and it contributes to the broader discussion of integrating active learning mechanisms into AI systems. As the field progresses, active learning paradigms such as ActiveRAG are likely to play an important role in shaping knowledge-intensive computational models.