Enhancing Preference Optimization in LLMs with DPO-Positive

Introduction

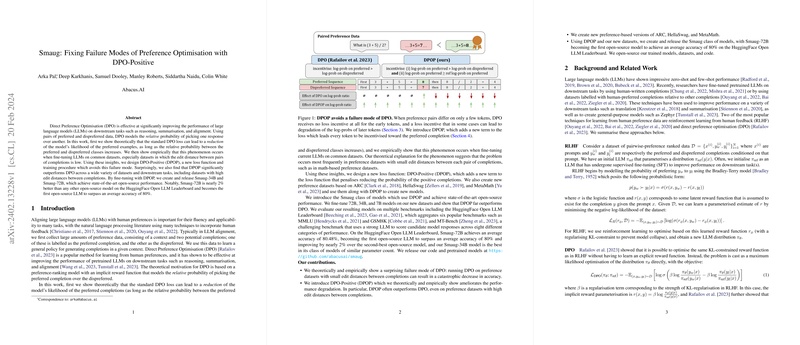

The evolution of large language models (LLMs) has underscored the importance of aligning them with human preferences so that they are fluent and effective across many tasks. Direct Preference Optimization (DPO) has emerged as a key technique for this. It uses pairs of preferred and dispreferred completions to model the relative probability of one response over another. However, the paper identifies a notable limitation of standard DPO: it can reduce the model's likelihood of the preferred examples, particularly on datasets where the two completions in each pair have a small edit distance. To address this, the paper introduces DPO-Positive (DPOP), a new loss function and training procedure designed to avoid this failure mode, and reports significant improvements over DPO across diverse datasets and tasks.
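
To make "small edit distance between pairs of completions" concrete, the sketch below computes a token-level Levenshtein distance between two completions. Splitting on whitespace and using plain Levenshtein distance are assumptions for illustration; the paper's exact measure may differ, and the example pair is hypothetical.

```python
from typing import Sequence


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Token-level Levenshtein distance: the minimum number of single-token
    insertions, deletions, or substitutions that turn `a` into `b`."""
    # prev[j] holds the distance between the first i-1 tokens of a and the first j tokens of b
    prev = list(range(len(b) + 1))
    for i, tok_a in enumerate(a, start=1):
        curr = [i] + [0] * len(b)
        for j, tok_b in enumerate(b, start=1):
            cost = 0 if tok_a == tok_b else 1
            curr[j] = min(
                prev[j] + 1,         # delete tok_a
                curr[j - 1] + 1,     # insert tok_b
                prev[j - 1] + cost,  # substitute tok_a -> tok_b (free if equal)
            )
        prev = curr
    return prev[-1]


# Hypothetical preference pair that differs in only a few tokens.
preferred = "The answer is 42 because 6 times 7 is 42".split()
dispreferred = "The answer is 48 because 6 times 8 is 48".split()
print(edit_distance(preferred, dispreferred))  # 3
```

A pair like this shares almost all of its tokens, which is the low-edit-distance regime the paper singles out.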

Background and Related Work

The development of LLMs has been significantly aided by methods that fine-tune models on human-written or human-preferred completions to improve performance on downstream tasks. Prominent among these are reinforcement learning from human feedback (RLHF) and DPO. DPO in particular has gained traction because it optimizes preferences directly, without learning an explicit reward model: it maximizes the log-likelihood ratio of preferred to dispreferred completions, each measured relative to a frozen reference model.
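
For a prompt x with preferred completion y_w and dispreferred completion y_l, the standard DPO loss is -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]), where π_θ is the model being trained, π_ref the reference model, and β controls how far π_θ may drift from π_ref. The minimal Python sketch below evaluates this for a single pair from scalar sequence log-probabilities; the function and argument names are hypothetical, and β = 0.1 is only an illustrative default.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each *_logp argument is the summed token log-probability of a completion
    given the prompt, under the trained policy or the frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    return -log_sigmoid(beta * (chosen_logratio - rejected_logratio))


# When the policy equals the reference, the margin is 0 and the loss is log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
```

Note that only the difference of the two log-ratios enters the loss; nothing in it requires the preferred log-probability itself to go up.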

Failure Mode of DPO

A closer analysis of DPO reveals a critical oversight: the model's likelihood of preferred examples can decrease, especially when the preferred and dispreferred completions are textually similar. Because the DPO loss depends only on the difference between the two log-likelihood ratios, it can be lowered by making both completions less likely, as long as the dispreferred one falls further. The paper shows, theoretically and empirically, that on datasets where the edit distance between preference pairs is small, standard DPO can lower the likelihood of the preferred completions and thereby degrade model performance.
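
The arithmetic behind this can be checked with invented log-probabilities. The sketch below compares two hypothetical updates: one where the preferred completion becomes more likely, and one where both completions become less likely. DPO assigns the lower loss to the second. This only shows that the loss permits the failure mode; it does not reproduce the paper's edit-distance analysis.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(pc: float, pr: float, rc: float, rr: float, beta: float = 0.1) -> float:
    """DPO loss from policy (pc, pr) and reference (rc, rr) log-probs
    of the chosen and rejected completions."""
    return -log_sigmoid(beta * ((pc - rc) - (pr - rr)))


ref_chosen, ref_rejected = -20.0, -22.0  # hypothetical reference log-probs

# Healthy update: chosen log-prob rises by 2, rejected unchanged.
healthy = dpo_loss(-18.0, -22.0, ref_chosen, ref_rejected)

# Failure-mode update: chosen log-prob FALLS by 3, rejected falls by 8.
failure = dpo_loss(-23.0, -30.0, ref_chosen, ref_rejected)

print(f"healthy: {healthy:.3f}  failure: {failure:.3f}  init: {math.log(2):.3f}")
print(failure < healthy)  # True: DPO prefers the update that lowered the chosen likelihood
```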

Introducing DPO-Positive (DPOP)

To counteract this failure mode, DPOP adds a penalty term to the loss that discourages the model's likelihood of preferred completions from falling below that of the reference model. This keeps the model anchored to the preferred data, and DPOP remains effective across a broad range of datasets, including those where the completions in each pair differ substantially. Empirically, DPOP outperforms DPO, and it was used to train the Smaug class of models, which achieve state-of-the-art results among open-source models.
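
A minimal sketch of such a penalty, assuming it takes the form λ·max(0, log π_ref(y_w|x) - log π_θ(y_w|x)) and is subtracted from the DPO margin inside the sigmoid. This form is our reading of the DPOP paper, not something stated in this summary; the name `lam` and the value 50 are illustrative assumptions. The example reuses the hypothetical numbers from the failure-mode scenario.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpop_loss(policy_chosen_logp: float, policy_rejected_logp: float,
              ref_chosen_logp: float, ref_rejected_logp: float,
              beta: float = 0.1, lam: float = 50.0) -> float:
    """DPO margin minus an (assumed) penalty on the chosen completion
    becoming less likely under the policy than under the reference."""
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # Zero whenever the policy assigns the chosen completion at least the
    # reference log-prob; grows linearly as the chosen log-prob drops below it.
    penalty = max(0.0, ref_chosen_logp - policy_chosen_logp)
    return -log_sigmoid(beta * (chosen_logratio - rejected_logratio - lam * penalty))


ref_chosen, ref_rejected = -20.0, -22.0  # hypothetical reference log-probs
healthy = dpop_loss(-18.0, -22.0, ref_chosen, ref_rejected)  # penalty = 0, same as DPO
failure = dpop_loss(-23.0, -30.0, ref_chosen, ref_rejected)  # penalty = 3
print(failure > healthy)  # True: the update that lowered the chosen likelihood is now penalized
```

With lam = 0 the function reduces to the plain DPO loss, so the penalty only changes training when the preferred likelihood actually falls.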

Contribution and Results

The paper makes three contributions: a theoretical and empirical analysis of a failure mode of DPO, the formulation of DPOP as a more robust alternative, and the Smaug class of models, which push the boundaries of open-source LLM performance. In particular, Smaug-72B became the first open-source model to exceed an average accuracy of 80% on the HuggingFace Open LLM Leaderboard.

Conclusion and Future Directions

While DPOP is a significant step toward better preference optimization in LLMs, the work acknowledges limitations in the scale of the datasets tested and in their focus on English. It opens the way for further research on preference-based fine-tuning, in particular applying DPOP to a more diverse range of datasets, including non-English ones. Beyond contributing to more accurate and better-aligned LLMs, the findings highlight the importance of continually evaluating and adapting existing methods as new challenges emerge.

Limitations and Impact

Such techniques and models could be misused to generate harmful content, and this risk must be acknowledged. However, the work's focus on mathematical and reasoning tasks, together with its contribution to a deeper understanding of preference optimization, suggests a predominantly positive societal impact. The Smaug models, a significant contribution to the AI research community, were released after weighing their performance relative to existing models, with safety and responsible use as priorities.

This work illustrates how identifying a weakness in an established method can become the basis for innovation, moving the field toward LLMs that are not only powerful but also closely aligned with human values and preferences.