Summary of the Direct Preference Optimization Algorithm

Introduction to LLM Tuning Challenges

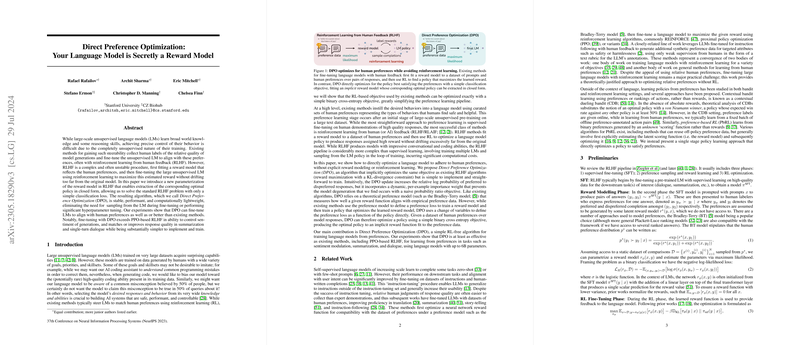

Large-scale unsupervised language models, trained on massive datasets, exhibit impressive capabilities. However, precisely controlling their behavior remains difficult because the human-generated data they learn from reflects many different intentions. As a result, these models frequently adopt undesirable traits, and current methods for fine-tuning them to reflect human preferences rely on reinforcement learning (RL) algorithms that are complex, computationally expensive, and often unstable. Recognizing the need for safer and more controllable AI systems, researchers have examined whether explicit reward modeling and reinforcement learning can be bypassed altogether.

Direct Preference Optimization Approach

The paper introduces Direct Preference Optimization (DPO), a stable, performant, and computationally efficient algorithm for fine-tuning language models (LMs) to human preferences without explicit reward modeling or reinforcement learning. DPO optimizes the policy directly on preference data using a simple classification objective. It increases the log probability of preferred responses relative to dispreferred ones, with a dynamic per-example weight that scales each update by how strongly the current model misranks the pair. DPO's ability to align with human preferences is shown to be comparable or superior to established methods such as RL with proximal policy optimization (PPO) on tasks including sentiment modulation, summarization, and single-turn dialogue.
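
To make the classification objective concrete, here is a minimal sketch of the per-example DPO loss in plain Python. It assumes the summed log probabilities of each response under the trained policy and under a frozen reference model have already been computed. The function and argument names are illustrative, not taken from the paper's code, and the default `beta` is only an example value.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(policy_logp_chosen: float,
             policy_logp_rejected: float,
             ref_logp_chosen: float,
             ref_logp_rejected: float,
             beta: float = 0.1):
    """Return (loss, weight) for a single preference pair.

    Each *_logp_* argument is the log probability of a whole response
    (hypothetical inputs; a real pipeline would compute these from model
    logits). beta controls how far the policy may drift from the reference.
    """
    # Implicit rewards: scaled log-ratio of policy to reference.
    chosen_reward = beta * (policy_logp_chosen - ref_logp_chosen)
    rejected_reward = beta * (policy_logp_rejected - ref_logp_rejected)
    margin = chosen_reward - rejected_reward

    # Binary classification loss: -log sigmoid(margin).
    loss = -log_sigmoid(margin)

    # Dynamic per-example weight on the gradient: sigmoid(-margin).
    # It is large when the implicit reward ranks the pair incorrectly.
    weight = math.exp(log_sigmoid(-margin))
    return loss, weight


if __name__ == "__main__":
    print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

When the policy equals the reference model, the margin is zero, so the loss is log 2 and the weight is 0.5. As the policy assigns relatively more probability to the preferred response, both values shrink.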

Theoretical Underpinnings and Advantages

DPO builds on theoretical preference models, such as the Bradley-Terry model, that measure how well a given reward function aligns with empirical preference data. By expressing the reward in terms of the policy itself, DPO avoids the instabilities associated with the actor-critic algorithms commonly used in RL-based fine-tuning. It also addresses the under-specification issue, in which multiple reward functions induce the same preference distribution, without restricting the class of reward models that can be represented. Furthermore, DPO's simplicity reduces the hyperparameter tuning that RL-based methods typically require.
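
The point that several reward functions can induce the same preference distribution can be checked numerically. The sketch below assumes the standard Bradley-Terry form, in which the probability of preferring one response over another depends only on the difference of their rewards. The reward values and the shift are made up for illustration.

```python
import math


def bradley_terry_prob(reward_a: float, reward_b: float) -> float:
    """P(a preferred over b) = exp(r_a) / (exp(r_a) + exp(r_b)),
    which simplifies to sigmoid(r_a - r_b)."""
    return 1.0 / (1.0 + math.exp(-(reward_a - reward_b)))


# Two responses to the same prompt, scored by a hypothetical reward function.
base = bradley_terry_prob(1.5, 0.5)

# Adding the same prompt-dependent constant to both rewards gives a
# different reward function but the same preference probability.
shifted = bradley_terry_prob(1.5 + 7.0, 0.5 + 7.0)

if __name__ == "__main__":
    print(base, shifted)
```

Because only reward differences matter, preference data alone cannot pin down a unique reward function, which is the ambiguity described above.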

Empirical Validation and Future Potential

Experiments show that DPO is effective compared with alternative methods such as PPO and unlikelihood training, offering similar or better alignment with human preferences. These results were demonstrated on tasks such as summarization and dialogue, where DPO-tuned models matched or outperformed the baselines. DPO could potentially be applied to models larger than the 6 billion parameters tested, and its efficacy opens avenues for applications beyond fine-tuning LMs, such as training generative models in other modalities. While the results are promising, further research is needed to assess the robustness of DPO, including how its policies perform out of distribution and how reward over-optimization may manifest. Additionally, the reliability of automated evaluators such as GPT-4 could be a focus for continued investigation.