Understanding the Role of Instruction Diversity in LLM Generalization to Unseen Tasks

Introduction

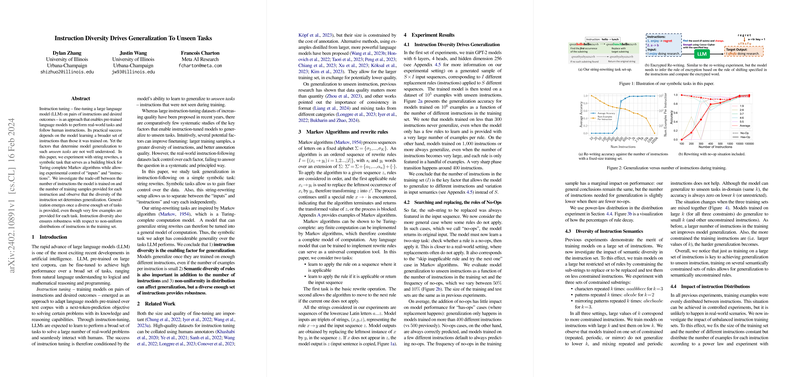

The proliferation of large language models (LLMs) has driven rapid progress in artificial intelligence, and instruction tuning, in which a pre-trained model is fine-tuned on instruction-following data, has become a cornerstone for applying LLMs to a wide range of real-world tasks. Instruction tuning aims to teach models to interpret and follow human instructions, including instructions that fall outside their original training data. However, the factors that determine whether these models generalize to unseen tasks, particularly when only a few examples are provided per task, remain underexplored.

This paper examines how instruction diversity affects the generalization of LLMs to tasks outside their training set, through a series of experiments centered on a symbolic task: string rewrites. This setup is inspired by Markov algorithms, which are Turing-complete, and it allows fine-grained control over the "inputs" and the "instructions", keeping the two separate so that the effect of each can be analyzed on its own.
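To make the task concrete, here is a minimal sketch of a single string rewrite, assuming a rule is a (pattern, replacement) pair and that only the leftmost occurrence is replaced, as in one step of a Markov algorithm. The function names and the `instruction`/`input`/`output` record layout are illustrative assumptions, not the paper's actual data format.

```python
def apply_rewrite(rule, text):
    """Apply one rewrite rule to text.

    rule is a (pattern, replacement) pair. Only the leftmost occurrence
    of pattern is replaced; text is returned unchanged if pattern is absent.
    """
    pattern, replacement = rule
    return text.replace(pattern, replacement, 1)


def format_example(rule, text):
    """Package a rule and an input as a training record (hypothetical layout).

    The rule plays the role of the "instruction" and the string it is
    applied to plays the role of the "input", so the two can be varied
    independently.
    """
    pattern, replacement = rule
    return {
        "instruction": f"{pattern} -> {replacement}",
        "input": text,
        "output": apply_rewrite(rule, text),
    }
```

Because the instruction and the input are separate fields, one can hold the inputs fixed while varying the rules, or the reverse.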

Experiment Setup

The research covers several comparative analyses: the trade-off between the number of instructions and the number of examples per instruction, the effect of including "no-op" cases, and the influence of a skewed distribution of examples in the training data. At the heart of the experiments is a GPT-2 model trained on generated string rewrite rules, used to determine how much instruction diversity is required before meaningful generalization appears.
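The trade-off between the number of instructions and the number of examples per instruction can be sketched as splitting a fixed example budget across a varying number of rules. The sketch below is an illustration under stated assumptions, not the paper's generator: the alphabet, the rule length, the input length, and the reading of "no-op" as an example whose input does not contain the pattern (so the output equals the input) are all assumptions.

```python
import random
import string

ALPHABET = string.ascii_lowercase[:8]  # assumption: small illustrative alphabet


def random_string(rng, length):
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def random_rule(rng, length=3):
    """Draw a (pattern, replacement) pair with pattern != replacement."""
    pattern = random_string(rng, length)
    replacement = random_string(rng, length)
    while replacement == pattern:
        replacement = random_string(rng, length)
    return pattern, replacement


def make_example(rng, rule, input_len, noop):
    pattern, replacement = rule
    text = random_string(rng, input_len)
    while pattern in text:  # start from a string the rule does not match
        text = random_string(rng, input_len)
    if not noop:
        pos = rng.randrange(len(text) + 1)
        text = text[:pos] + pattern + text[pos:]  # plant an occurrence
    return {
        "instruction": f"{pattern} -> {replacement}",
        "input": text,
        "output": text.replace(pattern, replacement, 1),  # leftmost only
        "noop": noop,
    }


def build_dataset(budget, num_rules, noop_fraction=0.0, input_len=12, seed=0):
    """Split a fixed budget of examples evenly across num_rules distinct rules."""
    rng = random.Random(seed)
    rules = set()
    while len(rules) < num_rules:
        rules.add(random_rule(rng))
    per_rule = budget // num_rules
    num_noop = int(per_rule * noop_fraction)
    dataset = []
    for rule in sorted(rules):
        for i in range(per_rule):
            dataset.append(make_example(rng, rule, input_len, noop=i < num_noop))
    return dataset
```

With the same budget, `build_dataset(10_000, 1_000)` yields many rules with 10 examples each, while `build_dataset(10_000, 10)` yields few rules with 1,000 examples each; comparing models trained on the two is one way to probe the trade-off described above.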

The key findings point to instruction diversity as the decisive factor. Generalization to unseen tasks emerges only once instruction diversity passes a critical threshold; beyond that threshold, the model is also robust to non-uniform training set distributions. In particular, the experiments show that:

- Instruction diversity enables generalization even when only a limited number of examples is available per instruction.

- Semantic diversity within the instruction set further improves the model's generalization.

- Models are robust to unbalanced training set distributions when the instruction set is sufficiently diverse.
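One way to construct the kind of unbalanced training set mentioned in the last point is to allocate examples to instructions by rank, so that a few instructions receive most of the examples. The sketch below assumes a power-law (Zipf-like) allocation; the summary does not specify which skewed distributions were used, so the function and its `exponent` parameter are hypothetical.

```python
def skewed_counts(num_rules, total_examples, exponent=1.0):
    """Allocate total_examples across rules with weight 1 / rank**exponent.

    exponent=0 gives a uniform split; larger exponents concentrate more
    examples on the first few rules. Counts are returned in rank order.
    """
    weights = [1.0 / (rank ** exponent) for rank in range(1, num_rules + 1)]
    norm = sum(weights)
    counts = [int(total_examples * w / norm) for w in weights]
    # Rounding down leaves a small remainder; give it to the top-ranked rules.
    leftover = total_examples - sum(counts)
    for i in range(leftover):
        counts[i % num_rules] += 1
    return counts
```

Training on allocations with different exponents while keeping the number of rules fixed would isolate the effect of skew from the effect of diversity.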

Theoretical and Practical Implications

This research adds to the theoretical understanding of what enables LLMs to perform tasks outside their training scope. The finding that instruction diversity is a critical enabler of generalization suggests that current approaches to instruction tuning should be revisited and possibly redefined.

From a practical standpoint, the findings bear directly on how LLMs are deployed in real-world applications. By identifying the conditions under which LLMs adapt to new instructions, the research points towards more effective and efficient use of pre-trained models across diverse tasks, with less dependence on extensive, task-specific training data.

Future Directions

While this paper lays the groundwork, it also outlines directions for future research. Future work could extend these findings by investigating instruction diversity in more complex, real-world tasks and datasets. In addition, theoretical models that predict generalization performance from instruction diversity and training set characteristics would improve both our understanding of adaptable LLMs and our ability to engineer them.

In summary, this paper clarifies the relationship between instruction tuning and model generalization, and argues for a strategic emphasis on instruction diversity. Its experiments and analysis open new avenues for the development and application of LLMs, with the potential to make them more useful across a broader range of computational problems.
