Introduction to Multilingual Instruction Tuning

As large language models (LLMs) are adopted worldwide, improving their multilingual capabilities has become a priority. Instruction tuning, which fine-tunes a model on instructions paired with responses, is meant to make the model follow instructions more reliably. However, most instruction-tuning data has been in English. Because LLMs serve users who speak many languages, multilingual instruction tuning (fine-tuning on instruction data in several languages) is increasingly important.

Cross-Lingual Transfer and Its Implications

Prior research has suggested that models fine-tuned in one language can acquire some capabilities in other languages, a phenomenon known as cross-lingual transfer. The paper under discussion examines this process specifically for instruction tuning of multilingual LLMs. A key finding is that tuning on a single language can give the model instruction-following abilities that extend beyond that language. English, Italian, and Spanish were found to exhibit robust multilingual transfer.

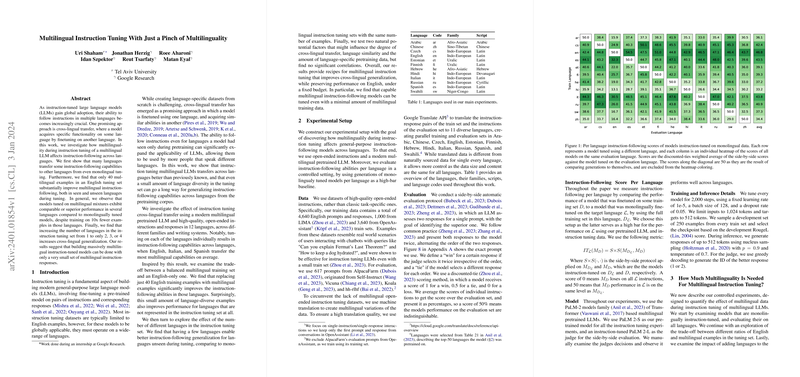

The Surprising Effectiveness of Limited Multilinguality

The paper reports a striking result: adding a small number of multilingual examples to an otherwise English instruction-tuning set substantially improves the model's instruction following across languages. The gains are not limited to the languages included in the tuning set; they also extend to languages the model encountered only during pretraining. Notably, as few as 40 multilingual examples are enough to produce this improvement, showing how much even a modest amount of language diversity during instruction tuning can help.
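
To make this data recipe concrete, the sketch below builds a mostly English tuning set in which a small budget of examples is swapped for examples in other languages. It is a minimal illustration under stated assumptions, not the paper's code: the function name `build_mixture`, the (instruction, response) tuple format, the choice to replace English examples rather than append new ones, and the round-robin split of the budget across languages are all hypothetical design choices.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical record format: (instruction, response).
Example = Tuple[str, str]


def build_mixture(
    english: List[Example],
    multilingual: Dict[str, List[Example]],
    num_multilingual: int,
    seed: int = 0,
) -> List[Tuple[str, Example]]:
    """Swap `num_multilingual` English examples for non-English ones.

    Assumption: the budget is split round-robin across languages and the
    total size stays equal to len(english). Returns (language, example) pairs.
    """
    langs = sorted(multilingual)
    if num_multilingual > len(english):
        raise ValueError("budget exceeds the English set size")
    if num_multilingual > 0 and not langs:
        raise ValueError("no multilingual pools provided")

    # Round-robin quota: a budget of 40 over 4 languages gives 10 each.
    quotas = {lang: 0 for lang in langs}
    for i in range(num_multilingual):
        quotas[langs[i % len(langs)]] += 1

    rng = random.Random(seed)
    mixture: List[Tuple[str, Example]] = []
    for lang in langs:
        pool = multilingual[lang]
        if quotas[lang] > len(pool):
            raise ValueError(f"not enough {lang} examples")
        # Sample this language's quota without replacement.
        mixture += [(lang, ex) for ex in rng.sample(pool, quotas[lang])]

    # Keep a random subset of English examples so the total size is unchanged.
    kept = rng.sample(english, len(english) - num_multilingual)
    mixture += [("en", ex) for ex in kept]
    rng.shuffle(mixture)
    return mixture


if __name__ == "__main__":
    # Placeholder strings only; these are not real training examples.
    english = [(f"en instruction {i}", f"en response {i}") for i in range(1000)]
    multilingual = {
        lang: [(f"{lang} instruction {i}", f"{lang} response {i}") for i in range(50)]
        for lang in ["es", "it", "de", "ja"]
    }
    mix = build_mixture(english, multilingual, num_multilingual=40)
    counts: Dict[str, int] = {}
    for lang, _ in mix:
        counts[lang] = counts.get(lang, 0) + 1
    print(len(mix), dict(sorted(counts.items())))
    # → 1000 {'de': 10, 'en': 960, 'es': 10, 'it': 10, 'ja': 10}
```

With 1,000 placeholder English examples and a budget of 40 split over four languages, the mixture keeps 960 English examples and 10 from each other language. Replacing rather than appending keeps the total size fixed, so a change in multilingual behavior is not confounded with simply training on more examples; whether the paper used exactly this scheme is an assumption here.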

Toward a Massively Multilingual Future

These findings have important implications for building LLMs that serve a global audience. In particular, making an instruction-tuned model multilingual does not require extensive multilingual training data. Instead, a model can be fine-tuned with a small set of instructions in several languages and still follow instructions in many languages it was not explicitly tuned on. The paper also examines how the number of languages in the tuning set and the fraction of language-specific data in pretraining relate to cross-lingual transfer, but it does not find strong correlations with transfer effectiveness.

In summary, the paper argues that cross-lingual transfer through instruction tuning offers an efficient path to capable multilingual LLMs. Its findings could change how models are fine-tuned: a small but diverse multilingual dataset may be enough to serve a wide range of languages, making advanced AI technologies more accessible to users around the world.