The paper "RS-DPO: A Hybrid Rejection Sampling and Direct Preference Optimization Method for Alignment of LLMs" addresses the problem of aligning large language models (LLMs) with user intent through reinforcement learning from human feedback (RLHF). Proximal Policy Optimization (PPO) is commonly used for RLHF, but it is unstable, requires extensive hyperparameter tuning, and is computationally expensive during the alignment phase.

To address these obstacles, Direct Preference Optimization (DPO) has been proposed as an alternative. However, DPO has its own limitation: it relies on contrastive responses written by human annotators or generated by other LLMs, rather than sampled from the policy model itself, which reduces its effectiveness.
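DPO trains directly on (chosen, rejected) response pairs instead of fitting a separate reward model and running PPO. The sketch below shows the standard DPO loss for a single preference pair. It assumes that the summed log-probability of each full response has already been computed under the policy being trained and under a frozen reference model (typically the SFT model); the default `beta=0.1` and the function names are illustrative assumptions, not taken from the paper.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z)) = log(1 + exp(-z))."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    # For negative z, rewrite to avoid overflow in exp(-z).
    return -z + math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,  # illustrative value, not from the paper
) -> float:
    """DPO loss for one preference pair.

    Each argument is log pi(y | x): the summed token log-probability of a
    complete response y given prompt x, under the policy or reference model.
    """
    # How much more the policy favors each response than the reference does.
    chosen_logratio = policy_chosen - ref_chosen
    rejected_logratio = policy_rejected - ref_rejected
    # The loss is low when the policy raises the chosen response's relative
    # likelihood more than the rejected one's.
    margin = beta * (chosen_logratio - rejected_logratio)
    return neg_log_sigmoid(margin)
```

When the policy and reference assign identical log-probabilities, the margin is zero and the loss equals log 2 (about 0.693); increasing the policy's log-probability of the chosen response lowers the loss below that value.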

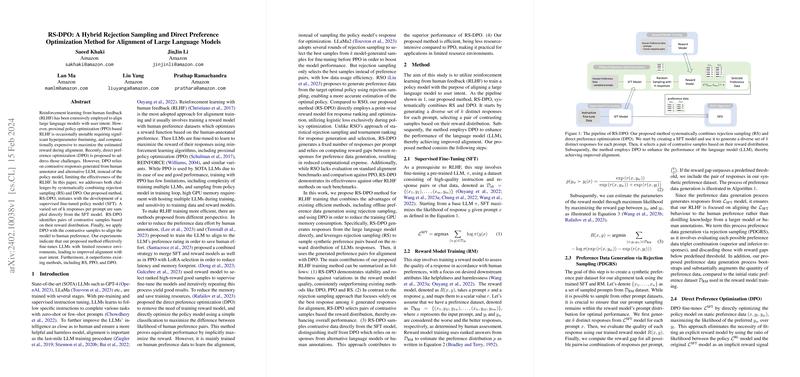

The authors propose RS-DPO, a hybrid approach that combines Rejection Sampling (RS) with DPO to leverage the strengths of both methods. The process begins with training a supervised fine-tuned (SFT) policy model. For each prompt, a diverse set of responses is sampled from this SFT model. Rejection sampling then selects pairs of contrastive responses based on their reward distribution. Finally, DPO is applied to the selected pairs to align the LLM with human preferences.
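The pair-selection step can be sketched as follows. This is a minimal illustration under stated assumptions: the rewards for the sampled responses are given as plain floats (in practice they would come from a reward model), every pair of responses to the same prompt is considered, and a pair is kept only when the reward gap exceeds a hypothetical `threshold`, with the higher-reward response labeled as chosen. The paper's exact selection criterion may normalize or scale the gap differently.

```python
from itertools import combinations
from typing import List, Tuple

# Hypothetical record type: (prompt, chosen_response, rejected_response).
PreferencePair = Tuple[str, str, str]


def select_contrastive_pairs(
    prompt: str,
    responses: List[str],
    rewards: List[float],
    threshold: float,  # hypothetical minimum reward gap
) -> List[PreferencePair]:
    """Build preference pairs from responses sampled for one prompt.

    Assumption: a pair is kept when the absolute reward gap exceeds
    `threshold`; the higher-reward response becomes the chosen one.
    """
    if len(responses) != len(rewards):
        raise ValueError("responses and rewards must have the same length")
    pairs: List[PreferencePair] = []
    # Consider every unordered pair of sampled responses.
    for i, j in combinations(range(len(responses)), 2):
        gap = rewards[i] - rewards[j]
        if abs(gap) > threshold:
            chosen, rejected = (i, j) if gap > 0 else (j, i)
            pairs.append((prompt, responses[chosen], responses[rejected]))
    return pairs


pairs = select_contrastive_pairs("p", ["a", "b", "c"], [0.9, 0.2, 0.8], threshold=0.5)
print(pairs)  # [('p', 'a', 'b'), ('p', 'c', 'b')]
```

Because all responses come from the SFT policy itself, the resulting pairs are on-policy, which is the property the text contrasts with DPO on human- or externally generated data. The threshold discards near-ties such as "a" versus "c" above, whose rewards differ too little to give a clear preference signal.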

The paper's experiments show that RS-DPO effectively fine-tunes LLMs even in resource-constrained environments and outperforms existing methods, including RS, PPO, and DPO. The hybrid method thus offers a more stable and computationally efficient approach to aligning LLMs, improving adherence to user intent at a lower resource cost.