Aya Dataset: An Open-Access Collection for Multilingual Instruction Tuning

(arXiv:2402.06619)

Abstract

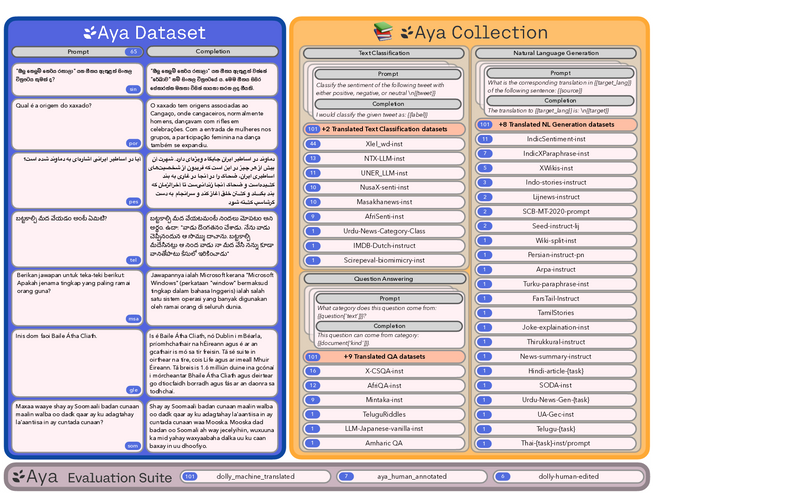

Datasets are foundational to many breakthroughs in modern artificial intelligence. Many recent achievements in NLP can be attributed to the fine-tuning of pre-trained models on a diverse set of tasks, which enables an LLM to respond to instructions. Instruction fine-tuning (IFT) requires specifically constructed and annotated datasets. However, existing datasets are almost all in the English language. In this work, our primary goal is to bridge the language gap by building a human-curated instruction-following dataset spanning 65 languages. We worked with fluent speakers of languages from around the world to collect natural instances of instructions and completions. Furthermore, we create the most extensive multilingual collection to date, comprising 513 million instances through templating and translating existing datasets across 114 languages. In total, we contribute four key resources: we develop and open-source the Aya Annotation Platform, the Aya Dataset, the Aya Collection, and the Aya Evaluation Suite. The Aya initiative also serves as a valuable case study in participatory research, involving collaborators from 119 countries. We see this as a valuable framework for future research collaborations that aim to bridge gaps in resources.

Overview

- The Aya Initiative introduces an open-access collection for multilingual instruction tuning, aiming to democratize language technologies by including underrepresented and low-resource languages.

- Developed through a participatory research methodology, the Aya Dataset encompasses 204,114 high-quality annotations in 65 languages, while the Aya Collection features 513 million instances across 114 languages.

- The initiative ensures balanced representation across languages and emphasizes the importance of high-quality, human-annotated data and the inclusion of cultural and contextual nuances.

- The Aya Evaluation Suite is designed to assess the abilities of large language models (LLMs) across diverse linguistic contexts, advancing multilingual natural language understanding and inclusivity.

Bridging Linguistic Gaps: The Aya Initiative for Multilingual Instruction Tuning

Introduction to the Aya Initiative

The democratization of language technologies requires concerted efforts to include underrepresented and low-resource languages. The Aya Initiative addresses this challenge by curating a substantial, human-annotated, open-access collection aimed explicitly at multilingual instruction fine-tuning (IFT). The initiative introduces the Aya Dataset, Collection, and Evaluation Suite, developed through a participatory research methodology involving fluent speakers across 119 countries. The Aya Dataset encompasses 204,114 high-quality annotations spanning 65 languages, while the Aya Collection extends further with 513 million instances across 114 languages, making it the most comprehensive multilingual collection for IFT to date. The collection includes human-curated data and also draws on templated and translated versions of existing datasets, significantly enhancing the linguistic diversity available for training and evaluating LLMs.
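To make the idea of "templating" an existing dataset concrete, the following is a minimal sketch of how a structured record might be turned into an instruction-style prompt and completion. The template wording, the field names (`context`, `question`, `answer`, `inputs`, `targets`, `language`), and the example record are all illustrative assumptions, not the templates or schema used by the Aya project.

```python
from string import Template

# Hypothetical templates for a question-answering source dataset.
# The wording and placeholder names are assumptions made for illustration.
PROMPT_TEMPLATE = Template(
    "Answer the question using the passage.\n\n"
    "Passage: $context\n"
    "Question: $question"
)
COMPLETION_TEMPLATE = Template("$answer")


def apply_template(record: dict, language: str) -> dict:
    """Turn one structured record into a prompt/completion instance."""
    return {
        "inputs": PROMPT_TEMPLATE.substitute(record),
        "targets": COMPLETION_TEMPLATE.substitute(record),
        "language": language,
    }


# Toy record, invented for this example.
record = {
    "context": "Nairobi is the capital of Kenya.",
    "question": "What is the capital of Kenya?",
    "answer": "Nairobi",
}
instance = apply_template(record, "eng")
print(instance["inputs"])
print(instance["targets"])
```

In a sketch like this, each source dataset would need templates written in its own language by someone fluent in it, which is one reason templating and translation are treated as distinct ways of extending coverage.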

Dataset Composition and Development

The construction of the Aya Dataset and Collection was guided by three key principles: inclusion of low-resource languages, high-quality human annotations, and the fostering of a global community of contributors. The dataset includes original annotations, re-annotations, and translations across various languages, with an emphasis on ensuring comprehensive representation. This approach addresses the scarcity of data for many languages that have so far had limited visibility in NLP research.

Analysis and Implications

Upon analysis, the Aya Dataset and Collection show a balanced representation across high-, mid-, and low-resource languages, which is crucial for reducing biases in LLMs and improving their performance across a wide linguistic spectrum. Notably, the analysis reveals a positive correlation between the level of detail in prompts and completions and the perceived quality of annotations, underscoring the importance of rich data in instruction tuning. Additionally, the initiative highlights a critical need to address the skewed distribution of contributions and to ensure that the data for each language captures a wide array of cultural and contextual nuances.
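One simple way to inspect how balanced such a dataset is would be to compute the share of annotations that falls into each resource tier. The sketch below assumes each annotation carries a resource-tier label. The labels and counts are toy values invented for illustration, not statistics from the Aya Dataset.

```python
from collections import Counter

# Toy resource-tier labels, one per annotation (NOT real Aya statistics).
annotation_tiers = ["high", "high", "mid", "low"]


def share_by_group(labels: list[str]) -> dict[str, float]:
    """Return the fraction of items that falls into each group."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


shares = share_by_group(annotation_tiers)
print(shares)
```

The same function could be applied to contributor identifiers instead of tier labels to see how concentrated contributions are among a small number of annotators.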

Evaluation Suite and Future Directions

The Aya Evaluation Suite introduces a novel evaluation set for assessing LLMs' abilities to understand and generate language across diverse linguistic contexts, a capability that is crucial for advancing multilingual natural language understanding. It underscores the importance of contextually and culturally relevant prompts for evaluating model performance, a step forward in creating truly global and inclusive language technologies.

Conclusion

The Aya Initiative represents a significant stride towards bridging the linguistic gaps in NLP research and development. By leveraging a participatory research framework and focusing on underrepresented languages, the Aya Dataset, Collection, and Evaluation Suite mark an important advance in the pursuit of equitable and inclusive language technology. As the project continues to evolve, it is expected to further stimulate the conversation around the representation of low-resource languages in AI, encouraging more inclusive research practices and technological advancements tailored to the world's linguistic diversity.