Introduction

Multi-agent interactions among LLMs have substantially improved performance on reasoning tasks. Yet these gains are typically offset by high computational costs, because they require long generations from multiple model instances interacting over several rounds. A further problem is that multi-agent frameworks do not consolidate their reasoning skills into a single standalone model, so no final, efficient model is available for inference.

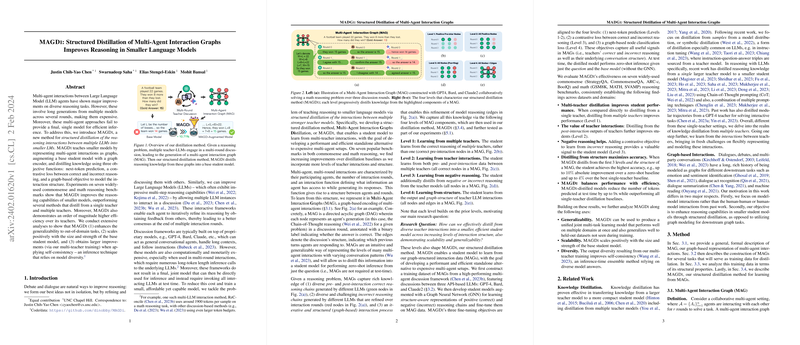

Multi-Agent Distillation

To address these challenges, this paper introduces Multi-Agent Interaction Graphs Distillation (MAGDi), an approach for the structured distillation of reasoning interactions among multiple LLMs into smaller LLMs. MAGDi represents the multi-agent interactions as graphs, augments the student model with a graph encoder, and distills knowledge using three objective functions: next-token prediction, a contrastive loss between correct and incorrect reasoning, and a graph-based objective that models the interaction structure.
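The three objectives can be made concrete with a small sketch. The Python below is illustrative only and rests on assumptions not stated above: the graph layout (one node per agent response per round, with every round-r response feeding every round-(r+1) response), a single mean-aggregation step standing in for the graph encoder, a margin-ranking form for the contrastive term, a node-classification form for the graph term, and the weights `alpha` and `beta`. All names (`InteractionGraph`, `mean_aggregate`, `combined_loss`, and so on) are hypothetical, and scalar probabilities stand in for real model outputs.

```python
import math
from dataclasses import dataclass, field

@dataclass
class InteractionGraph:
    """Hypothetical interaction graph: one node per (round, agent) response."""
    num_agents: int
    num_rounds: int
    correct: list  # correct[r][a] is True if agent a answered correctly in round r
    edges: list = field(default_factory=list, init=False)

    def __post_init__(self):
        # Assumption: each agent sees every response from the previous round.
        for r in range(self.num_rounds - 1):
            for src in range(self.num_agents):
                for dst in range(self.num_agents):
                    self.edges.append(((r, src), (r + 1, dst)))

def mean_aggregate(features, edges):
    """One message-passing step (stand-in graph encoder): average each node's
    feature with the features of its incoming neighbors."""
    messages = {node: [value] for node, value in features.items()}
    for src, dst in edges:
        messages[dst].append(features[src])
    return {node: sum(vals) / len(vals) for node, vals in messages.items()}

def next_token_loss(token_probs):
    """Mean negative log-likelihood of the reference reasoning tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

def margin_ranking_loss(logp_correct, logp_incorrect, margin=1.0):
    """Contrastive term (assumed margin-ranking form): zero once correct
    reasoning scores at least `margin` above incorrect reasoning."""
    return max(0.0, margin - (logp_correct - logp_incorrect))

def node_classification_loss(pred_probs, labels):
    """Graph term (assumed): binary cross-entropy for predicting whether
    each node's answer is correct."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for p, y in zip(pred_probs, labels):
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(labels)

def combined_loss(ntp, contrastive, graph, alpha=1.0, beta=1.0):
    """Weighted sum of the three objectives; alpha and beta are hypothetical."""
    return ntp + alpha * contrastive + beta * graph

# Toy usage: 3 agents interacting for 3 rounds.
g = InteractionGraph(3, 3, [[True, False, True],
                            [True, True, False],
                            [True, True, True]])
print(len(g.edges))  # 18
feats = {(r, a): float(r) for r in range(3) for a in range(3)}
print(mean_aggregate(feats, g.edges)[(1, 0)])  # 0.25
labels = [int(c) for row in g.correct for c in row]
loss = combined_loss(
    next_token_loss([0.9, 0.8, 0.95]),
    margin_ranking_loss(-2.0, -2.5),
    node_classification_loss([0.8] * len(labels), labels),
    alpha=0.5, beta=0.5,
)
print(round(loss, 3))
```

In an actual student model, the token probabilities would come from the language model and the node predictions from a learned graph encoder over response representations, not from scalar features as in this toy.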

Experimentation and Results

MAGDi has been evaluated on seven prominent reasoning benchmarks. The results show that it substantially improves the reasoning abilities of smaller models while being an order of magnitude more efficient than the multi-agent teacher setups. For instance, MAGDi-distilled models generate up to 9x fewer tokens at inference time while outperforming all single-teacher distillation baselines.
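A back-of-the-envelope count shows how savings of this size can arise. The sketch below assumes, purely for illustration, that every agent writes one response per round and that all responses, including the student's single answer, have the same length; the agent count, round count, and token length are hypothetical values, not figures from the evaluation. Under those assumptions the reduction factor is simply agents × rounds.

```python
def multi_agent_tokens(num_agents, num_rounds, tokens_per_response):
    """Tokens generated when every agent answers once per round."""
    return num_agents * num_rounds * tokens_per_response

def reduction_factor(num_agents, num_rounds, teacher_len, student_len):
    """Ratio of multi-agent tokens to the tokens of one student answer."""
    return multi_agent_tokens(num_agents, num_rounds, teacher_len) / student_len

# Hypothetical setup: 3 agents, 3 rounds, 200-token responses from everyone.
print(reduction_factor(3, 3, 200, 200))  # 9.0
```

Real multi-agent runs may stop early once agents agree, and response lengths vary, so measured savings will differ from this idealized ratio.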

Scalability and Generalizability

Further experiments show that MAGDi's benefits extend to generalization across domains and scale across model sizes. When MAGDi is used to train a single universal multi-task model, that model performs competitively on several tasks simultaneously and remains competent on out-of-domain tasks. Moreover, the method's gains grow with the size and strength of the underlying student model, suggesting it will stay applicable as foundation models improve.

Diversity and Inference Techniques

MAGDi also appears to enhance output diversity, as shown by its compatibility with self-consistency, an inference technique that samples several reasoning paths and depends on their variety. Student models trained with MAGDi achieve notable performance gains when combined with self-consistency, suggesting that structured distillation may give models a more diverse range of responses.
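Self-consistency samples several reasoning paths for the same question and returns the most frequent final answer, so it only helps when the model's samples actually differ. The minimal sketch below shows the voting step; `sample_answer` is a hypothetical callable standing in for one stochastic decode of the student model, and breaking ties in favor of the first answer seen is a choice made here for determinism.

```python
import random
from collections import Counter

def self_consistency(sample_answer, num_samples, rng):
    """Majority vote over the final answers of `num_samples` sampled paths."""
    answers = [sample_answer(rng) for _ in range(num_samples)]
    # Counter.most_common orders equal counts by first occurrence.
    return Counter(answers).most_common(1)[0][0]

# Toy sampler (hypothetical): replays fixed answers instead of decoding.
replay = iter(["7", "42", "42", "13", "42"])
print(self_consistency(lambda rng: next(replay), 5, random.Random(0)))  # 42
```

If the sampler always returned the same wrong answer, voting could not recover the right one; this is why a more diverse student stands to gain more from self-consistency.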

Conclusion

This paper presents MAGDi as a way to transfer the reasoning abilities of interacting LLMs into smaller models without prohibitive computational cost. The empirical results underscore the potential of structured distillation for building efficient and robust reasoning models that generalize beyond their training tasks and retain the output diversity that advanced inference techniques rely on.