Introduction

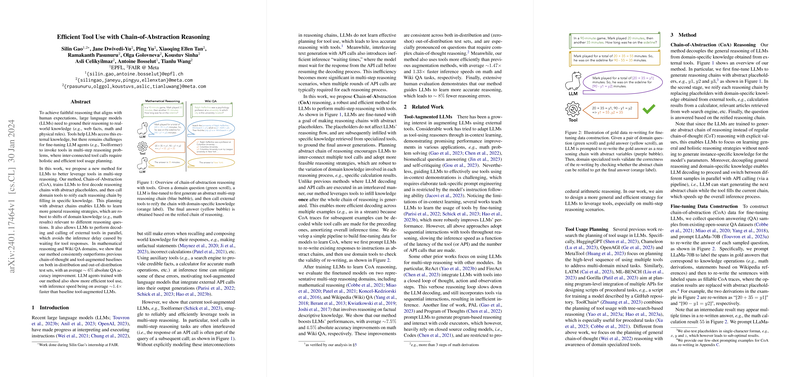

To improve the performance of LLMs on complex reasoning tasks, recent research has introduced an approach called "Chain-of-Abstraction" (CoA) reasoning. CoA is designed to make multi-step problem solving both more accurate and faster. The model first writes a reasoning chain that contains abstract placeholders, and domain-specific tools then fill those placeholders with concrete values. This contrasts with existing tool-augmented models, which interleave text generation with API calls and must pause decoding while waiting for each tool response, a significant source of inefficiency.
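To make the placeholder idea concrete, here is a minimal Python sketch of the filling ("reification") step for arithmetic. The bracket syntax `[expression = yN]`, the function name `reify`, and the toy evaluator that stands in for a domain tool are all illustrative assumptions, not the paper's actual chain format or tooling. Placeholders are filled left to right, so later steps can reuse values produced by earlier ones.

```python
import ast
import operator
import re

# Operators the toy arithmetic "tool" may evaluate; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

# Hypothetical placeholder syntax: "[<expression> = yN]", e.g. "[20 + 35 = y1]".
_STEP = re.compile(r"\[([^\[\]=]+)=\s*(y\d+)\s*\]")
_NAME = re.compile(r"\by\d+\b")


def _evaluate(node: ast.AST, env: dict) -> float:
    """Evaluate a small arithmetic AST, reading earlier placeholders from env."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body, env)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.Name):
        return env[node.id]  # KeyError if the chain uses a value before defining it
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left, env), _evaluate(node.right, env))
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


def reify(chain: str) -> tuple[str, dict]:
    """Fill every placeholder in an abstract chain, left to right."""
    env: dict = {}

    def fill(match: re.Match) -> str:
        expr, var = match.group(1).strip(), match.group(2)
        env[var] = _evaluate(ast.parse(expr, mode="eval"), env)
        # Show the step with earlier placeholders replaced by concrete values.
        shown = _NAME.sub(lambda m: str(env[m.group(0)]), expr)
        return f"[{shown} = {env[var]}]"

    # re.sub visits matches left to right, so y1 is known before y2 needs it.
    return _STEP.sub(fill, chain), env


abstract = "Tom picks [20 + 35 = y1] apples, then doubles them: [y1 * 2 = y2]."
text, values = reify(abstract)
print(text)    # Tom picks [20 + 35 = 55] apples, then doubles them: [55 * 2 = 110].
print(values)  # {'y1': 55, 'y2': 110}
```

Restricting evaluation to a whitelist of AST node types keeps the toy tool from executing arbitrary code, which is why an unsupported operator such as `**` raises `ValueError` instead of being evaluated.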

Methodology

The key innovation of CoA is a two-stage process. First, LLMs are fine-tuned to produce reasoning chains that use abstract placeholders instead of concrete values. Second, these chains are 'reified': domain-specific tools fill each placeholder with the specific knowledge it requires. Decoupling general reasoning from domain-specific knowledge lets the model learn more general reasoning strategies, which makes its performance more robust. It also speeds up inference: because decoding an abstract chain does not have to wait for tool responses, the model can decode the chain for the next sample while the tools reify the current one.
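The latency benefit of this decoupling can be sketched with Python's standard `concurrent.futures` module. In this toy version, `decode_abstract_chain` and `reify_with_tools` are hypothetical stand-ins that simply sleep to simulate model decoding and tool latency, and `sequential` is a simplified baseline in which every sample waits for its own tool calls. The point is only the scheduling pattern: tools reify one sample in a background thread while the main loop decodes the next.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor


def decode_abstract_chain(question: str) -> str:
    """Hypothetical stand-in for the LLM writing an abstract chain."""
    time.sleep(0.05)  # simulate 50 ms of decoding
    return f"abstract chain for: {question}"


def reify_with_tools(chain: str) -> str:
    """Hypothetical stand-in for the tool calls that fill placeholders."""
    time.sleep(0.05)  # simulate 50 ms of tool latency
    return chain.replace("abstract", "reified", 1)


def sequential(questions: list[str]) -> list[str]:
    """Baseline: each sample waits for its own tool calls before the next starts."""
    return [reify_with_tools(decode_abstract_chain(q)) for q in questions]


def pipelined(questions: list[str]) -> list[str]:
    """Decode sample i+1 on the main thread while sample i is reified in the background."""
    futures: list[Future] = []
    with ThreadPoolExecutor(max_workers=4) as pool:
        for q in questions:
            chain = decode_abstract_chain(q)
            futures.append(pool.submit(reify_with_tools, chain))
        # Collect in submission order so outputs line up with the inputs.
        return [f.result() for f in futures]


if __name__ == "__main__":
    qs = [f"q{i}" for i in range(4)]
    for run in (sequential, pipelined):
        start = time.perf_counter()
        run(qs)
        print(f"{run.__name__}: {time.perf_counter() - start:.2f} s")
```

With four samples and the simulated 50 ms costs, the sequential loop takes at least 0.4 s, while the pipelined loop finishes in roughly 0.25 s because only the last tool call is still pending once decoding ends. Real speedups depend on actual decoding and tool latencies.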

Performance Evaluation

The researchers applied CoA reasoning to several LLM architectures and evaluated it on mathematical reasoning and Wikipedia-based question answering. The results are notable: CoA achieves an average absolute QA accuracy improvement of about 6% over chain-of-thought and tool-augmented baselines, and its inference is on average about 1.4× faster than that of tool-augmented baselines. The gains hold on both in-distribution and out-of-distribution test sets, underscoring the method's robustness. In addition, extensive human evaluation found that, beyond its accuracy gains, CoA reasoning produces approximately 8% fewer reasoning errors.
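Because the accuracy figure is an absolute improvement, it is measured in percentage points rather than as a fraction of the baseline. The small helper functions below (hypothetical names) show the difference, along with one common way to express a speedup as the ratio of baseline inference time to new inference time. The input numbers are invented purely to illustrate the arithmetic and are not results from the study.

```python
def absolute_gain(acc_base: float, acc_new: float) -> float:
    """Accuracy difference in percentage points (inputs given in percent)."""
    return acc_new - acc_base


def relative_gain(acc_base: float, acc_new: float) -> float:
    """Improvement as a fraction of the baseline accuracy."""
    return (acc_new - acc_base) / acc_base


def speedup(time_base: float, time_new: float) -> float:
    """How many times faster the new method is; values above 1 mean faster."""
    return time_base / time_new


# Invented numbers, purely to illustrate the arithmetic.
print(absolute_gain(40.0, 46.0))  # 6.0 (percentage points)
print(relative_gain(40.0, 46.0))  # 0.15 (a 15% relative gain)
print(speedup(7.0, 5.0))          # 1.4
```

The same 6-point absolute gain would be a 15% relative gain on a 40% baseline but only a 10% relative gain on a 60% baseline, which is why the distinction matters when comparing reported figures.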

Relevance and Potential

This research marks a shift in how LLMs use tools, toward a more efficient design that separates generating a reasoning chain from executing specialized knowledge operations. The findings suggest that CoA reasoning can improve both the accuracy of complex multi-step reasoning and the speed of inference. Its success in both mathematical and factual domains also suggests that the method may transfer to other areas that require complex reasoning. More broadly, CoA could widen the range of LLM applications by making models more reliable and efficient partners in problem solving across diverse knowledge domains.