Knowledge-Driven CoT: Exploring Faithful Reasoning in LLMs for Knowledge-intensive Question Answering

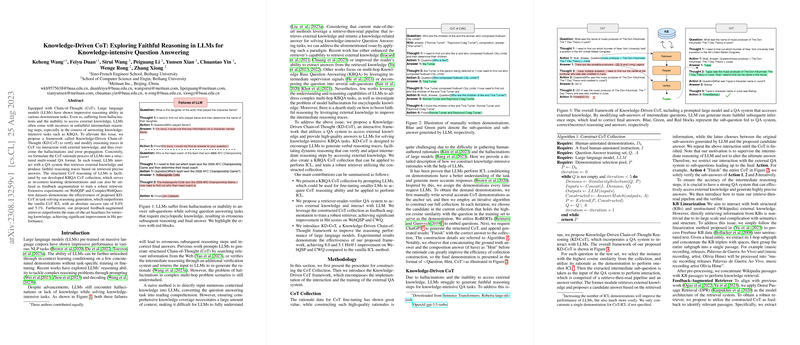

The paper "Knowledge-Driven CoT: Exploring Faithful Reasoning in LLMs for Knowledge-intensive Question Answering" addresses a critical challenge facing large language models (LLMs): their tendency to hallucinate and to propagate errors in knowledge-intensive question answering (QA) tasks. It introduces the Knowledge-Driven Chain-of-Thought (KD-CoT) framework, which aims to make LLMs' reasoning more faithful by having the model interact with a QA system that retrieves and verifies external knowledge.

Background and Motivation

LLMs equipped with Chain-of-Thought (CoT) prompting have shown significant potential on a variety of complex reasoning tasks. CoT prompting elicits detailed intermediate reasoning steps, giving the model a structured approach to problem-solving. However, these intermediate steps often contain hallucinations (fabricated facts or outright errors), because the model relies on closed, internal knowledge and has no access to dynamic, up-to-date external knowledge; an error in one step then propagates to the steps that build on it. Existing remedies, such as simple retrieval mechanisms or independent verification systems, fall short in complex, multi-hop reasoning scenarios.

Proposed Methodology

KD-CoT addresses these issues by recasting the LLM's reasoning process as a structured, multi-round QA process in which, at each round, the LLM interacts with a QA system that retrieves external knowledge. The process works as follows:

- Structured CoT Reasoning: In-context learning (ICL) demonstrations, curated from a knowledge-base QA (KBQA) CoT collection, guide the LLM to produce structured intermediate steps.

- QA System Integration: Each step is checked by a QA system with a retriever-reader-verifier architecture. The retriever fetches relevant external knowledge, the reader processes and condenses the retrieved information, and the verifier checks the accuracy of the generated reasoning step.

Together, these components validate and, where necessary, correct the intermediate reasoning, with the aim of reducing hallucinations and improving final answer accuracy.
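The multi-round interaction can be pictured as a loop: the LLM proposes a sub-question and its own answer, the QA system answers the same sub-question from external knowledge, and the verifier decides which answer the next round builds on. The Python below is a minimal sketch under loud assumptions: every name in it (`kd_cot`, `llm_step`, `retrieve`, `read`, `verify`, the toy triple store) is hypothetical and is not the API of the KD-CoT repository, the scripted "LLM" replaces a model prompted with KBQA CoT demonstrations, and the exact-match verifier is a trivial stand-in for the framework's verifier.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object) in a toy knowledge base


@dataclass
class Step:
    """One round of the multi-round QA trace."""
    sub_question: str
    llm_answer: str          # answer the LLM proposed on its own
    final_answer: str = ""   # answer kept after verification
    corrected: bool = False  # True if the QA system's answer replaced the LLM's


def kd_cot(
    question: str,
    llm_step: Callable[[str, List[Step]], Optional[Tuple[str, str]]],
    retrieve: Callable[[str], List[Triple]],
    read: Callable[[str, List[Triple]], str],
    verify: Callable[[str, str, str], bool],
    max_rounds: int = 5,
) -> List[Step]:
    history: List[Step] = []
    for _ in range(max_rounds):
        proposal = llm_step(question, history)  # LLM proposes next sub-question + answer
        if proposal is None:                    # LLM signals that reasoning is complete
            break
        sub_q, llm_ans = proposal
        qa_ans = read(sub_q, retrieve(sub_q))   # QA system answers from external knowledge
        step = Step(sub_q, llm_ans)
        if verify(sub_q, llm_ans, qa_ans):
            step.final_answer = llm_ans
        else:                                   # replace the unsupported answer
            step.final_answer = qa_ans
            step.corrected = True
        history.append(step)                    # later rounds condition on verified answers
    return history


# --- Toy stand-ins (hypothetical, for illustration only) ---
KB: List[Triple] = [
    ("Inception", "directed_by", "Christopher Nolan"),
    ("Christopher Nolan", "born_in", "London"),
]
RELATION_CUES = {"directed_by": "directed", "born_in": "born"}


def toy_retrieve(sub_q: str) -> List[Triple]:
    # Keyword retrieval: return triples whose subject is mentioned in the sub-question.
    return [t for t in KB if t[0] in sub_q]


def toy_read(sub_q: str, triples: List[Triple]) -> str:
    # Return the object of the first triple whose relation cue appears in the sub-question.
    for _, rel, obj in triples:
        if RELATION_CUES[rel] in sub_q.lower():
            return obj
    return "unknown"


def toy_verify(sub_q: str, llm_ans: str, qa_ans: str) -> bool:
    # Keep the LLM's answer if it agrees with the QA system or the QA system has no answer.
    return qa_ans == "unknown" or llm_ans.lower() == qa_ans.lower()


def toy_llm_step(question: str, history: List[Step]) -> Optional[Tuple[str, str]]:
    # Scripted "LLM": round 1 hallucinates the director; round 2 builds on the verified answer.
    if len(history) == 0:
        return ("Who directed Inception?", "Steven Spielberg")
    if len(history) == 1:
        return (f"Where was {history[0].final_answer} born?", "London")
    return None


steps = kd_cot("Where was the director of Inception born?",
               toy_llm_step, toy_retrieve, toy_read, toy_verify)
for s in steps:
    print(s.sub_question, "->", s.final_answer, "(corrected)" if s.corrected else "")
```

In this toy run, the LLM's first sub-answer ("Steven Spielberg") disagrees with the retrieved triple and is replaced by "Christopher Nolan", so the second sub-question is built on the corrected answer rather than the hallucinated one. That is the error-propagation problem the framework targets, in miniature.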

Results and Discussion

Empirical evaluation on the WebQSP and ComplexWebQuestions (CWQ) datasets shows substantial gains for KD-CoT. On the Hit@1 metric, KD-CoT outperformed vanilla CoT ICL by an absolute 8.0% on WebQSP and 5.1% on CWQ, demonstrating the effectiveness of the interactive approach. The results point to the following:

- Enhanced Intermediate Reasoning: The integration of an external QA system to verify and refine reasoning steps significantly mitigates hallucinations.

- Improved Retrieval Accuracy: KD-CoT's feedback-augmented retriever outperformed state-of-the-art retrieval baselines, with notable gains in Hit and recall, underscoring how much precise knowledge retrieval contributes to LLM performance.
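The answer-level Hit@1 and the retrieval-level Hit and recall figures above are easier to interpret with their definitions in hand. The Python sketch below uses common definitions of these metrics; this is an assumption, since the paper's evaluation scripts may differ in details such as answer normalization or the unit of retrieval being scored. The function names and the toy data are hypothetical. Note also that an "absolute" gain of 8.0% means 8.0 percentage points of Hit@1, not an 8.0% relative increase.

```python
from typing import List, Sequence, Set


def hit_at_1(ranked_preds: Sequence[List[str]], gold: Sequence[Set[str]]) -> float:
    """Share of questions whose top-ranked prediction is one of the gold answers."""
    hits = sum(1 for preds, answers in zip(ranked_preds, gold) if preds and preds[0] in answers)
    return hits / len(gold)


def retrieval_hit(retrieved: Sequence[Set[str]], gold: Sequence[Set[str]]) -> float:
    """Share of questions for which at least one gold answer was retrieved."""
    return sum(1 for r, g in zip(retrieved, gold) if r & g) / len(gold)


def retrieval_recall(retrieved: Sequence[Set[str]], gold: Sequence[Set[str]]) -> float:
    """Per-question fraction of gold answers retrieved, averaged over questions."""
    return sum(len(r & g) / len(g) for r, g in zip(retrieved, gold)) / len(gold)


# Toy data, made up for illustration (not results from the paper).
gold = [{"London"}, {"Paris", "Lyon"}]
ranked_preds = [["London", "Oxford"], ["Marseille", "Paris"]]
retrieved = [{"London", "Oxford"}, {"Paris"}]

print(hit_at_1(ranked_preds, gold))       # 0.5
print(retrieval_hit(retrieved, gold))     # 1.0
print(retrieval_recall(retrieved, gold))  # 0.75
```

The toy numbers show why both retrieval metrics are reported: the second question counts as a full retrieval hit because one gold answer was found, yet only half of its gold answers were recalled.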

Implications and Future Work

KD-CoT has broad theoretical and practical implications:

- Theoretical: The framework offers a methodology for dynamically integrating structured and unstructured knowledge sources into LLM reasoning, pushing the boundary of automated reasoning.

- Practical: In real-world applications, especially those requiring accurate and up-to-date information retrieval, KD-CoT could be integral to developing reliable AI systems for domains such as legal research, medical diagnosis, and academic research.

Despite its advantages, the framework has limitations. Relying on an external QA system can add latency, and the multi-round interaction is computationally intensive. Moreover, because knowledge domains change over time, the QA system must be continually retrained and updated.

Future research could explore optimizing the retriever-reader-verifier interaction to minimize computational overhead. Additionally, developing more advanced, context-aware retrieval mechanisms could further enhance the fidelity of reasoning. Integrating KD-CoT with real-time data sources and more scalable models would also be valuable directions for future investigations.

Conclusion

This paper makes significant progress toward more reliable LLMs for knowledge-intensive tasks. By coupling structured reasoning with dynamic access to external knowledge sources, KD-CoT represents a promising direction for more accurate and reliable AI-driven reasoning. The experimental results support the framework's potential to improve how LLMs handle complex, multi-hop queries and to inform future work in the field.

For readers interested in the implementation specifics, the authors' repository is linked below:

- The code and data for KD-CoT are available at: https://github.com/AdelWang/KD-CoT/tree/main.