Unveiling YOLO-World for Open-Vocabulary Object Detection

Enhancing YOLO with Open-Vocabulary Capabilities

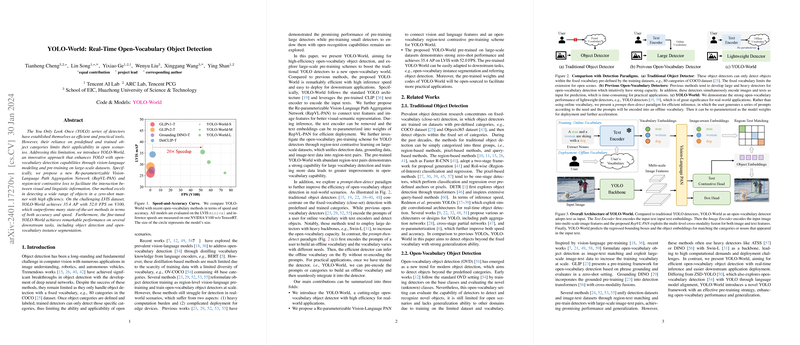

The YOLO (You Only Look Once) series of detectors has been widely used in practice because of its efficiency in object detection. YOLO-World is a recent advance that extends traditional YOLO detectors with vision-language modeling, allowing them to detect objects beyond a fixed set of predefined categories. After large-scale pre-training, YOLO-World delivers both high accuracy and high speed: on the challenging LVIS dataset it achieves an Average Precision (AP) of 35.4 while running at 52.0 FPS on an NVIDIA V100.

Technical Innovations

A key innovation in YOLO-World is the Re-parameterizable Vision-Language Path Aggregation Network (RepVL-PAN), an architecture that fuses visual and linguistic information so that the two modalities interact more closely. Alongside it, a region-text contrastive loss is introduced to strengthen open-vocabulary detection. Notably, the overall architecture follows the standard YOLO blueprint but gains substantially from adding a CLIP-based text encoder, which enriches the visual-semantic representations.
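To make the two ideas in this paragraph more concrete, here is a minimal pure-Python sketch, assuming toy embedding vectors instead of real CLIP outputs. `max_sigmoid_guidance` shows one way text can steer image features (each pixel feature is scaled by the sigmoid of its best match against the vocabulary); this is our simplified reading of the text-guided fusion in RepVL-PAN, not its actual layer. `region_text_scores` computes scaled cosine similarities between a region embedding and each text embedding, with `alpha` and `beta` standing in for a learnable scale and shift, and `region_text_loss` applies softmax cross-entropy over the vocabulary as a simplified stand-in for the region-text contrastive loss. All function names are hypothetical, and, as in other YOLO detectors, a full training objective would also include box-regression terms.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def max_sigmoid_guidance(pixels: List[Vector], texts: List[Vector]) -> List[Vector]:
    """Re-weight each pixel feature by sigmoid(max_j <pixel, text_j>).

    Locations that strongly match at least one vocabulary entry keep more of
    their feature magnitude; unrelated locations are damped.
    """
    out = []
    for p in pixels:
        gate = sigmoid(max(dot(p, t) for t in texts))
        out.append([gate * x for x in p])
    return out


def region_text_scores(region: Vector, texts: List[Vector],
                       alpha: float = 1.0, beta: float = 0.0) -> List[float]:
    """s_j = alpha * <norm(region), norm(text_j)> + beta for every text j."""
    r = l2_normalize(region)
    return [alpha * dot(r, l2_normalize(t)) + beta for t in texts]


def region_text_loss(region: Vector, texts: List[Vector], target: int,
                     alpha: float = 10.0, beta: float = 0.0) -> float:
    """Softmax cross-entropy of the assigned text index against all texts."""
    scores = region_text_scores(region, texts, alpha, beta)
    m = max(scores)  # subtract the max before exponentiating for stability
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_sum_exp - scores[target]
```

Normalizing both vectors bounds the raw similarity to [-1, 1], so `alpha` behaves like an inverse temperature: larger values sharpen the softmax over the vocabulary.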

Pre-training and Advantages Over Existing Solutions

Pre-training plays a pivotal role in YOLO-World's development. The paper presents a training scheme that unifies detection, grounding, and image-text data into region-text pairs, leading to marked improvements in open-vocabulary capability. When compared against state-of-the-art methods, YOLO-World outperforms them in both accuracy and inference speed, offering a 20x speedup for real-world applications.
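The unification step can be pictured as mapping every data source onto a single record type. The sketch below, with hypothetical names (`RegionText`, `from_detection`, `from_grounding`, `vocabulary`), turns detection labels into pairs by using the category name as the text, and turns grounding annotations into pairs by slicing each phrase out of its caption. Image-text data carry no boxes, so they would first need pseudo boxes from some labeling step; that step is omitted here, and none of this code is taken from the YOLO-World codebase.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass(frozen=True)
class RegionText:
    """One training pair: an image region and the text that describes it."""
    image_id: str
    box: Box
    text: str


def from_detection(image_id: str, boxes: List[Box], class_ids: List[int],
                   class_names: Dict[int, str]) -> List[RegionText]:
    """Detection data: the category name becomes the text for each box."""
    return [RegionText(image_id, b, class_names[c]) for b, c in zip(boxes, class_ids)]


def from_grounding(image_id: str, caption: str,
                   phrase_boxes: List[Tuple[Tuple[int, int], Box]]) -> List[RegionText]:
    """Grounding data: the caption span (start, end) linked to each box becomes its text."""
    return [RegionText(image_id, b, caption[s:e]) for (s, e), b in phrase_boxes]


def vocabulary(pairs: List[RegionText]) -> List[str]:
    """Distinct texts in first-seen order; a pair's class label is its text's index here."""
    return list(dict.fromkeys(p.text for p in pairs))
```

Once every source is expressed as region-text pairs, a batch's vocabulary is simply its set of distinct texts, and each region's classification target is the position of its text in that list.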

Open-vocabulary Detection and Downstream Tasks

YOLO-World moves beyond the limited vocabulary of traditional object detectors and adapts readily to downstream tasks such as object detection and instance segmentation. Its zero-shot performance shows strong generalization, and its strong results on open-vocabulary instance segmentation further underscore its adaptability.
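At inference time, open-vocabulary detection reduces to comparing each predicted region embedding with embeddings of whatever category names the user supplies. The sketch below assumes those text embeddings have already been produced by a text encoder such as CLIP and passes them in as plain lists; `OfflineVocabulary` and its `threshold` parameter are hypothetical. Normalizing and caching the vocabulary once is a simplified analogue of what we understand the "re-parameterizable" design to enable: for a fixed vocabulary, the text side can be computed offline, so the per-image cost is just dot products.

```python
import math
from typing import Dict, List, Optional, Sequence

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    """Unit-length copy of v (unchanged if v is all zeros)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)


class OfflineVocabulary:
    """Caches normalized text embeddings so classification needs only dot products.

    The embeddings are supplied directly here; in a real system they would
    come from a text encoder, which is an assumption of this sketch.
    """

    def __init__(self, embeddings: Dict[str, Vector]):
        self.names = list(embeddings)
        self.weights = [_normalize(embeddings[n]) for n in self.names]

    def classify(self, region: Vector, threshold: float = 0.5) -> Optional[str]:
        """Best-matching name, or None if no cosine score reaches the threshold."""
        r = _normalize(region)
        scores = [sum(a * b for a, b in zip(r, w)) for w in self.weights]
        best = max(range(len(scores)), key=scores.__getitem__)
        return self.names[best] if scores[best] >= threshold else None


if __name__ == "__main__":
    # A user-defined vocabulary with toy 3-d embeddings.
    vocab = OfflineVocabulary({"cat": [1.0, 0.0, 0.0], "traffic cone": [0.0, 1.0, 0.0]})
    print(vocab.classify([0.9, 0.1, 0.0]))  # cat
    print(vocab.classify([0.0, 0.0, 1.0]))  # None
```

Changing the vocabulary only means building a new `OfflineVocabulary`; nothing on the region side has to be retrained, which is what makes the approach open-vocabulary.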

Conclusion and Availability

The paper concludes by positioning YOLO-World as a ground-breaking tool for real-world applications that require efficient, adaptive open-vocabulary detection. Particularly appealing to the research community is the commitment to open-source the pre-trained weights and code, which broadens the practical applications of large-vocabulary, real-time object detection.