Object Detection Using YOLO-MS: A New Approach to Multi-Scale Representation Learning

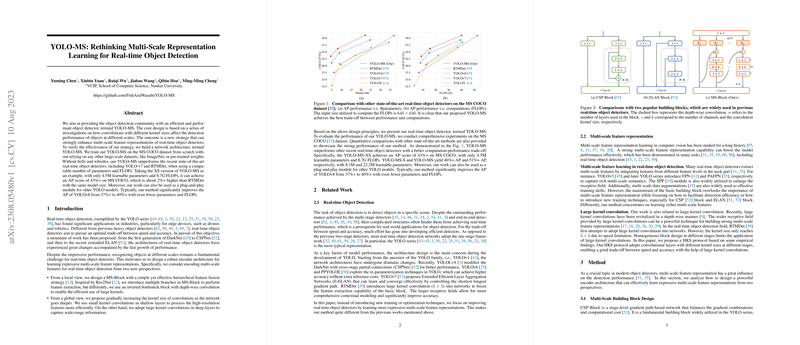

The paper "YOLO-MS: Rethinking Multi-Scale Representation Learning for Real-time Object Detection" proposes a way to improve real-time object detection by strengthening multi-scale feature representations. The authors introduce YOLO-MS, a YOLO-style detector designed after re-examining how convolutional layers with different kernel sizes affect the detection of objects at different scales.

Technical Contributions and Results

The central idea of YOLO-MS is to build stronger multi-scale feature representations by combining convolutional layers with different kernel sizes. The authors experimentally study how convolutions of different kernel sizes affect detection performance, and they use these findings to design a network that represents objects robustly across scales, which is essential in real-time detection. Their main building block, the MS-Block, adopts a hierarchical feature-fusion scheme inspired by Res2Net but uses depth-wise convolutions so that large kernels remain affordable.
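The hierarchical fusion inside the MS-Block can be illustrated with a toy sketch, assuming the Res2Net-style rule Y_1 = X_1 and Y_i = IB(Y_{i-1} + X_i) for the later channel groups, where IB is the inverted-bottleneck branch. To keep the example dependency-free, each channel group is a 1-D list of floats, and the branch is replaced by a hypothetical `box_filter` (a zero-padded moving sum standing in for a k-wide depth-wise convolution). The function names are illustrative and are not taken from the YOLO-MS code.

```python
from typing import Callable, List

Signal = List[float]  # toy stand-in for one channel group's feature map (1-D here)


def box_filter(x: Signal, k: int) -> Signal:
    """Hypothetical stand-in for a k-wide depth-wise conv: zero-padded moving sum."""
    assert k % 2 == 1, "an odd kernel keeps the output aligned with the input"
    r = k // 2
    n = len(x)
    return [sum(x[j] for j in range(i - r, i + r + 1) if 0 <= j < n) for i in range(n)]


def hierarchical_fusion(groups: List[Signal], branch: Callable[[Signal], Signal]) -> List[Signal]:
    """Assumed Res2Net-style fusion: Y_1 = X_1, Y_i = branch(Y_{i-1} + X_i) for i > 1."""
    outputs = [list(groups[0])]  # the first group passes through unchanged
    for x_i in groups[1:]:
        # Add the previous group's output element-wise before applying the branch.
        fused = [a + b for a, b in zip(outputs[-1], x_i)]
        outputs.append(branch(fused))
    # In a full block these outputs would be concatenated and mixed by a 1x1 conv.
    return outputs


if __name__ == "__main__":
    length, k = 21, 5
    impulse = [0.0] * length
    impulse[length // 2] = 1.0  # a single active position in the first group
    groups = [impulse] + [[0.0] * length for _ in range(3)]
    ys = hierarchical_fusion(groups, lambda s: box_filter(s, k))
    # Count how many positions each group's output "sees" from the impulse.
    print([sum(1 for v in y if v != 0) for y in ys])  # [1, 5, 9, 13]
```

Feeding an impulse into the first group shows why this layout is multi-scale: each later group has passed through one more k-wide filter, so its support grows by k - 1 positions per group even though every branch uses the same kernel size.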

YOLO-MS is evaluated on the MS COCO dataset, where the authors report that it outperforms contemporary real-time detectors such as YOLOv7 and RTMDet. Notably, the YOLO-MS-XS variant, with only 4.5M learnable parameters and 8.7G FLOPs, reaches an Average Precision (AP) above 43% on MS COCO, about 2% higher than RTMDet at the same model size.

Methodological Innovations

The paper emphasizes two primary methodological contributions:

- MS-Block Design: The MS-Block uses an inverted bottleneck with a depth-wise convolution so that large-kernel operations stay efficient. It differs from similar designs by processing features in multiple branches while keeping the computational overhead manageable.

- Heterogeneous Kernel Selection (HKS) Protocol: The authors propose an HKS protocol that assigns different kernel sizes to different network stages according to their feature resolution. Shallow, high-resolution stages use small kernels to stay efficient, while deeper, low-resolution stages use larger kernels to capture broader context, which improves the detection of large objects without sacrificing the fine detail needed for small ones.
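A rough cost calculation shows why depth-wise convolutions and an HKS-style schedule keep large kernels affordable. The sketch below counts weights and multiply-accumulates (MACs) for stride-1 convolutions with bias ignored. The four-stage layout (640×640 input, strides 4/8/16/32, channels 32 to 256) is a hypothetical example, and the 3/5/7/9 kernel schedule is an assumed HKS configuration used for illustration, not a figure quoted from the paper.

```python
from typing import List, Tuple


def depthwise_macs(h: int, w: int, c: int, k: int) -> int:
    """MACs of a stride-1 k x k depth-wise conv: one k x k filter per channel."""
    return h * w * c * k * k


def dense_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_params(c: int, k: int) -> int:
    """Weights of a k x k depth-wise convolution (bias ignored)."""
    return k * k * c


# Hypothetical backbone layout: (square spatial size, channels) per stage for a
# 640x640 input at strides 4/8/16/32. Illustrative only, not YOLO-MS's real widths.
STAGES: List[Tuple[int, int]] = [(160, 32), (80, 64), (40, 128), (20, 256)]


def total_macs(kernels: List[int]) -> int:
    """Sum depth-wise MACs over all stages for a given per-stage kernel schedule."""
    return sum(depthwise_macs(s, s, c, k) for (s, c), k in zip(STAGES, kernels))


if __name__ == "__main__":
    hks = total_macs([3, 5, 7, 9])      # assumed schedule: small kernels early, large late
    uniform = total_macs([9, 9, 9, 9])  # the largest kernel in every stage
    print(hks, uniform, round(hks / uniform, 3))  # 35942400 124416000 0.289
    print(dense_params(256, 256, 9), depthwise_params(256, 9))  # 5308416 20736
```

Under these assumptions, the increasing schedule needs about 29% of the depth-wise MACs of using 9×9 kernels everywhere, because the largest kernels run only on the smallest feature maps. Likewise, a 9×9 depth-wise layer on 256 channels has 256 times fewer weights than a dense 9×9 convolution of the same width.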

Implications and Future Directions

YOLO-MS is relevant wherever real-time detectors must balance speed against accuracy. This includes industrial deployment on edge devices as well as applications that must detect objects across a wide range of scales and scene complexities.

As for future directions, the paper acknowledges remaining challenges, such as the inference speed of YOLO-MS relative to its predecessors. Closing this gap could involve using large-kernel convolutions more efficiently or simplifying the hierarchical design to speed up processing.

In summary, YOLO-MS offers a strong framework and a foundation for further advances in real-time object detection. Its methods invite exploration in related detection tasks and highlight the value of flexible architectures that balance coverage of object scales against computational cost.