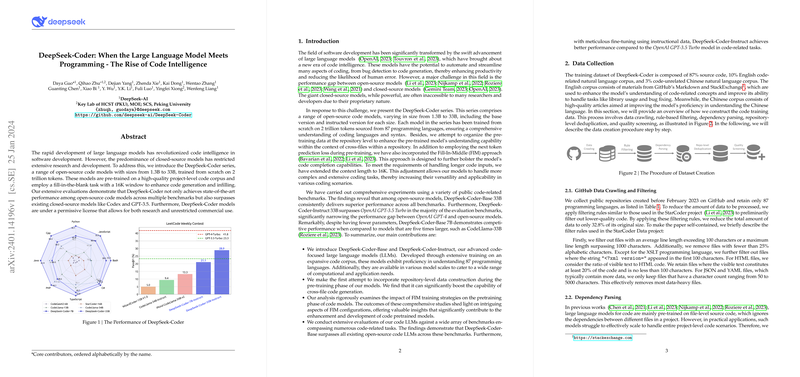

This paper introduces DeepSeek-Coder, a series of open-source LLMs specifically designed for code-related tasks. The models range in size from 1.3B to 33B parameters and are trained from scratch on a 2-trillion-token corpus. The primary motivation is to close the performance gap between powerful closed-source models (such as GPT-4) and existing open-source code models, making state-of-the-art code intelligence more accessible for research and commercial use.

Key Innovations and Training Details:

- Data Curation: The training data comprises 87% source code (across 87 programming languages), 10% English code-related text (GitHub Markdown, StackExchange), and 3% Chinese text. A rigorous data processing pipeline was employed:

  - Crawling & Filtering: Initial collection from public GitHub repositories, followed by rule-based filtering (line length, alphabetic character ratio, etc.) similar to StarCoder, significantly reducing data volume.

  - Dependency Parsing: A novel approach using a topological sort (Algorithm 1) based on inferred dependencies (e.g., import and include statements) to structure code at the repository level. Files are concatenated in an order that respects their dependencies, aiming to improve the model's understanding of project-level context. File path information is included as comments.

  - Repo-Level Deduplication: Near-deduplication is applied to entire concatenated repositories (rather than individual files) to preserve structural integrity while removing redundancy.

  - Quality Screening & Decontamination: Further filtering using compilers, quality models, and heuristics. N-gram filtering is used to remove potential contamination from benchmark datasets such as HumanEval and MBPP.

- Training Objectives:

  - Next Token Prediction: The standard language modeling objective.

  - Fill-in-the-Middle (FIM): Employed to enhance code completion and infilling capabilities. Ablation studies (Figure 3) led to choosing a 50% FIM rate using the PSM (Prefix-Suffix-Middle) mode over SPM or MSP, balancing infilling performance and code completion ability. FIM is applied at the document level before packing, using special sentinel tokens.

- Model Architecture: Based on the DeepSeek LLM architecture, these are decoder-only Transformers using Rotary Position Embeddings (RoPE) and SwiGLU activations. The 33B model incorporates Grouped-Query Attention (GQA) for efficiency. FlashAttention v2 is used for faster attention computation. Specific hyperparameters (hidden size, layers, heads) are provided for each model size (Table 3).

- Long Context: To extend the effective context window to 16K tokens, which benefits repository-level understanding, the RoPE parameters were adjusted (linear scaling factor of 4, base frequency of 100,000) and the models were trained for an additional 1,000 steps.

- Instruction Tuning: Base models were fine-tuned on high-quality, Alpaca-formatted instruction data to create the DeepSeek-Coder-Instruct variants, improving their ability to follow instructions and engage in dialogue (Figure 4).
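The repository-level ordering described under Dependency Parsing can be illustrated with a small Python sketch. It is not the paper's Algorithm 1: the regex-based extractor only recognises Python `import x` / `from x import y` lines, and the `# path` header format, the function names (`extract_deps`, `dependency_order`, `concatenate_repo`), and the tie-breaking rule for import cycles are all assumptions made for illustration.

```python
import re
from collections import defaultdict

# Hypothetical extractor: only matches Python "import foo" / "from foo import bar"
# lines and maps module "foo.bar" to the repository file "foo/bar.py".
IMPORT_RE = re.compile(r"^[ \t]*(?:from|import)[ \t]+([\w.]+)", re.MULTILINE)


def extract_deps(path: str, source: str, files: dict[str, str]) -> set[str]:
    """Return the repository files that `path` imports."""
    deps = set()
    for module in IMPORT_RE.findall(source):
        candidate = module.replace(".", "/") + ".py"
        if candidate in files and candidate != path:
            deps.add(candidate)
    return deps


def dependency_order(files: dict[str, str]) -> list[str]:
    """Topologically sort files so each one follows the files it imports."""
    deps = {p: extract_deps(p, src, files) for p, src in files.items()}
    dependents = defaultdict(set)  # reverse edges: dependency -> importers
    for path, ds in deps.items():
        for d in ds:
            dependents[d].add(path)
    unresolved = {p: len(ds) for p, ds in deps.items()}
    order = []
    while unresolved:
        # Kahn-style step: emit a file with no unresolved dependencies. If an
        # import cycle leaves none, fall back to the file with the fewest
        # (an assumption of this sketch); ties break alphabetically.
        path = min(unresolved, key=lambda p: (unresolved[p], p))
        del unresolved[path]
        order.append(path)
        for child in dependents[path]:
            if child in unresolved:
                unresolved[child] -= 1
    return order


def concatenate_repo(files: dict[str, str]) -> str:
    """Join files in dependency order, each preceded by a path comment."""
    return "\n".join(f"# {p}\n{files[p]}" for p in dependency_order(files))


repo = {
    "main.py": "from utils import helper\nprint(helper())\n",
    "utils.py": "from config import VALUE\n\ndef helper():\n    return VALUE\n",
    "config.py": "VALUE = 42\n",
}
print(dependency_order(repo))  # ['config.py', 'utils.py', 'main.py']
```

Falling back to the least-blocked file keeps the ordering total even when imports form a cycle, so every file in the repository still ends up in the concatenated sample.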
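The benchmark decontamination step under Quality Screening & Decontamination can be sketched in the same spirit. This version assumes whitespace tokenisation and a default window of n = 10 tokens; both are illustrative choices, and the function names are hypothetical. Any training document that shares at least one n-gram with a benchmark prompt or solution is dropped.

```python
def ngrams(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """All contiguous n-token windows (empty if there are fewer than n tokens)."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_benchmark_index(benchmark_texts: list[str], n: int = 10) -> set[tuple[str, ...]]:
    """Collect n-grams from benchmark prompts/solutions (whitespace tokens, an assumption)."""
    index: set[tuple[str, ...]] = set()
    for text in benchmark_texts:
        index |= ngrams(text.split(), n)
    return index


def decontaminate(documents: list[str], benchmark_texts: list[str], n: int = 10) -> list[str]:
    """Drop every training document that shares at least one n-gram with a benchmark."""
    index = build_benchmark_index(benchmark_texts, n)
    # Benchmark strings shorter than n tokens contribute no n-grams, so this
    # sketch cannot catch them; they would need an exact-match check instead.
    return [doc for doc in documents if ngrams(doc.split(), n).isdisjoint(index)]


benchmark = ["def add ( a , b ) : return a + b"]
training_docs = [
    "x = 1",
    "# copied solution def add ( a , b ) : return a + b",
]
print(decontaminate(training_docs, benchmark, n=5))  # ['x = 1']
```

Building the benchmark index once as a set makes each document check a single set-intersection test, which is what keeps this kind of filter cheap over a multi-terabyte corpus.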
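The FIM objective described under Training Objectives amounts to a per-document rewrite applied before packing. In the sketch below, the sentinel strings are placeholders (the released tokenizer defines its own special tokens) and splitting at random character offsets is an assumption; only the 50% rate and the PSM ordering come from the description above.

```python
import random

# Placeholder sentinels; the released tokenizer defines its own special tokens.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def apply_fim(doc: str, rng: random.Random, fim_rate: float = 0.5) -> str:
    """With probability `fim_rate`, rewrite one document into PSM order."""
    if len(doc) < 2 or rng.random() >= fim_rate:
        return doc  # stays an ordinary next-token-prediction sample
    # Two distinct random cut points split the text into prefix | middle | suffix.
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    # PSM: prefix and suffix come first, so the middle is predicted with both
    # sides as context; the ordinary LM loss then trains the model to infill.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


rng = random.Random(0)
docs = ["def area(r):\n    return 3.14159 * r * r\n", "print('hello')\n"]
# Applied per document, before documents are packed into training sequences.
samples = [apply_fim(d, rng) for d in docs]
```

Because the rewritten sample is still trained with plain next-token prediction, the same model learns both left-to-right completion (from untouched documents) and infilling (from PSM documents); the FIM rate sets the balance between the two.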
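To make the Long Context adjustment concrete, the sketch below computes rotary angles with the two settings mentioned above: a base frequency of 100,000 and a linear scaling factor of 4. Linear scaling is treated here as position interpolation, so position p is rotated as if it were p / 4. The interleaved channel-pair layout and the function names are assumptions; production implementations operate on tensors rather than Python lists, and the additional 1,000 training steps are not modelled.

```python
import math


def rope_angles(position: float, dim: int, base: float = 100_000.0, scale: float = 4.0) -> list[float]:
    """Rotation angle for each of the dim/2 channel pairs at `position`."""
    # Linear scaling (position interpolation): position p is treated as p / scale,
    # so a longer sequence is squeezed into a smaller range of angles.
    p = position / scale
    return [p * base ** (-2 * i / dim) for i in range(dim // 2)]


def apply_rope(x: list[float], position: int, base: float = 100_000.0, scale: float = 4.0) -> list[float]:
    """Rotate interleaved pairs (x[2i], x[2i+1]) of an even-length vector."""
    out: list[float] = []
    for i, theta in enumerate(rope_angles(position, len(x), base, scale)):
        a, b = x[2 * i], x[2 * i + 1]
        c, s = math.cos(theta), math.sin(theta)
        out += [a * c - b * s, a * s + b * c]
    return out


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


q, k = [0.3, -1.2, 0.8, 0.5], [1.0, 0.4, -0.7, 0.2]
# Attention scores depend only on the offset between positions (2 in both cases).
print(dot(apply_rope(q, 5), apply_rope(k, 3)), dot(apply_rope(q, 16_002), apply_rope(k, 16_000)))
```

Each channel pair is only rotated, so vector norms are preserved, and the score between a rotated query and key depends on their position offset divided by the scaling factor rather than on absolute positions.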

Evaluation and Results:

DeepSeek-Coder models were evaluated extensively across various benchmarks:

- Code Generation:

  - HumanEval (Multilingual) & MBPP: DeepSeek-Coder-Base 33B achieved state-of-the-art results among open-source models, significantly outperforming CodeLlama-34B (Table 4). DeepSeek-Coder-Instruct 33B surpassed GPT-3.5-Turbo on HumanEval.

  - DS-1000: Demonstrated strong performance in generating code for practical data science tasks across libraries such as Pandas, NumPy, and PyTorch (Table 5).

  - LeetCode Contest: A new benchmark created from recent LeetCode problems. DeepSeek-Coder-Instruct 33B outperformed GPT-3.5-Turbo, showing strong problem-solving skills. Chain-of-Thought (CoT) prompting further improved performance on complex problems (Table 6).

- Fill-in-the-Middle (FIM): On single-line infilling benchmarks, DeepSeek-Coder models (even the 1.3B version) showed competitive or superior performance compared to StarCoder and CodeLlama, validating the FIM training strategy (Table 7).

- Cross-File Code Completion: On the CrossCodeEval benchmark, DeepSeek-Coder-Base 6.7B outperformed similar-sized models, especially when augmented with retrieval. An ablation study confirmed the benefit of repository-level pre-training (Table 8).

- Program-based Math Reasoning: Using the PAL method on benchmarks like GSM8K and MATH, DeepSeek-Coder models showed strong mathematical reasoning capabilities when generating code to solve problems (Table 9).
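In PAL-style evaluation the model writes a program and the answer is whatever that program computes, so scoring reduces to executing the generated code and comparing its result with the reference. A minimal harness follows; the `solution()` entry-point convention, the helper names, and the word problem are hypothetical, and a real evaluation would run untrusted code in a sandbox with a timeout rather than calling `exec` directly.

```python
def run_pal_program(program: str, entry_point: str = "solution"):
    """Execute model-generated code and return what its entry point computes."""
    namespace: dict = {}
    # Unsafe outside a sandbox: real harnesses isolate and time-limit this call.
    exec(program, namespace)
    return namespace[entry_point]()


def is_correct(predicted, reference: float, tol: float = 1e-6) -> bool:
    """Numeric answer check; non-numeric outputs count as wrong."""
    try:
        return abs(float(predicted) - reference) <= tol
    except (TypeError, ValueError):
        return False


# Hypothetical model output for: "Tom has 3 boxes of 12 pencils and gives away 7.
# How many pencils does he have left?"
generated = """
def solution():
    boxes = 3
    pencils_per_box = 12
    given_away = 7
    return boxes * pencils_per_box - given_away
"""

answer = run_pal_program(generated)
print(answer, is_correct(answer, 29))  # 29 True
```

Offloading the arithmetic to the interpreter means the model is judged on whether it sets up the computation correctly, not on whether it can multiply reliably in free text.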

DeepSeek-Coder-v1.5:

The paper also introduces DeepSeek-Coder-v1.5 7B, created by continuing the pre-training of a general DeepSeek-LLM 7B base model on a mix rich in code (70%), math, and code-related natural language. This version used only next-token prediction with a 4K context. Compared to the original DeepSeek-Coder 6.7B, v1.5 showed significantly improved natural language understanding and math reasoning performance, with only a minor dip in coding benchmarks (Table 11), suggesting that grounding code models in strong general-purpose LLMs is beneficial.

Conclusion:

DeepSeek-Coder models represent a significant advancement in open-source code LLMs, achieving state-of-the-art performance through careful data curation (especially repository-level processing), optimized training strategies (including FIM), and large-scale training (2T tokens). The models excel in code generation, infilling, cross-file understanding, and math reasoning. The instruction-tuned versions are highly capable dialogue agents for coding tasks. The development of v1.5 highlights the value of building specialized models on strong generalist foundations. The models are released under a permissive license for both research and commercial use.