Evaluation of Multimodal LLM Reasoning with the Mementos Benchmark

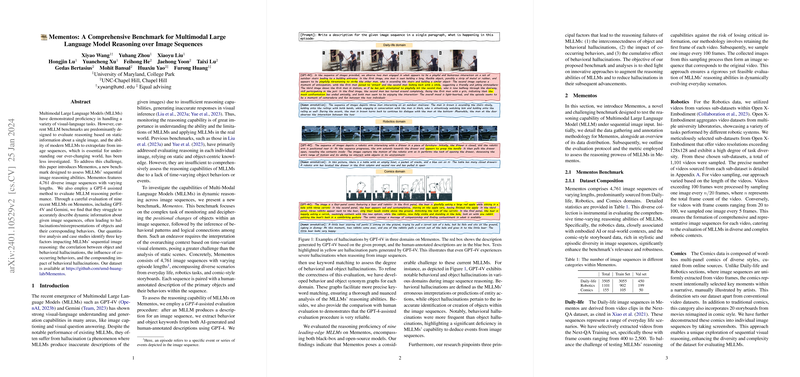

The paper presents "Mementos," a new benchmark designed to assess how well Multimodal LLMs (MLLMs) reason over image sequences. Existing benchmarks focus mainly on single-image reasoning and do not evaluate how objects' behaviors or events change over time in real-world scenarios, which limits our understanding of MLLMs' reasoning capabilities. Mementos addresses this gap with 4,761 diverse image sequences of varying lengths, drawn from three domains: daily life, robotics, and comics.

The paper makes two contributions. First, it introduces a novel benchmark for sequential image reasoning that tests whether MLLMs can interpret dynamic contexts and sequential visual information. Second, it uses a GPT-4-assisted evaluation method that accounts for hallucinations in MLLM outputs, specifically object and behavioral hallucinations. Here, a hallucination is a description in which the model invents actions or misrepresents the objects in a sequence and their behaviors.
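As we understand the method, GPT-4 extracts object and behavior keywords from each model's description, and these are compared with human-annotated keywords using recall, precision, and F1. The sketch below shows only the scoring step, assuming the keyword sets have already been extracted. It uses plain exact matching after lowercasing, a simplification that may be stricter than the paper's actual matching. The function and field names are hypothetical. Predicted keywords missing from the reference are reported as hallucinated, and they lower precision.

```python
from dataclasses import dataclass


@dataclass
class KeywordScores:
    recall: float
    precision: float
    f1: float
    hallucinated: set  # predicted keywords absent from the reference


def keyword_scores(predicted: set, reference: set) -> KeywordScores:
    """Score keywords extracted from a model description (hypothetical helper)
    against human-annotated reference keywords, using exact matching."""
    # Normalize case and surrounding whitespace so "Pour " matches "pour".
    pred = {k.strip().lower() for k in predicted}
    ref = {k.strip().lower() for k in reference}
    hits = len(pred & ref)
    recall = hits / len(ref) if ref else 0.0
    precision = hits / len(pred) if pred else 0.0
    # When hits > 0, precision + recall > 0, so the division is safe.
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return KeywordScores(recall, precision, f1, pred - ref)


# Illustrative behavior keywords, not taken from the benchmark.
scores = keyword_scores({"pick up", "pour", "jump"}, {"pick up", "pour", "place"})
print(scores.hallucinated)  # {'jump'}
```

Applying the same function separately to object keywords and to behavior keywords yields the two kinds of scores that the object and behavioral hallucination analysis relies on.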

Key Findings

The paper evaluates nine recent MLLMs, including GPT-4V and Gemini, on the Mementos benchmark. The results show that these models struggle to describe the dynamic information in image sequences accurately and frequently hallucinate. In particular, the paper identifies three primary factors behind these reasoning failures:

- Correlation between Object and Behavioral Hallucinations: MLLMs often produce errors due to incorrect object identification, which cascades into behavior misinterpretations.

- Impact of Co-Occurring Behaviors: Behaviors that frequently occur together can lead models to infer behaviors that are absent from a sequence. This suggests that their reasoning is driven by learned patterns rather than by the actual visual context.

- Compounding Impact of Behavioral Hallucinations: Initial misinterpretations can accumulate, so that subsequent frames or events are also described inaccurately. The temporal nature of sequences exacerbates this effect.
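The second factor can be made concrete with a small, hypothetical analysis. This summary does not say how the paper measured co-occurrence, so the following is only an illustration, not the authors' procedure. The sketch counts how often pairs of behaviors appear together in a corpus of annotated sequences. It then flags a hallucinated behavior when that behavior frequently co-occurs with a behavior that really is in the sequence. The function names, the `min_count` threshold, and the toy corpus are all assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations


def behavior_cooccurrence(annotations: list) -> Counter:
    """Count how often each unordered pair of behaviors appears in the same
    annotated sequence (hypothetical helper)."""
    counts: Counter = Counter()
    for behaviors in annotations:
        # Sorting gives each pair a canonical (alphabetical) order.
        for a, b in combinations(sorted(behaviors), 2):
            counts[(a, b)] += 1
    return counts


def cooccurrence_driven(hallucinated: set, reference: set,
                        counts: Counter, min_count: int = 2) -> set:
    """Return hallucinated behaviors that co-occur at least min_count times
    with some behavior actually present in the sequence."""
    flagged = set()
    for h in hallucinated:
        for r in reference:
            pair = tuple(sorted((h, r)))
            if counts[pair] >= min_count:  # missing pairs count as 0
                flagged.add(h)
                break
    return flagged


# Toy annotations, invented for illustration only.
corpus = [{"pick up", "pour"}, {"pick up", "pour", "stir"},
          {"pick up", "pour"}, {"walk", "wave"}]
counts = behavior_cooccurrence(corpus)
print(cooccurrence_driven({"pour", "wave"}, {"pick up"}, counts))  # {'pour'}
```

In this toy example, "pour" is flagged because it appears alongside "pick up" in three annotated sequences. A model that sees someone picking up a container may therefore "complete" the familiar pattern even though no pouring occurs.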

Implications and Future Developments

This work highlights significant practical and theoretical challenges in developing MLLMs with robust reasoning capabilities across sequences of images. Practically, the findings suggest the need for more refined approaches in training MLLMs to better handle dynamic, context-rich, and sequential data without falling into common pitfalls of hallucination. Theoretically, these results prompt a reevaluation of how current MLLMs understand temporal sequencing and logical connections, signaling a need for improved architectural designs that account for such complexities.

Future research could expand the Mementos benchmark by introducing more varied data, such as first-person navigation experiences or sequential medical datasets, potentially increasing the benchmark's complexity and relevance. Additionally, refining the evaluation process beyond keyword matching to consider deeper semantic understanding could lead to advancements in assessing MLLM capabilities. Furthermore, targeted strategies focusing on reducing hallucinations and enhancing reasoning abilities could significantly benefit both the development and application of MLLMs in diverse fields like robotics and interactive systems.

In summary, this paper presents a critical advancement in evaluating MLLMs and offers insightful directions for enhancing the reasoning capabilities of future AI models.