Overview of Autoregressive Image Models

The development of large-scale vision models has been strongly shaped by principles first established in natural language processing (NLP). In particular, pre-training large neural networks to produce general-purpose features has driven major advances in NLP. A recent paper carries this approach over to vision with a family of models called Autoregressive Image Models (AIM). These models are pre-trained with an autoregressive objective that mirrors the next-token prediction used to train large language models (LLMs).
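To make "autoregressive objective" concrete for images, the sketch below shows one way to set it up. It rests on several assumptions. The image is cut into non-overlapping patches and read in raster order (left to right, top to bottom). Each patch is normalized to zero mean and unit variance. At each position, a model sees patches 0..k and regresses patch k+1 with a squared-error loss. The helper names are hypothetical. `causal_mean_predictor` is a deliberately trivial stand-in for a causal transformer and is not part of AIM. It exists only so the example runs end to end.

```python
import numpy as np


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into a raster-ordered sequence of flattened patches."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    gh, gw = h // patch, w // patch
    # (gh, p, gw, p, c) -> (gh, gw, p, p, c): grid cells in row-major (raster) order
    grid = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return grid.reshape(gh * gw, patch * patch * c)


def normalize_patches(patches: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Normalize each patch to zero mean and unit variance (assumed target format)."""
    mean = patches.mean(axis=1, keepdims=True)
    std = patches.std(axis=1, keepdims=True)
    return (patches - mean) / (std + eps)


def causal_mean_predictor(seq: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a causal transformer.

    Row k of the output is the mean of input rows 0..k, so it never looks ahead.
    """
    counts = np.arange(1, seq.shape[0] + 1)[:, None]
    return np.cumsum(seq, axis=0) / counts


def autoregressive_loss(image, patch, predictor=causal_mean_predictor) -> float:
    """Mean squared error of predicting patch k+1 from patches 0..k."""
    seq = normalize_patches(patchify(image, patch).astype(float))
    preds = predictor(seq[:-1])  # the prediction at position k sees patches 0..k
    targets = seq[1:]            # ...and is compared against patch k+1
    return float(np.mean((preds - targets) ** 2))
```

To replace the stand-in with a real network, the network only needs to satisfy one constraint: output row k may depend only on input rows 0..k. In a transformer, a causal attention mask enforces this, just as it does in LLMs.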

Key Findings and Performance Scaling

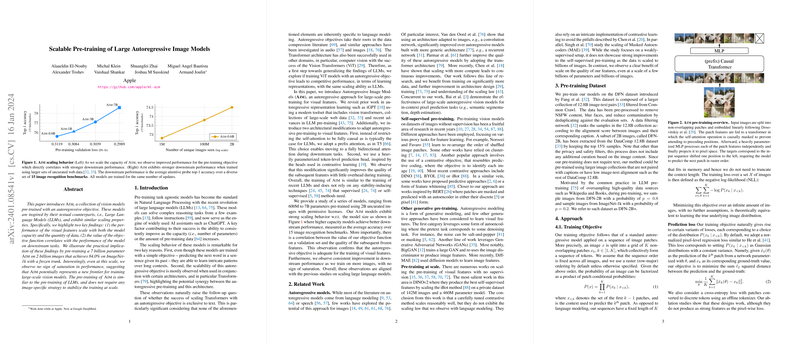

AIM's core findings are twofold. First, performance scales with both model capacity and the amount of training data. Second, the value of the pre-training objective correlates with performance on downstream tasks, so it can serve as a predictor of downstream quality. The authors demonstrate these trends by pre-training a model with 7 billion parameters on a dataset of 2 billion images. Notably, they observed no sign of saturation at this scale, which suggests that even larger vision models may continue to improve.
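The claim that the training objective predicts downstream performance can be checked with a simple statistic. The sketch below is one way to do that and is not necessarily the analysis the paper performs. It assumes you have recorded, for a set of checkpoints (or model sizes), the pre-training loss and a downstream accuracy. It then computes their Pearson correlation. The function name is hypothetical.

```python
import numpy as np


def loss_accuracy_correlation(pretrain_loss, downstream_acc) -> float:
    """Pearson correlation between pre-training loss and downstream accuracy.

    Each index is one checkpoint (or one model size). A value close to -1 means
    that lower pre-training loss reliably goes with higher downstream accuracy.
    """
    loss = np.asarray(pretrain_loss, dtype=float)
    acc = np.asarray(downstream_acc, dtype=float)
    if loss.shape != acc.shape or loss.ndim != 1 or loss.size < 2:
        raise ValueError("expected two 1-D sequences of equal length (>= 2)")
    return float(np.corrcoef(loss, acc)[0, 1])
```

Pearson correlation only measures linear association. If the relationship is monotone but curved, a rank correlation such as Spearman's is a reasonable alternative.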

Training Insights and Generalizability

The analysis also indicates that AIM's results do not depend on stabilization techniques specific to images. Instead, the models can be trained at scale in much the same way as LLMs. Across a diverse set of 15 image recognition benchmarks, AIM models achieve strong performance. This supports autoregressive pre-training as a general way to learn visual representations that transfer to many tasks.
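Benchmark evaluations of pre-trained representations are commonly run by freezing the backbone and training only a lightweight head on its features. The sketch below illustrates that idea under stated assumptions. It uses a plain linear (softmax) probe fitted by gradient descent on precomputed, frozen features. This is not necessarily the probing head behind AIM's reported numbers. The feature extractor is assumed, and `train_linear_probe` and `probe_accuracy` are hypothetical helpers.

```python
import numpy as np


def train_linear_probe(features, labels, num_classes, lr=0.1, steps=500, weight_decay=1e-4):
    """Fit a softmax classifier on frozen features.

    Only the probe weights (w, b) are learned; the backbone that produced
    `features` is never updated.
    """
    n, d = features.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(steps):
        logits = features @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * (features.T @ grad + weight_decay * w)
        b -= lr * grad.sum(axis=0)
    return w, b


def probe_accuracy(features, labels, w, b) -> float:
    """Fraction of samples whose highest-scoring class matches the label."""
    return float(np.mean(np.argmax(features @ w + b, axis=1) == labels))
```

A linear probe is the most restrictive choice: it measures how much task-relevant information is linearly accessible in the frozen features. More expressive heads can score higher on the same features.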

Conclusions and Future Directions

AIM's methodology is a promising direction for large-scale vision models. It shows that autoregressive pre-training scales with model size and can exploit large uncurated datasets. Because performance had not saturated, future work could train even larger models for longer, with further gains expected if the observed scaling trends hold. More broadly, AIM motivates research on scalable, efficient vision models that make use of the vast amount of available imagery without being tailored to specific kinds of visual content.