Introduction

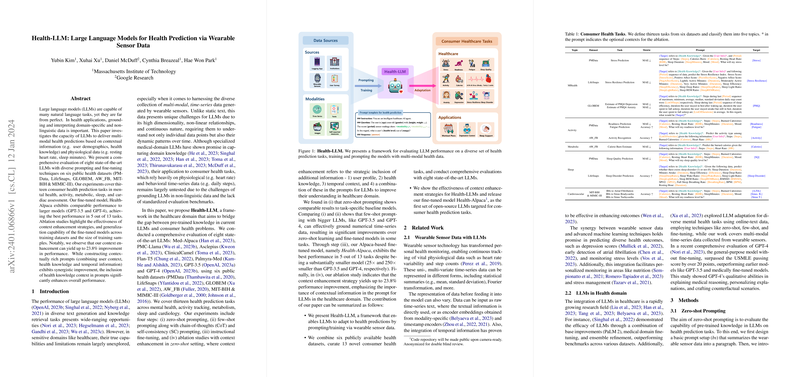

LLMs have shown strong performance across a range of text generation and information retrieval tasks. In healthcare, however, their ability to process multi-modal data, especially time-series physiological and behavioral data from wearable sensors, has yet to be thoroughly examined. The paper addresses this gap by proposing Health-LLM, a framework that evaluates eight state-of-the-art LLMs on health predictions from wearable sensor data. The evaluation covers several prompting and fine-tuning techniques across thirteen health prediction tasks.

Methodology

Health-LLM evaluates the models on health prediction tasks in two ways: through zero-shot and few-shot prompting, and through instruction fine-tuning. Zero-shot prompting assesses a model's built-in knowledge without additional training, while few-shot prompting places a few worked examples in the prompt for the model to follow. Instruction fine-tuning goes a step further by adapting the model itself to task-specific data. The paper also examines context enhancement, in which supplementary information such as user demographics or health knowledge is added to the prompt to improve predictions.
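To make the difference between these prompting modes concrete, here is a minimal sketch of how such prompts might be assembled. It is not the paper's actual template: the `Example` class, the `build_prompt` function, the field labels ("Task", "Data", "Answer"), and the sample readings are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Example:
    """One labeled sample: a text rendering of sensor readings plus its answer."""
    readings: str
    answer: str


def build_prompt(
    question: str,
    readings: str,
    examples: Sequence[Example] = (),
    user_context: Optional[str] = None,
    health_knowledge: Optional[str] = None,
) -> str:
    """Assemble a zero-shot or few-shot prompt, optionally with extra context.

    Hypothetical layout for illustration only, not the paper's template.
    """
    parts = []
    # Context enhancement: optional background goes before the task itself.
    if health_knowledge:
        parts.append(f"Health knowledge: {health_knowledge}")
    if user_context:
        parts.append(f"User context: {user_context}")
    parts.append(f"Task: {question}")
    # Few-shot: each worked example uses the same layout as the final query.
    for ex in examples:
        parts.append(f"Data: {ex.readings}\nAnswer: {ex.answer}")
    # The query comes last, with the answer left blank for the model to fill.
    parts.append(f"Data: {readings}\nAnswer:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    question = "Rate the user's stress level from 0 to 5."
    query = "steps=[4200, 3900, 5100]; resting_hr=[62, 64, 61]"  # made-up values

    # Zero-shot: no examples and no added context.
    print(build_prompt(question, query))
    print("-" * 40)

    # Few-shot with context enhancement.
    print(build_prompt(
        question,
        query,
        examples=[Example("steps=[1200, 900, 1500]; resting_hr=[78, 81, 80]", "4")],
        user_context="age 34, office worker",
        health_knowledge="A sustained rise in resting heart rate can accompany stress.",
    ))
```

With no examples and no context arguments, the function produces a zero-shot prompt; passing `examples` turns it into a few-shot prompt, and `user_context` or `health_knowledge` adds the kind of supplementary information the paper calls context enhancement. Keeping all three modes in one function makes it easy to compare them on the same query.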

Findings

The paper found that zero-shot prompted LLMs tend to perform on par with task-specific baseline models. With few-shot prompting, larger models such as GPT-3.5 and GPT-4 showed a notable ability to interpret physiological time-series data. The fine-tuned Health-Alpaca model, despite being significantly smaller than its GPT counterparts, recorded the best performance on many tasks, which suggests that fine-tuning on health-specific data can make smaller LLMs competitive. Context enhancement also led to substantial gains, particularly when health knowledge was included in the prompt.

Implications and Ethical Considerations

The paper has significant implications for the healthcare domain. It suggests that LLMs have largely untapped potential for predicting health outcomes from wearable sensor data, which could improve patient monitoring and care. However, the authors flag critical ethical concerns such as privacy protection, bias mitigation, and the prevention of "model hallucination," where the model generates convincing yet incorrect predictions. They call for these concerns to be addressed, and for the safety and reliability of LLMs in health applications to be improved, before real-world deployment.

In conclusion, the paper lays the groundwork for future research on refining models' reasoning, enhancing personalization, and addressing data security in healthcare settings. Practical deployment of Health-LLM could mark a significant step toward AI-driven personalized healthcare, but it must be approached responsibly.